Dinesh K. Vishwakarma

Covariate conscious approach for Gait recognition based upon Zernike moment invariants

Nov 21, 2016

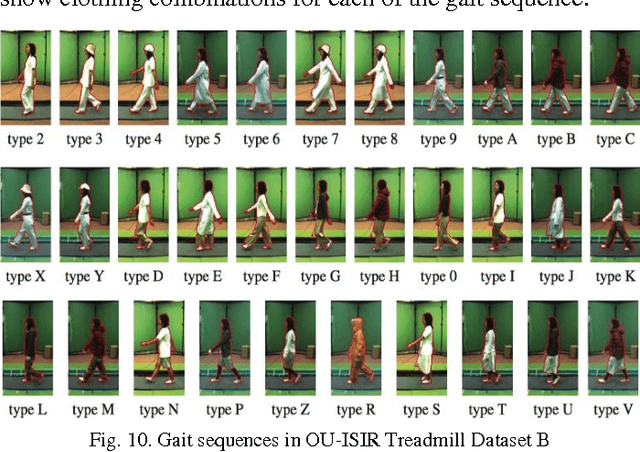

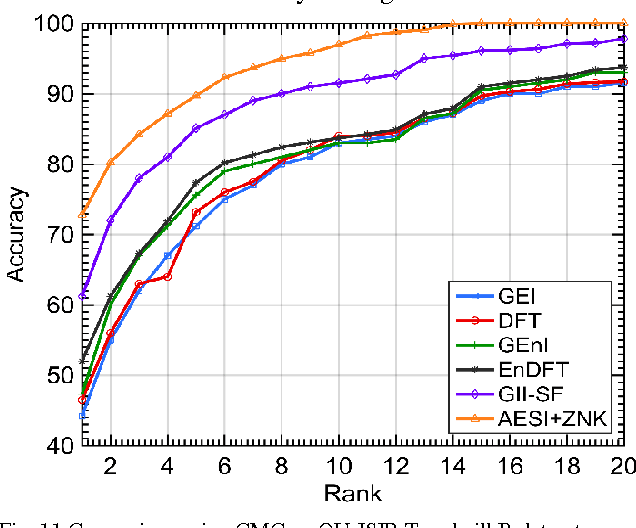

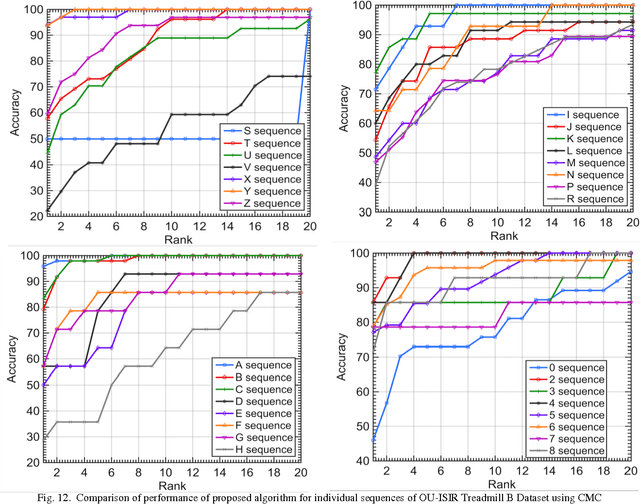

Abstract: Gait recognition, i.e. the identification of an individual from his or her walking pattern, is an emerging field. While existing gait recognition techniques perform satisfactorily under normal walking conditions, their performance tends to suffer drastically with variations in clothing and carrying conditions. In this work, we propose a novel covariate-cognizant framework to deal with the presence of such covariates. We describe gait motion by forming a single 2D spatio-temporal template from the video sequence, called the Average Energy Silhouette Image (AESI). Zernike moment invariants (ZMIs) are then computed to screen the parts of the AESI affected by covariates. Following this, features are extracted using the Spatial Distribution of Oriented Gradients (SDOGs) and the novel Mean of Directional Pixels (MDPs) methods. The obtained features are fused to form the final feature set. Experimental evaluation of the proposed framework on three publicly available datasets, i.e. CASIA dataset B, the OU-ISIR Treadmill dataset B and the USF Human-ID challenge dataset, and comparison with recently published gait recognition approaches demonstrate its superior performance.
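
To make the idea of a single 2D spatio-temporal template concrete, the sketch below averages a sequence of binary silhouette frames pixel by pixel. This is only an illustration: the abstract does not spell out how the AESI is computed, so the plain pixel-wise mean, the function name `average_silhouette` and the toy frames are all assumptions, not the authors' implementation.

```python
from typing import List

# A binary silhouette frame: 1 = subject pixel, 0 = background.
Frame = List[List[int]]


def average_silhouette(frames: List[Frame]) -> List[List[float]]:
    """Pixel-wise mean of equally sized binary silhouette frames.

    Assumption: frames are already segmented, aligned and size-normalized.
    """
    if not frames:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    for frame in frames:
        if len(frame) != rows or any(len(row) != cols for row in frame):
            raise ValueError("all frames must share one shape")
    n = len(frames)
    # Each output pixel is the fraction of frames in which it was foreground.
    return [
        [sum(frame[r][c] for frame in frames) / n for c in range(cols)]
        for r in range(rows)
    ]


# Hypothetical toy data: two 2x3 "silhouettes".
frames = [
    [[0, 1, 0], [1, 1, 0]],
    [[0, 1, 1], [0, 1, 0]],
]
template = average_silhouette(frames)
```

In such a template, pixels that are foreground in every frame keep the value 1.0, while pixels that move in and out of the silhouette take intermediate values, which is what lets one image summarize motion over the sequence.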
