Die Luo

ParamNet: A Parameter-variable Network for Fast Stain Normalization

May 11, 2023

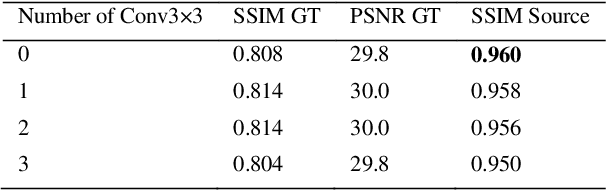

Abstract: In practice, digital pathology images are affected by many factors, resulting in large differences in color and brightness. Stain normalization can effectively reduce these differences and thus improve the performance of computer-aided diagnostic systems. Conventional stain normalization methods rely on one or several reference images, but a handful of images can hardly represent an entire dataset. Learning-based stain normalization methods are more general, but they use complex deep networks, which not only greatly reduce computational efficiency but also risk introducing artifacts. StainNet is a fast and robust stain normalization network, but its very simple structure gives it insufficient capacity for complex stain normalization. In this study, we propose a parameter-variable stain normalization network, ParamNet. ParamNet contains a parameter prediction sub-network and a color mapping sub-network; the parameter prediction sub-network automatically determines appropriate parameters for the color mapping sub-network for each input image. This parameter variability gives the network sufficient capacity for a variety of stain normalization tasks. The color mapping sub-network is a fully 1x1 convolutional network with a total of 59 variable parameters, which makes the network extremely computationally efficient and does not introduce artifacts. Results on cytopathology and histopathology datasets show that ParamNet outperforms state-of-the-art methods and can effectively improve the generalization of classifiers on pathology diagnosis tasks. The code is available at https://github.com/khtao/ParamNet.
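The two-part design described in this abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation: the hidden width of 8 is an assumption chosen because a 3 → 8 → 3 stack of 1x1 convolutions with biases has exactly 59 parameters, and `predict_params` with its random projection `proj` is a hypothetical stand-in for the real (learned) parameter prediction sub-network.

```python
import numpy as np

HIDDEN = 8  # assumed hidden width: 3*8 + 8 + 8*3 + 3 = 59 parameters
N_PARAMS = 3 * HIDDEN + HIDDEN + HIDDEN * 3 + 3


def predict_params(image, proj):
    """Hypothetical stand-in for the parameter prediction sub-network.

    Summarizes the image by per-channel mean and standard deviation and
    maps those 6 numbers linearly to one parameter vector per image.
    """
    stats = np.concatenate([image.mean(axis=(0, 1)), image.std(axis=(0, 1))])
    return proj @ stats  # shape (N_PARAMS,)


def color_map(image, params):
    """Color mapping as two 1x1 convolutions (a per-pixel MLP, 3 -> HIDDEN -> 3)."""
    i = 0
    w1 = params[i:i + 3 * HIDDEN].reshape(3, HIDDEN)
    i += 3 * HIDDEN
    b1 = params[i:i + HIDDEN]
    i += HIDDEN
    w2 = params[i:i + HIDDEN * 3].reshape(HIDDEN, 3)
    i += HIDDEN * 3
    b2 = params[i:i + 3]
    hidden = np.maximum(image @ w1 + b1, 0.0)  # ReLU applied pixel by pixel
    return hidden @ w2 + b2


rng = np.random.default_rng(0)
image = rng.random((4, 5, 3))                    # toy H x W x RGB image
proj = rng.normal(size=(N_PARAMS, 6)) * 0.1      # untrained, illustrative weights
params = predict_params(image, proj)             # parameters depend on the input
out = color_map(image, params)                   # same spatial size as the input
```

Because a 1x1 convolution never mixes neighboring pixels, each output pixel depends only on the matching input pixel and the predicted parameters, which is consistent with the abstract's point that the mapping is cheap and does not create spatial artifacts.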

StainNet: a fast and robust stain normalization network

Jan 16, 2021

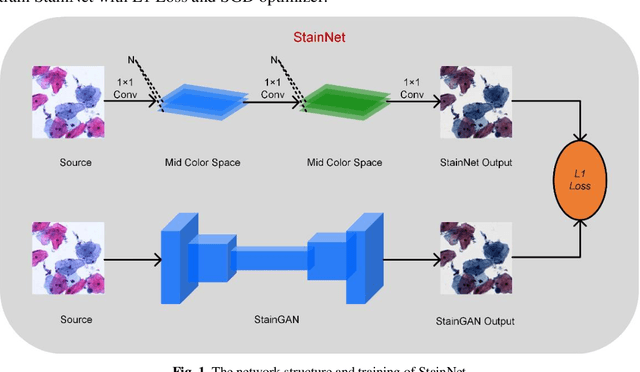

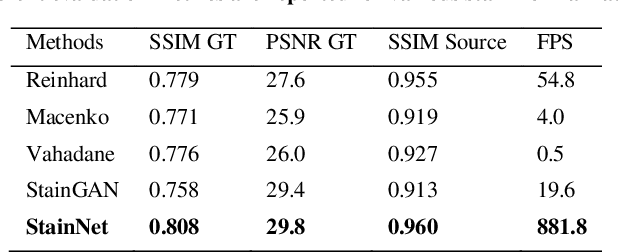

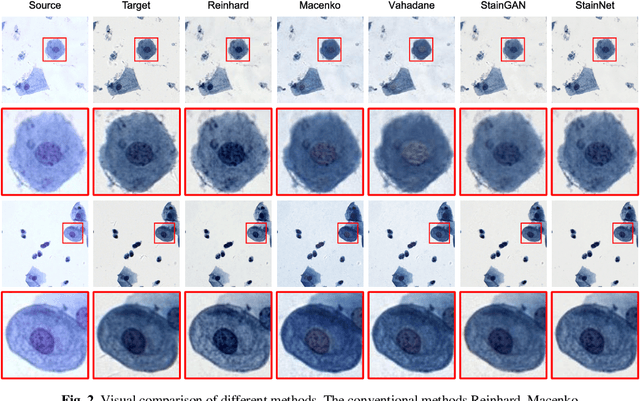

Abstract: Due to a variety of factors, pathological images show large color variability, which hampers the performance of computer-aided diagnosis (CAD) systems. Stain normalization has been used to reduce this color variability and increase the accuracy of CAD systems. Conventional methods perform stain normalization pixel by pixel, but they estimate stain parameters from a single reference image and can therefore produce inaccurate normalization results. Current deep learning-based methods can automatically extract the color distribution and do not need a representative reference image. However, they have complex structures with millions of parameters, relatively low computational efficiency, and a risk of introducing artifacts. In this paper, a fast and robust stain normalization network with only 1.28K parameters, named StainNet, is proposed. StainNet learns the color mapping relationship from a whole dataset and adjusts color values in a pixel-to-pixel manner. The proposed method performs well in stain normalization and achieves better accuracy and image quality. Application results show that cervical cytology classification achieves higher accuracy after stain normalization with StainNet.
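To give a sense of scale for the 1.28K figure, the short sketch below counts the weights and biases in a stack of 1x1 convolutions. The 3 → 32 → 32 → 3 channel layout is an assumption used for illustration (the abstract does not state the layer widths), and `conv1x1_params` is a hypothetical helper; with that layout the count is 1283, which rounds to the reported 1.28K.

```python
def conv1x1_params(channels):
    """Count weights plus biases for a stack of 1x1 convolutions.

    A 1x1 convolution from c_in to c_out channels has c_in * c_out
    weights and c_out biases, independent of image size.
    """
    return sum(c_in * c_out + c_out
               for c_in, c_out in zip(channels, channels[1:]))


# Assumed layout: RGB in, two hidden layers of 32 channels, RGB out.
stainnet_like = conv1x1_params([3, 32, 32, 3])
print(stainnet_like)  # 1283
```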
