Dayeol Lee

Privacy-Preserving Decentralized AI with Confidential Computing

Oct 17, 2024

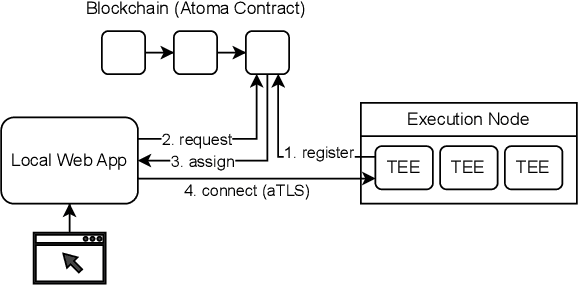

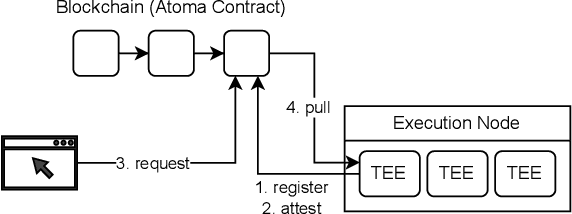

Abstract: This paper addresses privacy protection in decentralized Artificial Intelligence (AI) using Confidential Computing (CC) within the Atoma Network, a decentralized AI platform designed for the Web3 domain. Decentralized AI distributes AI services among multiple entities without centralized oversight, fostering transparency and robustness. However, this structure introduces significant privacy challenges, as sensitive assets such as proprietary models and personal data may be exposed to untrusted participants. Cryptography-based privacy protection techniques such as zero-knowledge machine learning (zkML) suffer from prohibitive computational overhead. To address this limitation, we propose using Confidential Computing, which relies on hardware-based Trusted Execution Environments (TEEs) to isolate the processing of sensitive data, so that both model parameters and user data remain secure even in decentralized, potentially untrusted environments. Although TEEs have some limitations, we believe they can bridge the privacy gap in decentralized AI. We explore how TEEs can be integrated into Atoma's decentralized framework.

Privacy-Preserving Machine Learning in Untrusted Clouds Made Simple

Sep 09, 2020

Abstract: We present a practical framework for deploying privacy-preserving machine learning (PPML) applications in untrusted clouds, based on a trusted execution environment (TEE). Specifically, we shield unmodified PyTorch ML applications by running them in Intel SGX enclaves with encrypted model parameters and encrypted input data, protecting the confidentiality and integrity of these secrets both at rest and at runtime. We use the open-source Graphene library OS with transparent file encryption and SGX-based remote attestation to minimize porting effort and to provide file protection and attestation seamlessly. Our approach is completely transparent to the machine learning application: neither the developer nor the end user needs to modify the ML application in any way.
