Davide Valsecchi

One flow to correct them all: improving simulations in high-energy physics with a single normalising flow and a switch

Mar 27, 2024

Abstract: Simulated events are key ingredients in almost all high-energy physics analyses. However, imperfections in the simulation can lead to sizeable differences between the observed data and simulated events. The effects of such mismodelling on relevant observables must be corrected either effectively via scale factors, with weights or by modifying the distributions of the observables and their correlations. We introduce a correction method that transforms one multidimensional distribution (simulation) into another one (data) using a simple architecture based on a single normalising flow with a boolean condition. We demonstrate the effectiveness of the method on a physics-inspired toy dataset with non-trivial mismodelling of several observables and their correlations.
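The idea of a single flow with a boolean switch can be illustrated with a deliberately simplified sketch. It is not the architecture from the paper: it assumes the flow is a per-feature affine map to a standard normal latent space, fitted in closed form by maximum likelihood, and it uses a hypothetical Gaussian toy dataset. All function names are illustrative. Simulated events are pushed to the latent space with the switch set to "simulation" and pulled back with the switch set to "data".

```python
import numpy as np

def fit_conditional_affine_flow(x, c):
    """Fit x = mu[c] + sigma[c] * z with z ~ N(0, 1), separately per boolean c.

    For an affine flow with a Gaussian base, the maximum-likelihood
    parameters are simply the sample mean and standard deviation.
    """
    params = {}
    for flag in (False, True):
        sel = x[c == flag]
        params[flag] = (sel.mean(axis=0), sel.std(axis=0))
    return params

def forward(x, flag, params):
    """Map observables to the latent space under condition `flag`."""
    mu, sigma = params[flag]
    return (x - mu) / sigma

def inverse(z, flag, params):
    """Map latent values back to observables under condition `flag`."""
    mu, sigma = params[flag]
    return mu + sigma * z

def morph_simulation_to_data(x_sim, params):
    """Correct simulation: forward with c=False, inverse with c=True."""
    z = forward(x_sim, False, params)
    return inverse(z, True, params)

# Hypothetical toy dataset: simulation (c=False) and mismodelled "data" (c=True).
rng = np.random.default_rng(0)
sim = rng.normal(0.0, 1.0, size=(20000, 2))
data = rng.normal([0.3, -0.2], [1.2, 0.8], size=(20000, 2))

x = np.concatenate([sim, data])
c = np.concatenate([np.zeros(len(sim), dtype=bool), np.ones(len(data), dtype=bool)])

params = fit_conditional_affine_flow(x, c)
corrected = morph_simulation_to_data(sim, params)
```

Because the map here is affine and acts on each feature independently, it can only align means and widths. Correcting shapes and correlations, as the abstract describes, would require a more expressive conditional flow trained by gradient descent.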

Deep learning techniques for energy clustering in the CMS ECAL

Apr 21, 2022

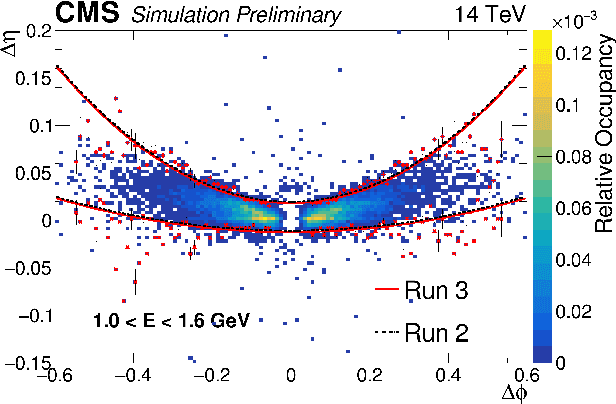

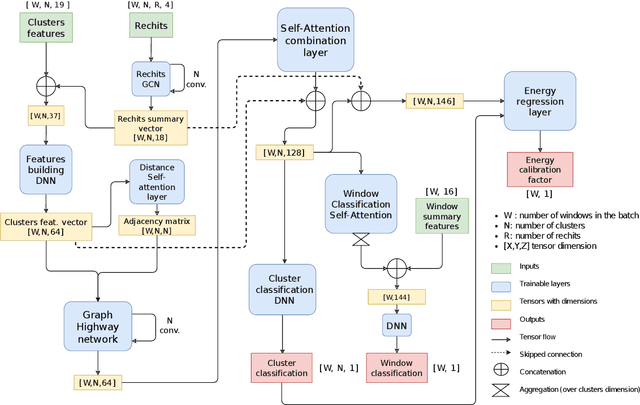

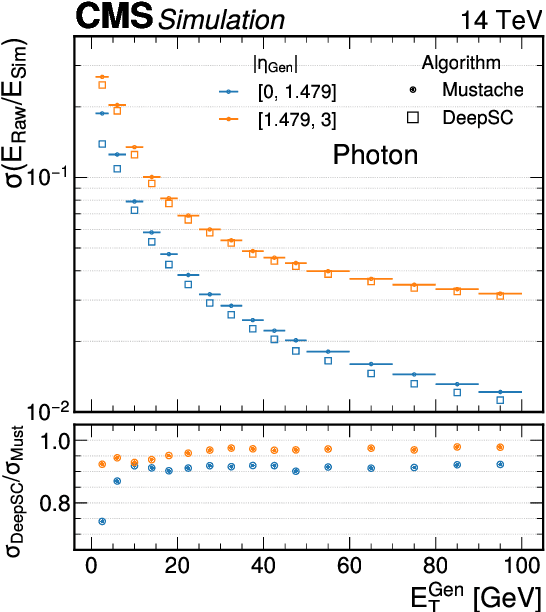

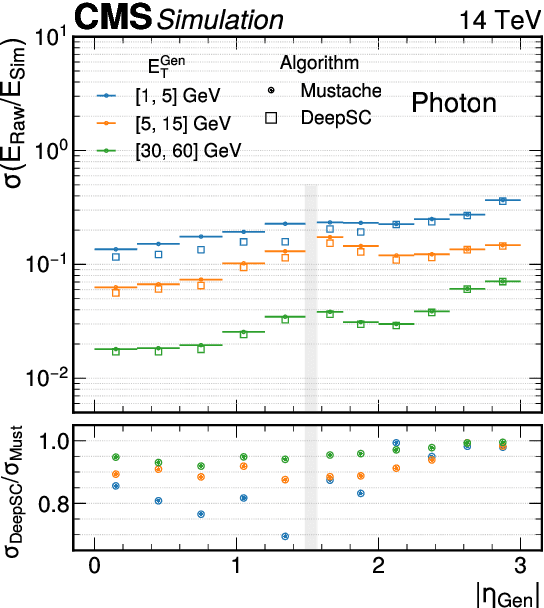

Abstract: The reconstruction of electrons and photons in CMS depends on topological clustering of the energy deposited by an incident particle in different crystals of the electromagnetic calorimeter (ECAL). These clusters are formed by aggregating neighbouring crystals according to the expected topology of an electromagnetic shower in the ECAL. The presence of upstream material (beampipe, tracker and support structures) causes electrons and photons to start showering before reaching the calorimeter. This effect, combined with the 3.8 T CMS magnetic field, leads to energy being spread in several clusters around the primary one. It is essential to recover the energy contained in these satellite clusters in order to achieve the best possible energy resolution for physics analyses. Historically, satellite clusters have been associated with the primary cluster using a purely topological algorithm which does not attempt to remove spurious energy deposits from additional pileup interactions (PU). The performance of this algorithm is expected to degrade during LHC Run 3 (2022+) because of the larger average PU levels and the increasing levels of noise due to the ageing of the ECAL detector. New methods are being investigated that exploit state-of-the-art deep learning architectures like Graph Neural Networks (GNN) and self-attention algorithms. These more sophisticated models improve the energy collection and are more resilient to PU and noise, helping to preserve the electron and photon energy resolution achieved during LHC Runs 1 and 2. This work will cover the challenges of training the models as well as the opportunity that this new approach offers to unify the ECAL energy measurement with the particle identification steps used in the global CMS photon and electron reconstruction.
