Ciaran Evans

Sequential changepoint detection for label shift in classification

Sep 18, 2020

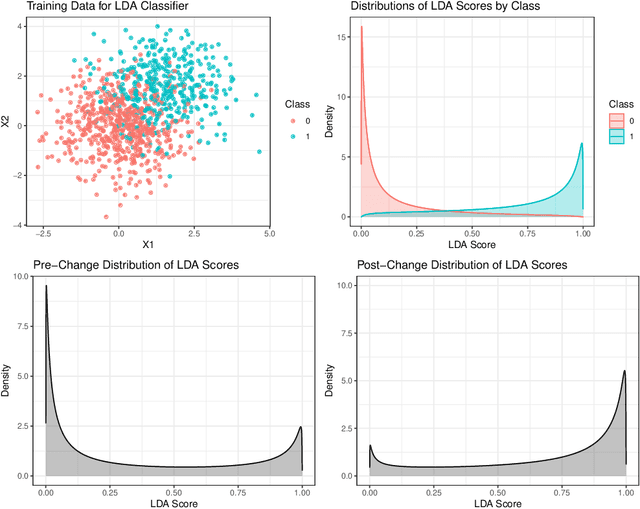

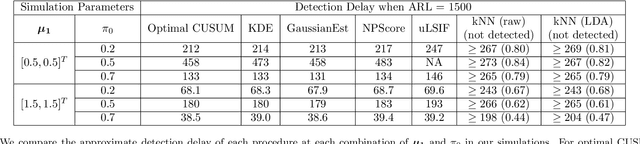

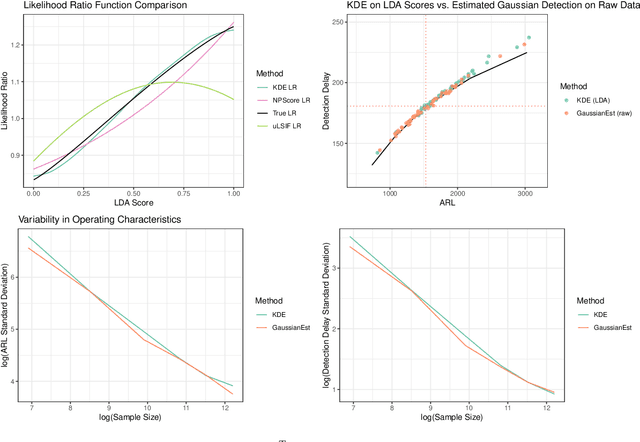

Abstract: Classifier predictions often rely on the assumption that new observations come from the same distribution as the training data. When the underlying distribution changes, so does the optimal classifier rule, and predictions may no longer be valid. We consider the problem of detecting a change in the overall fraction of positive cases, known as label shift, in sequentially observed binary classification data. We reduce this problem to that of detecting a change in the one-dimensional classifier scores, which allows us to develop simple nonparametric sequential changepoint detection procedures. Our procedures leverage classifier training data to estimate the detection statistic, and converge to their parametric counterparts as the size of the training data grows. In simulations, we show that our method compares favorably to other detection procedures in the label shift setting.
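To illustrate the reduction to one-dimensional scores, here is a minimal sketch of a CUSUM detector run on classifier scores. It is not the paper's procedure: it assumes the score is a calibrated estimate of P(Y = 1 | x) under the training prevalence `pi0`, and that the post-change prevalence `pi1` is specified in advance, whereas the abstract describes estimating the detection statistic nonparametrically from training data. Under label shift the class-conditional score distributions are unchanged, so with a calibrated score s the post- to pre-change density ratio is (pi1/pi0) s + ((1 - pi1)/(1 - pi0)) (1 - s). The function names, `pi0`, `pi1`, and `threshold` are all hypothetical.

```python
import math


def label_shift_log_lr(score, pi0, pi1):
    """Log density ratio (post-change / pre-change) of a classifier score.

    Assumption (not from the source): `score` is a calibrated estimate of
    P(Y=1 | x) under the training prevalence pi0, and only the prevalence
    changes (label shift), from pi0 to pi1.
    """
    ratio = (pi1 / pi0) * score + ((1 - pi1) / (1 - pi0)) * (1 - score)
    return math.log(ratio)


def cusum_detect(scores, pi0, pi1, threshold):
    """Run a CUSUM recursion over scores observed one at a time.

    Returns the 1-based index at which the statistic first reaches
    `threshold`, or None if no alarm is raised.
    """
    stat = 0.0
    for t, s in enumerate(scores, start=1):
        # Accumulate evidence for a change, resetting at zero.
        stat = max(0.0, stat + label_shift_log_lr(s, pi0, pi1))
        if stat >= threshold:
            return t
    return None


# Toy stream: low scores for 20 steps, then high scores for 20 steps.
stream = [0.1] * 20 + [0.9] * 20
alarm_time = cusum_detect(stream, pi0=0.2, pi1=0.6, threshold=3.0)
print(alarm_time)
```

A larger `threshold` lowers the false alarm rate at the cost of a longer detection delay; in practice it would be tuned to a target average run length.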
