Cem Bozsahin

Middle East Technical University, Ankara

Linguistic Analysis, Description, and Typological Exploration with Categorial Grammar (TheBench Guide)

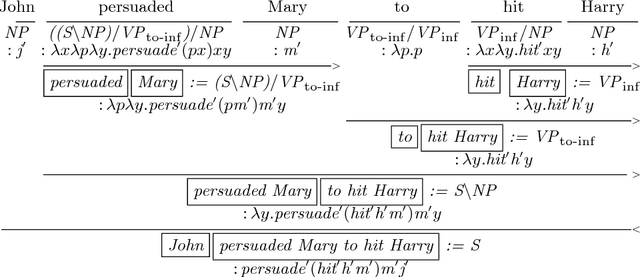

Jun 04, 2024

Abstract: TheBench is a tool to study monadic structures in natural language. It is for writing monadic grammars to explore analyses, to compare diverse languages through their categories, and to train models of grammar from form-meaning pairs where syntax is a latent variable. Monadic structures are binary combinations of elements that employ semantics of composition only. TheBench is essentially old-school categorial grammar to syntacticize the idea, with the implication that although syntax is autonomous (recall *colorless green ideas sleep furiously*), the treasure is in the baggage it carries at every step, viz. semantics, more narrowly, predicate-argument structures indicating choice of categorial reference and its consequent placeholders for decision in such structures. There is some new thought in old school. Unlike traditional categorial grammars, application is turned into composition in monadic analysis. Moreover, every correspondence requires specifying two command relations, one on syntactic command and the other on semantic command. A monadic grammar of TheBench contains only synthetic elements (called 'objects' in the category theory of mathematics) that are shaped by this analytic invariant, viz. composition. Both ingredients (command relations) of any analytic step must therefore be functions ('arrows' in category theory). TheBench is one implementation of the idea for iterative development of such functions along with a grammar of synthetic elements.

Mobile Sequencers

May 09, 2024

Abstract: The article is an attempt to contribute to explorations of a common origin for language and planned-collaborative action. It gives 'semantics of change' center stage in the synthesis, from its history and recordkeeping to its development, its syntax, delivery and reception, including substratal aspects. It is suggested that to arrive at a common core, linguistic semantics must be understood as studying, through syntax, a mobile agent's representing, tracking and coping with change and no change. Semantics of actions can be conceived the same way, but through plans instead of syntax. The key point is the following: sequencing itself, of words and action sequences, brings more structural interpretation to the sequence than is immediately evident from the sequents themselves. Mobile sequencers can be understood as subjects structuring reporting, understanding and keeping track of change and no change. The idea invites rethinking of the notion of category, both in language and in planning. Understanding how mobile agents understand change is suggested to be about human extended practice, not extended-human practice. That's why linguistics is as important as computer science in the synthesis. It must rely on the representational history of acts, thoughts and expressions, personal and public, crosscutting overtness and covertness of these phenomena. It has implications for anthropology in the extended practice, which are covered briefly.

Paracompositionality, MWEs and Argument Substitution

May 22, 2018

Abstract: Multi-word expressions, verb-particle constructions, idiomatically combining phrases, and phrasal idioms have something in common: not all of their elements contribute to the argument structure of the predicate implicated by the expression. Radically lexicalized theories of grammar that avoid string-, term-, logical form-, and tree-writing, and categorial grammars that avoid the wrap operation, make predictions about the categories involved in verb-particles and phrasal idioms. They may require singleton types, which can only substitute for one value, not just for one kind of value. These types are asymmetric: they can be arguments only. They also narrowly constrain the kind of semantic value that can correspond to such syntactic categories. Idiomatically combining phrases do not subcategorize for singleton types, and they exploit another locally computable and compositional property of a correspondence, namely that every syntactic expression can project its head word. Such MWEs can be seen as empirically realized categorial possibilities, rather than lacunae in a theory of lexicalizable syntactic categories.

Deriving the Predicate-Argument Structure for a Free Word Order Language

Aug 20, 1998

Abstract: In relatively free word order languages, grammatical functions are intricately related to case marking. Assuming an ordered representation of the predicate-argument structure, this work proposes a Combinatory Categorial Grammar formulation of relating surface case cues to categories and types for correctly placing the arguments in the predicate-argument structure. This is achieved by assigning case markers GF-encoding categories. Unlike other CG formulations, type shifting does not proliferate or cause spurious ambiguity. Categories of all argument-encoding grammatical functions follow from the same principle of category assignment. Normal order evaluation of the combinatory form reveals the predicate-argument structure. Application of the method to Turkish is shown.

* 7 pages LaTeX; uses {times,avm,lingmacros,tree-dvips}.sty; minor corrections in the abstract

Morphological Productivity in the Lexicon

Aug 23, 1996

Abstract: In this paper we outline a lexical organization for Turkish that makes use of lexical rules for inflections, derivations, and lexical category changes to control the proliferation of lexical entries. Lexical rules handle changes in grammatical roles, enforce type constraints, and control the mapping of subcategorization frames in valency-changing operations. A lexical inheritance hierarchy facilitates the enforcement of type constraints. Semantic compositions in inflections and derivations are constrained by the properties of the terms and predicates. The design has been tested as part of an HPSG grammar for Turkish. In terms of performance, run-time execution of the rules seems to be a far better alternative than pre-compilation. The latter causes exponential growth in the lexicon due to intensive use of inflections and derivations in Turkish.

* 10 pages LaTeX, {lingmacros,avm,psfig}.sty, 1 figure, 1 bibtex file

A Categorial Framework for Composition in Multiple Linguistic Domains

Jun 29, 1995

Abstract: This paper describes a computational framework for a grammar architecture in which different linguistic domains such as morphology, syntax, and semantics are treated not as separate components but as compositional domains. Word and phrase formation are modeled as uniform processes contributing to the derivation of the semantic form. The morpheme, as well as the lexeme, has a lexical representation in the form of semantic content, tactical constraints, and phonological realization. The motivation for this work is to handle morphology-syntax interaction (e.g., valency change in causatives, subcategorization imposed by case-marking affixes) in an incremental way. The model is based on Combinatory Categorial Grammars.

* 7 pages LaTeX, CSNLP-95 (Dublin), uses {a4,avm,lingmacros}.sty
