Byung Hyung Kim

Improved explanatory efficacy on human affect and workload through interactive process in artificial intelligence

Dec 13, 2019

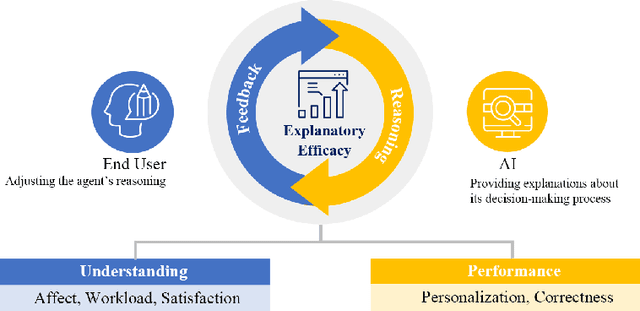

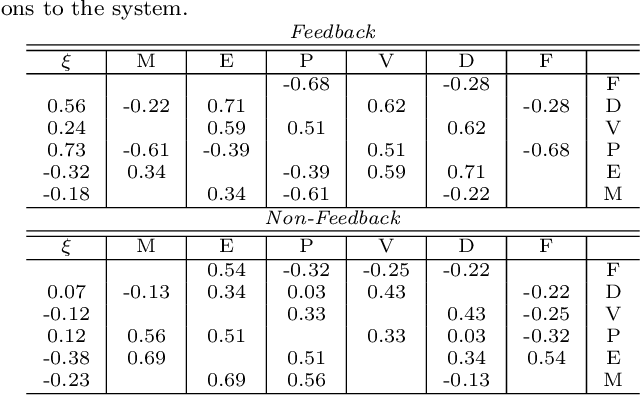

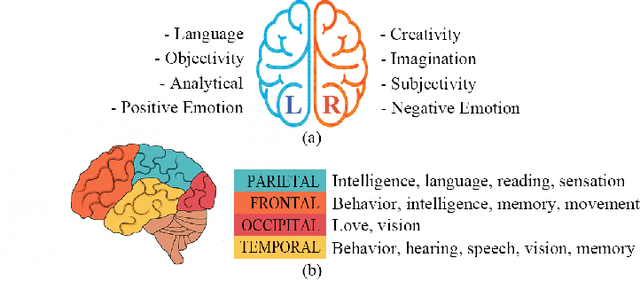

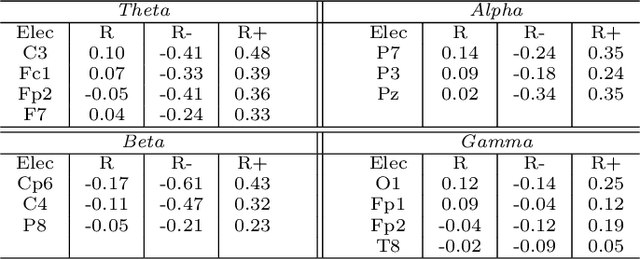

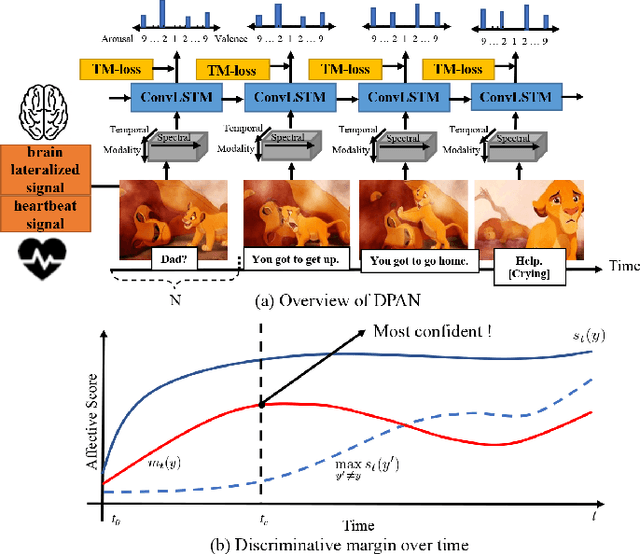

Abstract: Despite recent advances in explainable artificial intelligence systems, no concrete quantitative measure exists for evaluating the usability of such systems. Ensuring the success of an explanatory interface in interacting with users requires a cyclic, symbiotic relationship between human and artificial intelligence. We therefore propose explanatory efficacy, a novel metric for evaluating the strength of the cyclic relationship the interface exhibits. Furthermore, in a user study, we evaluated the perceived affect and workload of our participants and recorded their EEG signals as they interacted with our custom-built, iterative explanatory interface to build personalized recommendation systems. We found that systems for perceptually driven iterative tasks with greater explanatory efficacy are characterized by statistically significant hemispheric differences in neural signals, indicating the feasibility of neural correlates as a measure of explanatory efficacy. These findings are beneficial for researchers who aim to study the circular ecosystem of the human-artificial intelligence partnership.
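The abstract does not say how the hemispheric differences were computed. As a rough illustration only, one common way to express a left/right difference in EEG is a log-power asymmetry index between a pair of homologous channels. The sketch below assumes this index, uses mean squared amplitude as a stand-in for band power, and runs on made-up sample values; the function names `mean_power` and `asymmetry_index` are hypothetical and are not taken from the paper.

```python
import math


def mean_power(samples):
    """Mean squared amplitude, used here as a simple proxy for signal power."""
    return sum(x * x for x in samples) / len(samples)


def asymmetry_index(left, right):
    """Log-power asymmetry: ln(right power) - ln(left power).

    Positive values mean more power over the right channel.
    This index is an assumption for illustration, not the paper's measure.
    """
    return math.log(mean_power(right)) - math.log(mean_power(left))


# Synthetic signals (not real EEG): the right channel has twice the amplitude.
left_channel = [1.0, -1.0, 1.0, -1.0]
right_channel = [2.0, -2.0, 2.0, -2.0]

index = asymmetry_index(left_channel, right_channel)
print(f"asymmetry index: {index:.3f}")
```

In a real analysis the inputs would be band-limited power estimates per participant and condition, and the indices would then be compared with a statistical test.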

Wearable Affective Life-Log System for Understanding Emotion Dynamics in Daily Life

Nov 05, 2019

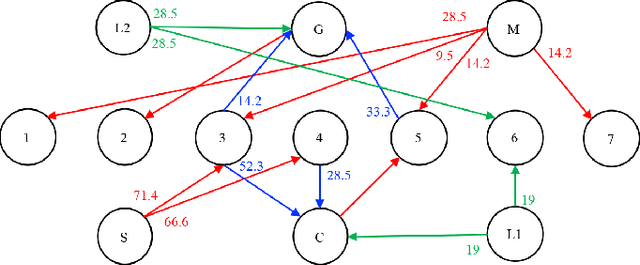

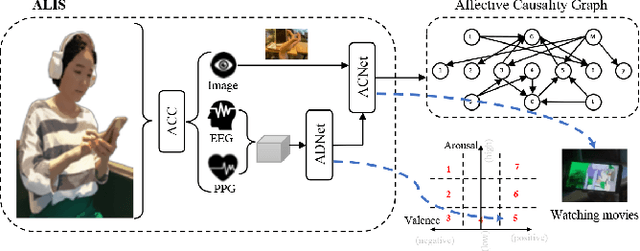

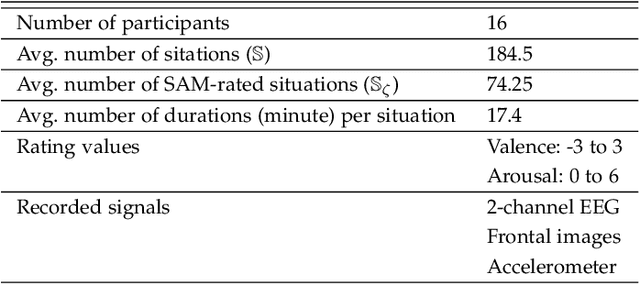

Abstract: Past research on recognizing human affect has used a variety of physiological sensors in many ways. Nonetheless, how affective dynamics are influenced in the context of human daily life has not yet been explored. In this work, we present a wearable affective life-log system (ALIS) that is robust and easy to use in daily life, and that detects emotional changes and determines their cause-and-effect relationships in users' lives. The proposed system uses physiological sensors to record how a user feels in certain situations during long-term activities. Based on this long-term monitoring, the system analyzes how the contexts of the user's life affect his/her emotional changes. Furthermore, real-world experimental results demonstrate that the proposed wearable life-log system enables us to build causal structures to find effective stress relievers suited to every stressful situation in school life.

An Affective Situation Labeling System from Psychological Behaviors in Emotion Recognition

Nov 05, 2019

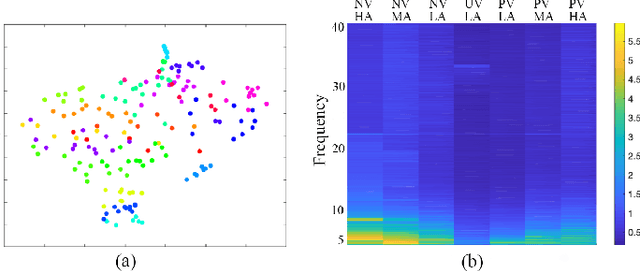

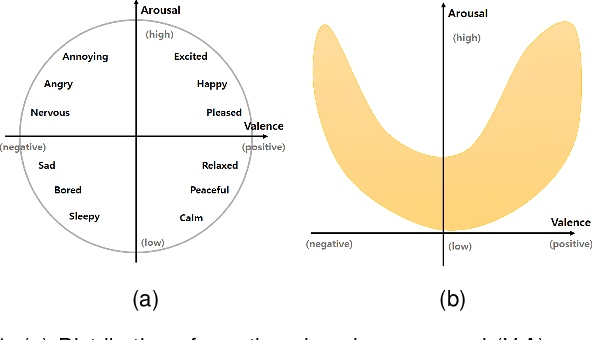

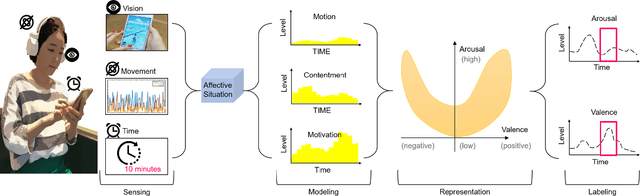

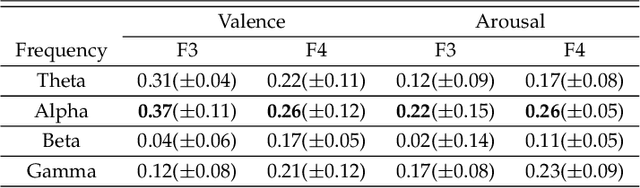

Abstract: This paper presents a computational framework, called A-Situ, for providing affective labels to real-life situations. We first define an affective situation as a specific arrangement of affective entities relevant to emotion elicitation in a situation. Then, the affective situation is represented as a set of labels in the valence-arousal emotion space. Based on physiological behaviors in response to a situation, the proposed framework quantifies the expected emotion evoked by the interaction with a stimulus event. The accumulated result in a spatiotemporal situation is represented as a polynomial curve called the affective curve, which bridges the semantic gap between cognitive and affective perception in real-world situations. We show the efficacy of the curve for reliable emotion labeling in real-world experiments concerning 1) a comparison between the results from our system and existing explicit assessments for measuring emotion, 2) physiological distinctiveness in emotional states, and 3) physiological characteristics correlated to continuous labels. The efficiency of affective curves in discriminating emotional states is evaluated through subject-dependent classification performance using bicoherence features to represent discrete affective states in the valence-arousal space. Furthermore, electroencephalography-based statistical analysis revealed the physiological correlates of the affective curves.
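The abstract describes the affective curve only as a polynomial curve summarizing accumulated emotion estimates. A minimal sketch of that general idea, assuming an ordinary least-squares polynomial fit over time-stamped valence values, is shown below. The fitting procedure, the degree, the data, and the names `fit_polynomial` and `evaluate` are all assumptions for illustration; the paper's actual construction may differ.

```python
def fit_polynomial(ts, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Returns coefficients [c0, c1, ..., cd] for c0 + c1*t + ... + cd*t**d.
    """
    n = degree + 1
    # Normal equations A c = b with A[i][j] = sum(t^(i+j)), b[i] = sum(y * t^i).
    a = [[sum(t ** (i + j) for t in ts) for j in range(n)] for i in range(n)]
    b = [sum(y * t ** i for t, y in zip(ts, ys)) for i in range(n)]

    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]

    # Back substitution.
    coeffs = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(a[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (b[i] - tail) / a[i][i]
    return coeffs


def evaluate(coeffs, t):
    """Evaluate the fitted polynomial at time t."""
    return sum(c * t ** i for i, c in enumerate(coeffs))


# Synthetic valence estimates over time (illustrative values, not real data).
times = [0.0, 1.0, 2.0, 3.0, 4.0]
valence = [0.5 - 0.2 * t + 0.1 * t * t for t in times]

curve = fit_polynomial(times, valence, degree=2)
print([round(c, 3) for c in curve])
```

The normal equations keep the example dependency-free; for higher degrees or long recordings, a numerically more stable solver from a linear algebra library would be the safer choice.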
