Birgit Hofer

Identifying non-natural language artifacts in bug reports

Oct 04, 2021

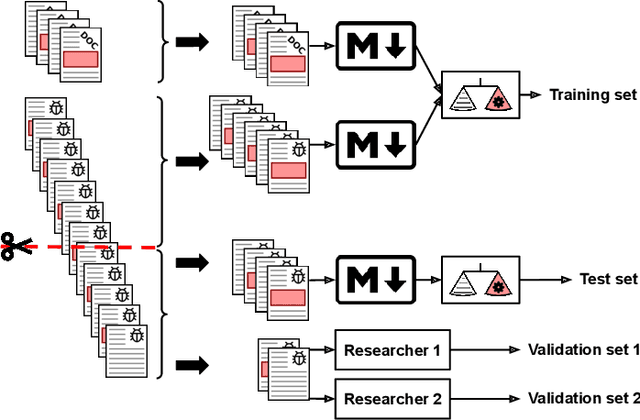

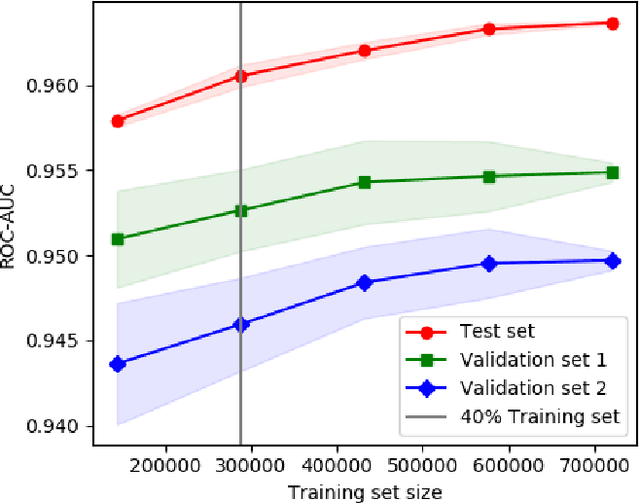

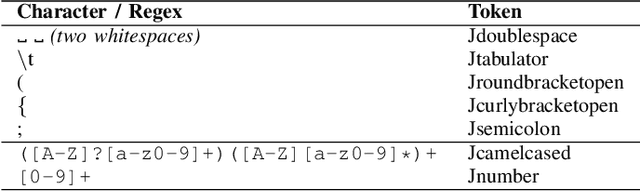

Abstract:Bug reports are a popular target for natural language processing (NLP). However, bug reports often contain artifacts such as code snippets, log outputs and stack traces. These artifacts not only inflate the bug reports with noise, but often constitute a real problem for the NLP approach at hand and have to be removed. In this paper, we present a machine learning based approach to classify content into natural language and artifacts at line level implemented in Python. We show how data from GitHub issue trackers can be used for automated training set generation, and present a custom preprocessing approach for bug reports. Our model scores at 0.95 ROC-AUC and 0.93 F1 against our manually annotated validation set, and classifies 10k lines in 0.72 seconds. We cross evaluated our model against a foreign dataset and a foreign R model for the same task. The Python implementation of our model and our datasets are made publicly available under an open source license.

Root cause prediction based on bug reports

Mar 03, 2021

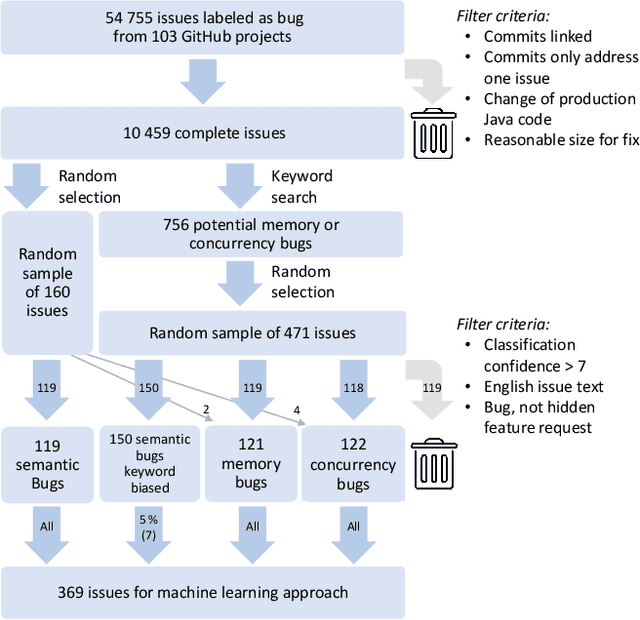

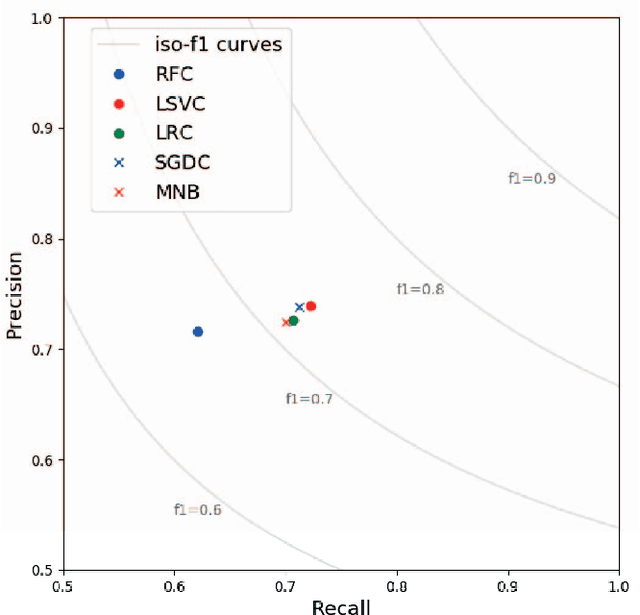

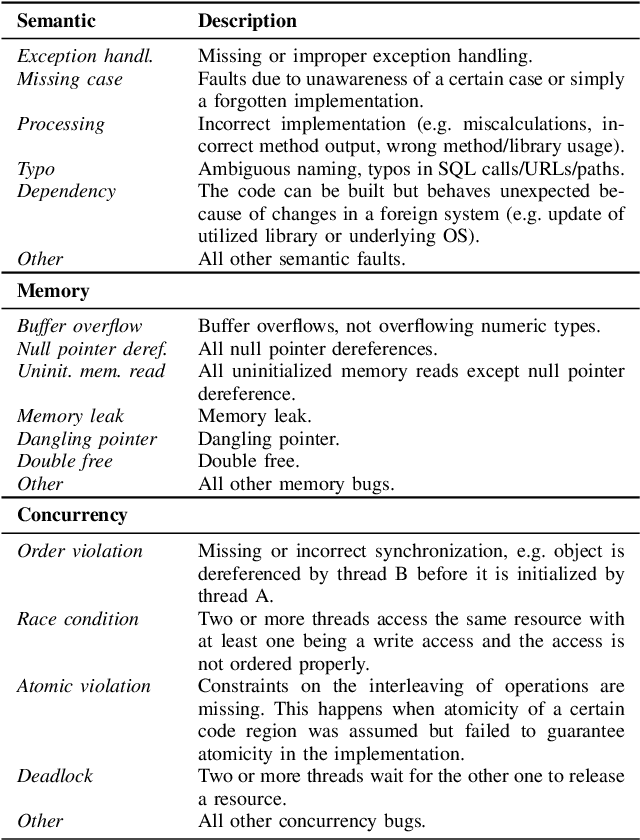

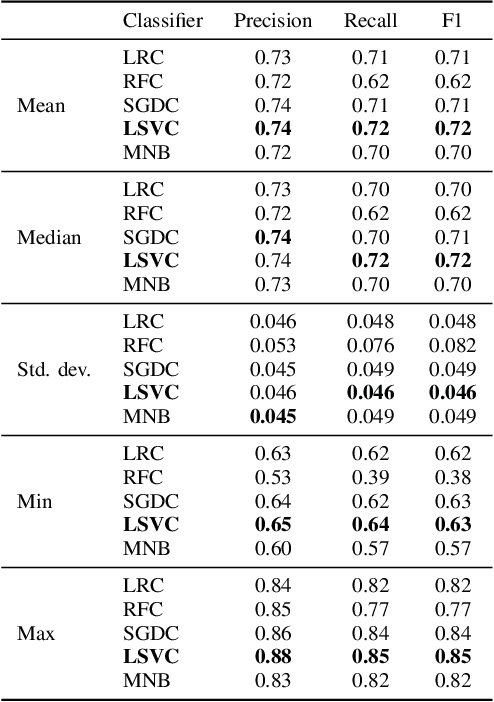

Abstract:This paper proposes a supervised machine learning approach for predicting the root cause of a given bug report. Knowing the root cause of a bug can help developers in the debugging process - either directly or indirectly by choosing proper tool support for the debugging task. We mined 54755 closed bug reports from the issue trackers of 103 GitHub projects and applied a set of heuristics to create a benchmark consisting of 10459 reports. A subset was manually classified into three groups (semantic, memory, and concurrency) based on the bugs' root causes. Since the types of root cause are not equally distributed, a combination of keyword search and random selection was applied. Our data set for the machine learning approach consists of 369 bug reports (122 concurrency, 121 memory, and 126 semantic bugs). The bug reports are used as input to a natural language processing algorithm. We evaluated the performance of several classifiers for predicting the root causes for the given bug reports. Linear Support Vector machines achieved the highest mean precision (0.74) and recall (0.72) scores. The created bug data set and classification are publicly available.

* 6 pages

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge