Baogang Zhang

Representable Matrices: Enabling High Accuracy Analog Computation for Inference of DNNs using Memristors

Nov 27, 2019

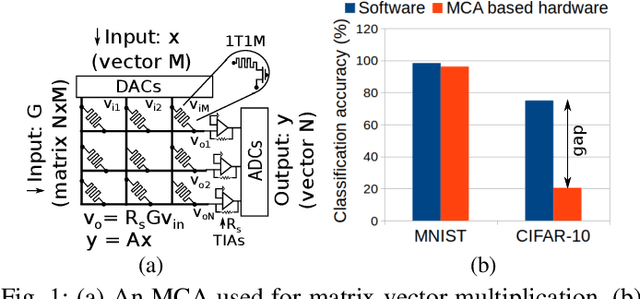

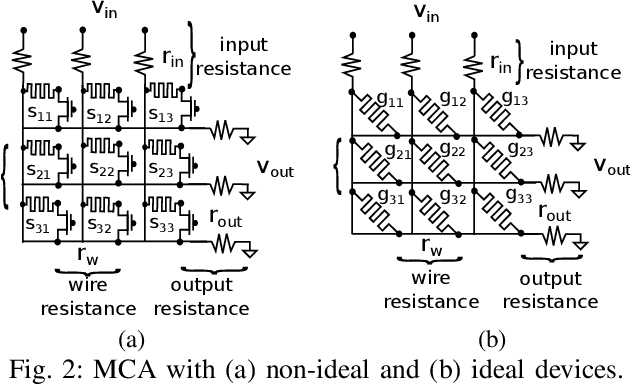

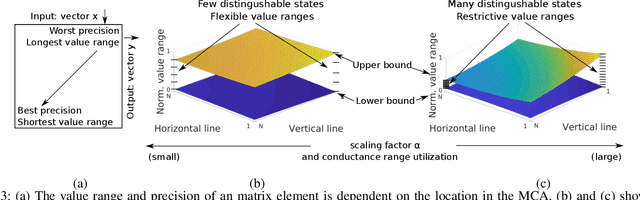

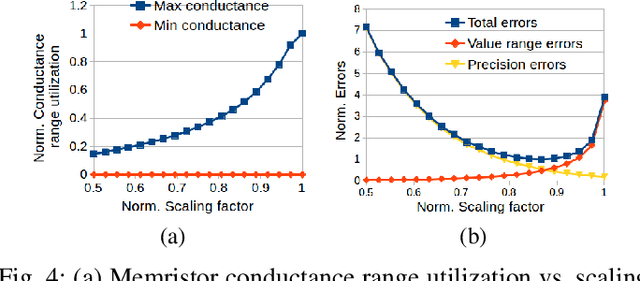

Abstract: Analog computing based on memristor technology is a promising approach to accelerating the inference phase of deep neural networks (DNNs). A fundamental problem is how to map an arbitrary matrix to a memristor crossbar array (MCA) while maximizing the resulting computational accuracy. The state-of-the-art mapping technique is based on a heuristic that is guaranteed to produce the correct output for only two input vectors. This paper proposes a technique that aims to produce the correct output for every input vector by specifying both the memristor conductance values and a scaling factor realized by the peripheral circuitry. The key insight is that the conductance matrix realized by an MCA only needs to be proportional to the target matrix. The choice of the scaling factor between the two matrices governs how much of the programmable memristor conductance range is used and how well the target matrix can be represented. The scaling factor is therefore set to balance precision errors against value range errors. Moreover, a technique for converting conductance values into state variables and vice versa is proposed to handle memristors with non-ideal device characteristics. Compared with the state-of-the-art technique, the proposed mapping results in 4X-9X smaller errors. These improvements raise the classification accuracy of a seven-layer convolutional neural network (CNN) on CIFAR-10 from 20.5% to 71.8%.
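The trade-off behind the scaling factor can be illustrated with a small sketch. This is not the paper's algorithm; it is a toy model under several assumptions that do not come from the abstract: the target matrix is non-negative, conductances are programmable to evenly spaced levels between `g_min` and `g_max`, the error is measured as a Frobenius norm, and the scaling factor is chosen by brute-force search over a candidate list. The function names (`quantize`, `mapping_error`, `best_scale`) are hypothetical.

```python
def quantize(g, g_min, g_max, levels):
    """Clip g into [g_min, g_max] (value range error), then round it to the
    nearest of `levels` evenly spaced conductance values (precision error)."""
    g = min(max(g, g_min), g_max)
    step = (g_max - g_min) / (levels - 1)
    return g_min + round((g - g_min) / step) * step


def mapping_error(W, s, g_min, g_max, levels):
    """Frobenius-norm error between W and the matrix recovered from the
    programmed conductances G = quantize(s * W), rescaled by 1 / s."""
    total = 0.0
    for row in W:
        for w in row:
            g = quantize(s * w, g_min, g_max, levels)
            total += (g / s - w) ** 2
    return total ** 0.5


def best_scale(W, g_min, g_max, levels, candidates):
    """Return the candidate scaling factor with the smallest mapping error."""
    return min(candidates, key=lambda s: mapping_error(W, s, g_min, g_max, levels))


# Illustrative values only (arbitrary units), assumed for this example.
W = [[0.1, 0.5], [0.9, 2.0]]
g_min, g_max, levels = 1.0, 10.0, 16
candidates = [0.5 * k for k in range(1, 41)]

s_star = best_scale(W, g_min, g_max, levels, candidates)
print("chosen scaling factor:", s_star)
print("mapping error:", mapping_error(W, s_star, g_min, g_max, levels))
```

In this toy model, a small scaling factor pushes small entries below `g_min`, while a large one pushes large entries above `g_max`; both cases are clipped. Between those extremes, the spacing of the conductance levels determines the rounding error. The search simply picks the candidate where the combined error is smallest.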
