Avetik G. Karagulyan

User-friendly guarantees for the Langevin Monte Carlo with inaccurate gradient

Sep 10, 2018

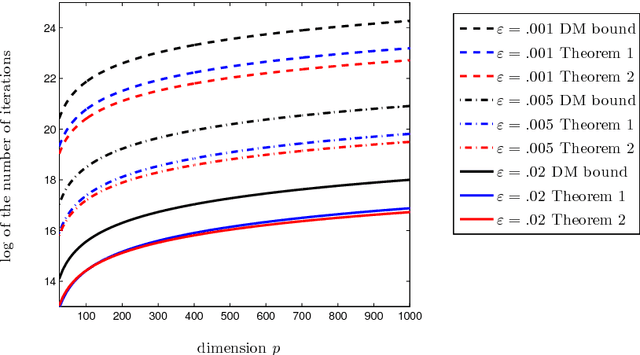

Abstract:In this paper, we study the problem of sampling from a given probability density function that is known to be smooth and strongly log-concave. We analyze several methods of approximate sampling based on discretizations of the (highly overdamped) Langevin diffusion and establish guarantees on its error measured in the Wasserstein-2 distance. Our guarantees improve or extend the state-of-the-art results in three directions. First, we provide an upper bound on the error of the first-order Langevin Monte Carlo (LMC) algorithm with optimized varying step-size. This result has the advantage of being horizon free (we do not need to know in advance the target precision) and to improve by a logarithmic factor the corresponding result for the constant step-size. Second, we study the case where accurate evaluations of the gradient of the log-density are unavailable, but one can have access to approximations of the aforementioned gradient. In such a situation, we consider both deterministic and stochastic approximations of the gradient and provide an upper bound on the sampling error of the first-order LMC that quantifies the impact of the gradient evaluation inaccuracies. Third, we establish upper bounds for two versions of the second-order LMC, which leverage the Hessian of the log-density. We nonasymptotic guarantees on the sampling error of these second-order LMCs. These guarantees reveal that the second-order LMC algorithms improve on the first-order LMC in ill-conditioned settings.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge