Aurora González Vidal

FLAegis: A Two-Layer Defense Framework for Federated Learning Against Poisoning Attacks

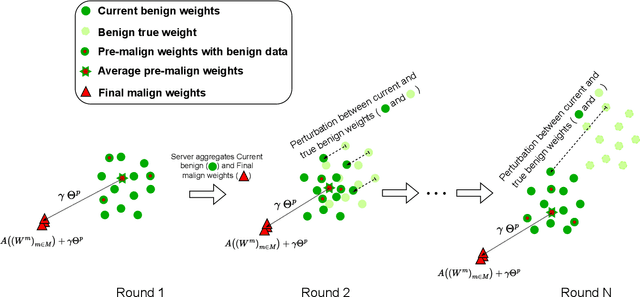

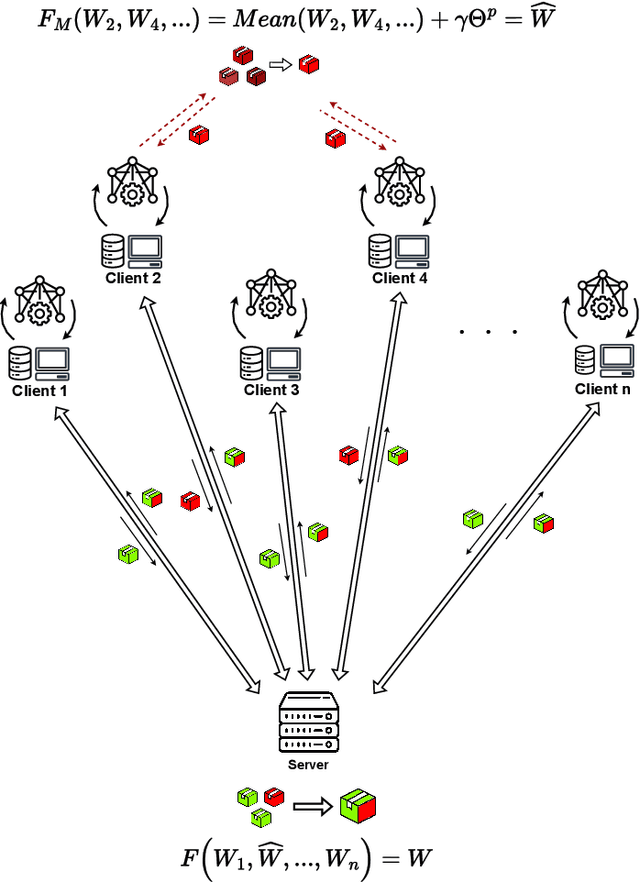

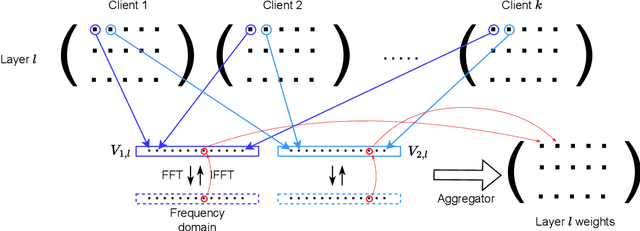

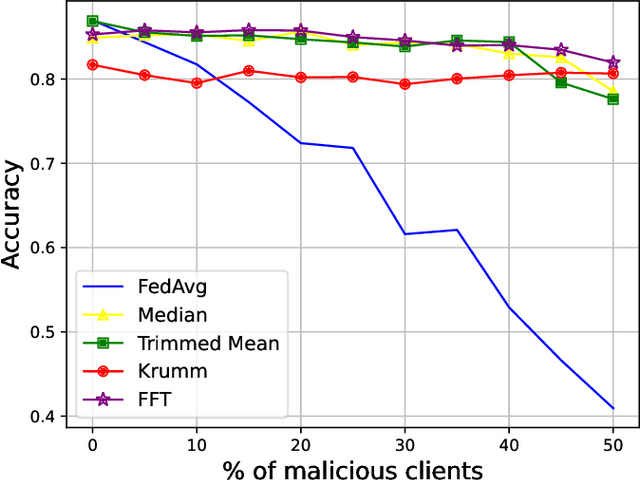

Aug 26, 2025

Abstract: Federated Learning (FL) has become a powerful technique for training Machine Learning (ML) models in a decentralized manner, preserving the privacy of the training datasets involved. However, the decentralized nature of FL limits the visibility of the training process, relying heavily on the honesty of participating clients. This assumption opens the door to malicious third parties, known as Byzantine clients, which can poison the training process by submitting false model updates. Such malicious clients may engage in poisoning attacks, manipulating either the dataset or the model parameters to induce misclassification. In response, this study introduces FLAegis, a two-stage defensive framework designed to identify Byzantine clients and improve the robustness of FL systems. Our approach leverages symbolic time series transformation (SAX) to amplify the differences between benign and malicious models, and spectral clustering, which enables accurate detection of adversarial behavior. Furthermore, we incorporate a robust FFT-based aggregation function as a final layer to mitigate the impact of those Byzantine clients that manage to evade prior defenses. We rigorously evaluate our method against five poisoning attacks, ranging from simple label flipping to adaptive optimization-based strategies. Notably, our approach outperforms state-of-the-art defenses in both detection precision and final model accuracy, maintaining consistently high performance even under strong adversarial conditions.
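As a rough illustration of the symbolic transformation mentioned in the abstract, the sketch below applies a textbook SAX encoding (z-normalization, piecewise aggregate approximation, then discretization at standard-normal quartiles) to a numeric series. The abstract does not say how FLAegis turns a model update into a series, nor which segment count or alphabet size it uses, so the function name `sax`, the four-letter alphabet, and the idea of feeding it a flattened update are all assumptions made for this example.

```python
import numpy as np

# Quartile breakpoints of the standard normal distribution, giving four
# equiprobable regions (assumed alphabet size of 4 for this sketch).
BREAKPOINTS = np.array([-0.6745, 0.0, 0.6745])


def sax(series, n_segments, alphabet="abcd"):
    """Encode a 1-D numeric series as a SAX word of length n_segments."""
    x = np.asarray(series, dtype=float)
    std = x.std()
    # z-normalize; a constant series maps to all zeros.
    z = (x - x.mean()) / std if std > 0 else np.zeros_like(x)
    # Piecewise aggregate approximation: mean of each (near-)equal segment.
    paa = np.array([seg.mean() for seg in np.array_split(z, n_segments)])
    # Map each segment mean to the index of the region it falls in.
    idx = np.searchsorted(BREAKPOINTS, paa)
    return "".join(alphabet[i] for i in idx)


# Hypothetical usage: a flattened client update treated as a series.
update = [0, 0, 0, 0, 10, 10, 10, 10]
print(sax(update, n_segments=2))
```

The resulting words could then be compared across clients and passed to a clustering step; that pairing is described in the abstract, but its details are not, so it is left out here.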

Federated Learning for Misbehaviour Detection with Variational Autoencoders and Gaussian Mixture Models

May 16, 2024

Abstract: Federated Learning (FL) has become an attractive approach to collaboratively train Machine Learning (ML) models while preserving the privacy of data sources. However, most existing FL approaches are based on supervised techniques, which may require resource-intensive activities and human intervention to obtain labelled datasets. Furthermore, in the scope of cyberattack detection, such techniques are not able to identify previously unknown threats. In this direction, this work proposes a novel unsupervised FL approach for the identification of potential misbehavior in vehicular environments. We leverage the computing capabilities of public cloud services for model aggregation purposes, and also as a central repository of misbehavior events, enabling cross-vehicle learning and collective defense strategies. Our solution integrates the use of Gaussian Mixture Models (GMM) and Variational Autoencoders (VAE) on the VeReMi dataset in a federated environment, where each vehicle is intended to train only with its own data. Furthermore, we use Restricted Boltzmann Machines (RBM) for pre-training purposes, and Fedplus as the aggregation function to enhance model convergence. Our approach provides better performance (more than 80 percent) compared to recent proposals, which are usually based on supervised techniques and artificial divisions of the VeReMi dataset.

FedRDF: A Robust and Dynamic Aggregation Function against Poisoning Attacks in Federated Learning

Feb 15, 2024

Abstract: Federated Learning (FL) represents a promising approach to typical privacy concerns associated with centralized Machine Learning (ML) deployments. Despite its well-known advantages, FL is vulnerable to security attacks such as Byzantine behaviors and poisoning attacks, which can significantly degrade model performance and hinder convergence. The effectiveness of existing approaches to mitigate complex attacks, such as median, trimmed mean, or Krum aggregation functions, has been only partially demonstrated in the case of specific attacks. Our study introduces a novel robust aggregation mechanism utilizing the Fourier Transform (FT), which can effectively handle sophisticated attacks without prior knowledge of the number of attackers. Employing this technique, weights generated by FL clients are projected into the frequency domain to ascertain their density function, selecting the one exhibiting the highest frequency. Consequently, malicious clients' weights are excluded. Our proposed approach was tested against various model poisoning attacks, demonstrating superior performance over state-of-the-art aggregation methods.
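One way to picture the idea of selecting the most frequent weight value from a Fourier-based density estimate is the sketch below. For each model parameter it histograms the values sent by the clients, smooths the histogram with a Gaussian kernel applied in the frequency domain, and returns the center of the densest bin. This is not the FedRDF implementation: the function name `fft_mode_aggregate`, the bin count, and the kernel bandwidth are assumptions chosen for illustration, and the abstract does not specify how the density is actually computed.

```python
import numpy as np


def fft_mode_aggregate(client_weights, n_bins=64, bandwidth_bins=2.0):
    """Per-parameter mode of client weights via FFT-smoothed histograms.

    client_weights: array-like of shape (n_clients, n_params).
    Returns an array of shape (n_params,).
    """
    w = np.asarray(client_weights, dtype=float)
    n_params = w.shape[1]
    padded = 2 * n_bins  # zero-padding limits circular wrap-around
    freqs = np.fft.rfftfreq(padded)  # cycles per bin
    # Fourier transform of a Gaussian with std = bandwidth_bins (in bins).
    gauss = np.exp(-2.0 * (np.pi * freqs * bandwidth_bins) ** 2)

    out = np.empty(n_params)
    for j in range(n_params):
        col = w[:, j]
        lo, hi = col.min(), col.max()
        if hi == lo:  # all clients agree on this parameter
            out[j] = lo
            continue
        hist, edges = np.histogram(col, bins=n_bins, range=(lo, hi))
        # Smooth the histogram by multiplying its spectrum with the kernel.
        spectrum = np.fft.rfft(hist, n=padded) * gauss
        density = np.fft.irfft(spectrum, n=padded)[:n_bins]
        centers = 0.5 * (edges[:-1] + edges[1:])
        out[j] = centers[np.argmax(density)]
    return out


# Hypothetical round: 8 benign clients near 1.0, 3 attackers sending 10.0.
benign = [[1.0 + 0.01 * i] for i in range(8)]
attackers = [[10.0]] * 3
print(fft_mode_aggregate(np.array(benign + attackers)))
```

In this toy round the plain mean is pulled toward the attackers' value, while the density peak stays with the benign majority, and no attacker count has to be supplied. The result is only as precise as the bin width, which is one reason a real aggregation function would need a more careful density estimate.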
