Ashutosh Hathidara

MirrorBench: An Extensible Framework to Evaluate User-Proxy Agents for Human-Likeness

Jan 13, 2026

Abstract: Large language models (LLMs) are increasingly used as human simulators, both for evaluating conversational systems and for generating fine-tuning data. However, naive "act-as-a-user" prompting often yields verbose, unrealistic utterances, underscoring the need for principled evaluation of so-called user proxy agents. We present MIRRORBENCH, a reproducible, extensible benchmarking framework that evaluates user proxies solely on their ability to produce human-like user utterances across diverse conversational tasks, explicitly decoupled from downstream task success. MIRRORBENCH features a modular execution engine with typed interfaces, metadata-driven registries, multi-backend support, caching, and robust observability. The system supports pluggable user proxies, datasets, tasks, and metrics, enabling researchers to evaluate arbitrary simulators under a uniform, variance-aware harness. We include three lexical-diversity metrics (MATTR, Yule's K, and HD-D) and three LLM-judge-based metrics (GTEval, Pairwise Indistinguishability, and Rubric-and-Reason). Across four open datasets, MIRRORBENCH yields variance-aware results and reveals systematic gaps between user proxies and real human users. The framework is open source and includes a simple command-line interface for running experiments, managing configurations and caching, and generating reports. The framework can be accessed at https://github.com/SAP/mirrorbench.
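To make two of the lexical-diversity metrics named above concrete, here is a minimal sketch of MATTR (moving-average type-token ratio) and Yule's K using their standard textbook definitions. It is not taken from the MirrorBench codebase: the function names, the whitespace tokenization, and the default window size of 50 are assumptions chosen for illustration.

```python
from collections import Counter


def mattr(tokens, window=50):
    """Moving-average type-token ratio (illustrative, not MirrorBench's code).

    Averages the type-token ratio over every window of `window`
    consecutive tokens. Falls back to the plain type-token ratio
    when the text is no longer than one window.
    """
    if not tokens:
        return 0.0
    if len(tokens) <= window:
        return len(set(tokens)) / len(tokens)
    ratios = [
        len(set(tokens[i:i + window])) / window
        for i in range(len(tokens) - window + 1)
    ]
    return sum(ratios) / len(ratios)


def yules_k(tokens):
    """Yule's K: 10^4 * (sum of squared type frequencies - N) / N^2.

    Higher values indicate more repetition (lower lexical diversity).
    """
    n = len(tokens)
    if n == 0:
        return 0.0
    freqs = Counter(tokens)
    sum_sq = sum(f * f for f in freqs.values())
    return 1e4 * (sum_sq - n) / (n * n)


if __name__ == "__main__":
    # Hypothetical utterance, tokenized naively on whitespace.
    utterance = "can you help me book a flight can you also book a hotel"
    toks = utterance.split()
    print(mattr(toks, window=5), yules_k(toks))
```

A text in which every token is distinct gets a MATTR of 1.0 and a Yule's K of 0, so the two metrics move in opposite directions as repetition increases.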

Mining Tweets to Predict Future Bitcoin Price

Dec 03, 2024

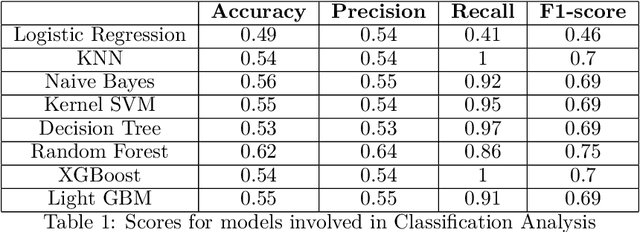

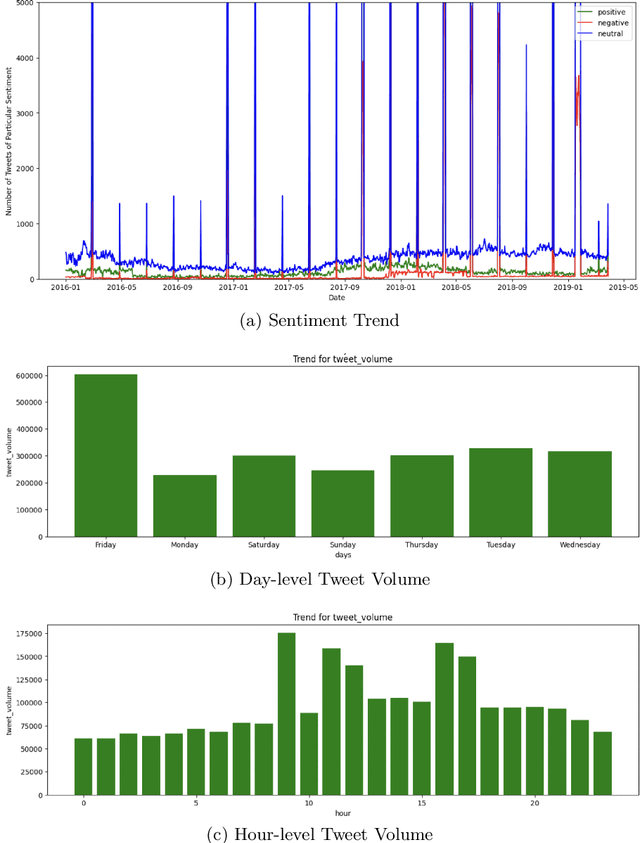

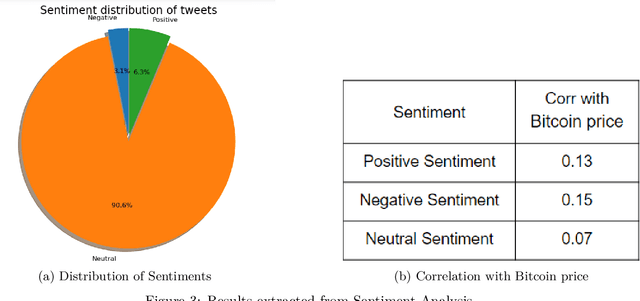

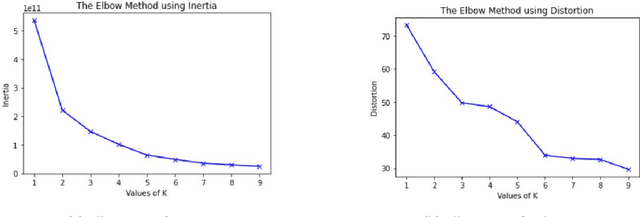

Abstract: Investor interest in Bitcoin has grown over the last decade, and with it the number of social media posts about cryptocurrency, especially Bitcoin. This project analyzes user tweet data in combination with Bitcoin price data to examine the relationship between price fluctuations and the conversation among millions of people on Twitter. The study also exploits this relationship between user tweets and Bitcoin prices to predict the future Bitcoin price. We apply novel techniques and methods to analyze the data and make price predictions.

Implementing An Artificial Quantum Perceptron

Dec 03, 2024

Abstract: A perceptron is a fundamental building block of a neural network. The flexibility and scalability of the perceptron make it ubiquitous in building intelligent systems. Studies have shown the efficacy of a single neuron in making intelligent decisions. Here, we examined and compared two perceptrons with distinct mechanisms, and developed a quantum version of one of those perceptrons. As a part of this modeling, we implemented the quantum circuit for an artificial perceptron, generated a dataset, and simulated the training. Through these experiments, we show that there is an exponential growth advantage and test different qubit versions. Our findings show that this quantum model of an individual perceptron can be used as a pattern classifier. For the second type of model, we explain how to design and simulate a spike-dependent quantum perceptron. Our code is available at https://github.com/ashutosh1919/quantum-perceptron

Neuro-Symbolic Sudoku Solver

Jul 02, 2023

Abstract: Deep Neural Networks have achieved great success in some of the complex tasks that humans can do with ease. These include image recognition/classification, natural language processing, game playing, etc. However, modern Neural Networks fail or perform poorly when trained on tasks that can be solved easily using backtracking and traditional algorithms. Therefore, we use the architecture of the Neural Logic Machine (NLM) and extend its functionality to solve a 9x9 game of Sudoku. To expand the application of NLMs, we generate a random grid of cells from a dataset of solved games and assign up to 10 new empty cells. The goal of the game is then to find a target value ranging from 1 to 9 and fill in the remaining empty cells while maintaining a valid configuration. In our study, we showcase an NLM which is capable of obtaining 100% accuracy for solving a Sudoku with empty cells ranging from 3 to 10. The purpose of this study is to demonstrate that NLMs can also be used for solving complex problems and games like Sudoku. We also compare the behaviour of NLMs with that of a backtracking algorithm by plotting their convergence times on the same problem. With this study we show that Neural Logic Machines can be trained using Reinforcement Learning on tasks at which traditional Deep Learning architectures fail. We also aim to highlight the importance of symbolic learning in explaining the systematicity of the hybrid NLM model.
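For readers unfamiliar with the backtracking baseline mentioned in the abstract, the following is a minimal sketch of a generic backtracking Sudoku solver. It is not the authors' implementation; the function names, the use of 0 for an empty cell, and the synthetic example grid are assumptions made for illustration.

```python
def is_valid(grid, row, col, value):
    """Return True if `value` can be placed at (row, col) without
    repeating in that row, column, or 3x3 box."""
    if any(grid[row][j] == value for j in range(9)):
        return False
    if any(grid[i][col] == value for i in range(9)):
        return False
    box_r, box_c = 3 * (row // 3), 3 * (col // 3)
    return all(
        grid[i][j] != value
        for i in range(box_r, box_r + 3)
        for j in range(box_c, box_c + 3)
    )


def solve(grid):
    """Fill empty cells (marked 0) in place; return True on success."""
    for row in range(9):
        for col in range(9):
            if grid[row][col] == 0:
                for value in range(1, 10):
                    if is_valid(grid, row, col, value):
                        grid[row][col] = value
                        if solve(grid):
                            return True
                        grid[row][col] = 0  # undo and try the next value
                return False  # no value fits here: backtrack
    return True  # no empty cells remain


if __name__ == "__main__":
    # Synthetic solved grid built from a shifting pattern (an assumption,
    # not data from the paper), with three cells emptied.
    solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)]
              for r in range(9)]
    puzzle = [row[:] for row in solved]
    for r, c in [(0, 0), (4, 4), (8, 8)]:
        puzzle[r][c] = 0
    print(solve(puzzle), puzzle == solved)
```

With only a handful of empty cells, as in the setting the abstract describes, the search explores very few branches, which makes it a natural reference point for comparing convergence time.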
