Alexey A. Novikov

Can Text-to-Image Generative Models Accurately Depict Age? A Comparative Study on Synthetic Portrait Generation and Age Estimation

Feb 05, 2025

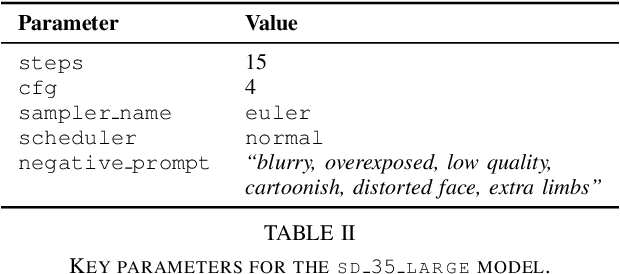

Abstract: Text-to-image generative models have shown remarkable progress in producing diverse and photorealistic outputs. In this paper, we present a comprehensive analysis of their effectiveness in creating synthetic portraits that accurately represent various demographic attributes, with a special focus on age, nationality, and gender. Our evaluation employs prompts specifying detailed profiles (e.g., "Photorealistic selfie photo of a 32-year-old Canadian male"), covering a broad spectrum of 212 nationalities, 30 distinct ages from 10 to 78, and balanced gender representation. We compare the generated images against ground truth age estimates from two established age estimation models to assess how faithfully age is depicted. Our findings reveal that although text-to-image models can consistently generate faces reflecting different identities, the accuracy with which they capture specific ages and do so across diverse demographic backgrounds remains highly variable. These results suggest that current synthetic data may be insufficiently reliable for high-stakes age-related tasks requiring robust precision, unless practitioners are prepared to invest in significant filtering and curation. Nevertheless, they may still be useful in less sensitive or exploratory applications, where absolute age precision is not critical.
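
The evaluation described in this abstract can be pictured as a prompt grid plus an error measure between the prompted age and the estimated age. The following is a minimal sketch under stated assumptions, not the author's pipeline: the function names, the short placeholder lists of ages and nationalities, and the choice of mean absolute error as the comparison metric are all hypothetical and do not come from the abstract.

```python
from itertools import product


def build_prompt(age: int, nationality: str, gender: str) -> str:
    """Fill the prompt template quoted in the abstract (hypothetical helper)."""
    # Use "an" before ages pronounced with a leading vowel sound (8, 11, 18, 80-89).
    article = "an" if str(age).startswith("8") or age in (11, 18) else "a"
    return f"Photorealistic selfie photo of {article} {age}-year-old {nationality} {gender}"


def mean_absolute_error(target_ages, estimated_ages):
    """Average absolute gap between prompted ages and estimated ages.

    Assumption: MAE is used here only as an illustrative metric.
    """
    if len(target_ages) != len(estimated_ages) or not target_ages:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(t - e) for t, e in zip(target_ages, estimated_ages))
    return total / len(target_ages)


# Placeholder values; the study itself covers 212 nationalities and 30 ages.
ages = [10, 32, 78]
nationalities = ["Canadian", "Japanese"]
genders = ["male", "female"]

prompts = [build_prompt(a, n, g) for a, n, g in product(ages, nationalities, genders)]
```

In such a setup, each prompt would be passed to a text-to-image model and each resulting image to an age estimator; the error is then aggregated per age, nationality, or gender to expose where depiction is least faithful.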

JAM: A Comprehensive Model for Age Estimation, Verification, and Comparability

Oct 05, 2024

Abstract: This paper introduces a comprehensive model for age estimation, verification, and comparability, intended to serve a wide range of applications. It employs advanced learning techniques to understand age distribution and uses confidence scores to create probabilistic age ranges, enhancing its ability to handle ambiguous cases. The model has been tested on both proprietary and public datasets and compared against one of the top-performing models in the field. Additionally, it has recently been evaluated by NIST as part of the FATE challenge, achieving top places in many categories.

Fully Convolutional Architectures for Multi-Class Segmentation in Chest Radiographs

Feb 13, 2018

Abstract: The success of deep convolutional neural networks on image classification and recognition tasks has led to new applications in very diversified contexts, including the field of medical imaging. In this paper we investigate and propose neural network architectures for automated multi-class segmentation of anatomical organs in chest radiographs (CXR), namely the lungs, clavicles and heart. We address several open challenges, including model overfitting, reducing the number of parameters and handling severely imbalanced data in CXR, by fusing recent concepts in convolutional networks and adapting them to the segmentation task in CXR. We demonstrate that our architecture, which combines delayed subsampling, exponential linear units, highly restrictive regularization and a large number of high-resolution low-level abstract features, outperforms state-of-the-art methods on all considered organs, as well as the human observer on lungs and heart. The models use a multi-class configuration with three target classes and are trained and tested on the publicly available JSRT database, consisting of 247 X-ray images whose ground-truth masks are available in the SCR database. Our best performing model, trained with the loss function based on the Dice coefficient, reached mean Jaccard overlap scores of 95.0% for lungs, 86.8% for clavicles and 88.2% for heart. This architecture outperformed the human observer results for lungs and heart.
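
The abstract mentions two overlap measures: the Dice coefficient (used in the loss) and the Jaccard overlap (used for reporting). As a rough illustration, the sketch below computes both on binary masks using their standard set-based definitions. It is not the authors' loss function, whose exact formulation the abstract does not give; the function names, the convention for empty masks, and the toy masks are assumptions made for this example.

```python
def _flatten(mask):
    """Flatten a 2D list of 0/1 values into a single list of booleans."""
    return [bool(v) for row in mask for v in row]


def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    p, t = _flatten(pred), _flatten(truth)
    intersection = sum(a and b for a, b in zip(p, t))
    total = sum(p) + sum(t)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def jaccard_index(pred, truth):
    """Jaccard = |A ∩ B| / |A ∪ B| for two binary masks of equal shape."""
    p, t = _flatten(pred), _flatten(truth)
    intersection = sum(a and b for a, b in zip(p, t))
    union = sum(a or b for a, b in zip(p, t))
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union


# Toy 2x3 masks, purely illustrative.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]

dice = dice_coefficient(predicted, reference)   # 2*2 / (3+3)
jaccard = jaccard_index(predicted, reference)   # 2 / 4
```

The two measures are monotonically related by J = D / (2 - D), so a model ranked higher by one is ranked higher by the other on a single mask pair. In a multi-class setting like the one described, such a score would typically be computed per organ class.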
