Abdurrahman Gumus

AutoReason: Automatic Few-Shot Reasoning Decomposition

Dec 09, 2024

Abstract:Chain of Thought (CoT) was introduced in recent research as a method for improving step-by-step reasoning in Large Language Models. However, CoT has limitations, such as its need for hand-crafted few-shot exemplar prompts and its inability to adjust itself to different queries. In this work, we propose a system to automatically generate rationales using CoT. Our method improves multi-step implicit reasoning capabilities by decomposing the implicit query into several explicit questions. This provides interpretability for the model, improving reasoning in weaker LLMs. We test our approach with two Q&A datasets: StrategyQA and HotpotQA. We show an increase in accuracy with both, especially on StrategyQA. To facilitate further research in this field, the complete source code for this study has been made publicly available on GitHub: https://github.com/miralab-ai/autoreason.
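
The decomposition idea in the abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation (their code is in the linked repository); the exemplar text, the prompt wording, and the `ask_model` callable are all hypothetical placeholders chosen for illustration. The sketch first asks a model to turn an implicit query into explicit sub-questions, then feeds those sub-questions back as reasoning steps for the final answer.

```python
from typing import Callable, List, Tuple

# Hypothetical few-shot exemplar: the wording is illustrative only and is
# not taken from the paper or its repository.
EXEMPLARS: List[Tuple[str, List[str]]] = [
    (
        "Could a sloth outrun a cheetah?",
        [
            "How fast can a sloth move?",
            "How fast can a cheetah run?",
            "Is the first speed greater than the second?",
        ],
    ),
]


def build_decomposition_prompt(query: str) -> str:
    """Build a few-shot prompt asking for explicit sub-questions."""
    parts = ["Decompose the question into explicit sub-questions."]
    for question, sub_questions in EXEMPLARS:
        parts.append(f"Question: {question}")
        parts.extend(f"- {s}" for s in sub_questions)
    parts.append(f"Question: {query}")
    return "\n".join(parts)


def parse_sub_questions(completion: str) -> List[str]:
    """Keep only the lines formatted as '- sub-question'."""
    return [
        line[2:].strip()
        for line in completion.splitlines()
        if line.startswith("- ")
    ]


def build_answer_prompt(query: str, sub_questions: List[str]) -> str:
    """Build the final prompt with the sub-questions as numbered steps."""
    steps = "\n".join(f"{i}. {s}" for i, s in enumerate(sub_questions, 1))
    return f"Question: {query}\nReason through these steps:\n{steps}\nAnswer:"


def auto_reason(query: str, ask_model: Callable[[str], str]) -> str:
    """Two-pass flow: decompose the query, then answer using the steps.

    `ask_model` is an assumed callable mapping a prompt to a completion.
    """
    completion = ask_model(build_decomposition_prompt(query))
    sub_questions = parse_sub_questions(completion)
    return ask_model(build_answer_prompt(query, sub_questions))
```

Passing the model as a callable keeps the sketch independent of any particular LLM client; a weaker model could be used for the second call and a stronger one for the first, if that split is desired.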

Real-Time Superficial Vein Imaging System for Observing Abnormalities on Vascular Structures

Apr 29, 2023

Abstract:Circulatory system abnormalities might be an indicator of diseases or tissue damage. Early detection of vascular abnormalities might play an important role during treatment and also raise the patient's awareness. Current detection methods for vascular imaging are high-cost, invasive, and mostly radiation-based. In this study, a low-cost and portable microcomputer-based tool has been developed as a near-infrared (NIR) superficial vascular imaging device. The device uses NIR light-emitting diode (LED) light at 850 nm along with other electronic and optical components. It operates as a non-contact and safe infrared (IR) imaging method in real-time. Image and video analysis are carried out using OpenCV (Open-Source Computer Vision), a library of programming functions mainly used in computer vision. Various tests were carried out to optimize the imaging system and set up a suitable external environment. To test the performance of the device, images taken from three diabetic volunteers, who are expected to have abnormalities in the vascular structure due to possible deformation caused by high blood glucose levels, were compared with images taken from two non-diabetic volunteers. As a result, tortuosity was successfully observed in the superficial vascular structures, although the results need to be interpreted by medical experts in the field to understand the underlying causes. Although this is an engineering study and is not intended to diagnose any disease, the system developed here might assist healthcare personnel in early diagnosis and treatment follow-up for vascular structures and may enable further opportunities.

Deep reproductive feature generation framework for the diagnosis of COVID-19 and viral pneumonia using chest X-ray images

Apr 20, 2023

Abstract:The rapid and accurate detection of COVID-19 cases is critical for timely treatment and preventing the spread of the disease. In this study, a two-stage feature extraction framework using eight state-of-the-art pre-trained deep Convolutional Neural Networks (CNNs) and an autoencoder is proposed to determine the health conditions of patients (COVID-19, Normal, Viral Pneumonia) based on chest X-rays. The X-ray scans are divided into four equally sized sections and analyzed by deep pre-trained CNNs. Subsequently, an autoencoder with three hidden layers is trained to extract reproductive features from the concatenated output of the CNNs. To evaluate the performance of the proposed framework, three different classifiers are used: single-layer perceptron (SLP), multi-layer perceptron (MLP), and support vector machine (SVM). Furthermore, the deep CNN architectures are used to create benchmark models and trained on the same dataset for comparison. The proposed framework outperforms other frameworks with pre-trained feature extractors in binary classification and shows competitive results in three-class classification. The proposed methodology is task-independent and suitable for addressing various problems. The results show that the discriminative features are a subset of the reproductive features, suggesting that extracting task-independent features is superior to extracting only task-based features. The flexibility and task-independence of the reproductive features make the conceptive information approach more favorable. The proposed methodology is novel and shows promising results for analyzing medical image data.
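
The sectioning and concatenation steps described in the abstract can be sketched in plain Python. The abstract does not say how the four equally sized sections are laid out, so the 2x2 quadrant layout here is an assumption, and `mean_feature` is a hypothetical stand-in for a pre-trained CNN feature extractor; the autoencoder stage is not shown.

```python
from typing import List, Sequence

Image = List[List[float]]


def split_into_quadrants(image: Sequence[Sequence[float]]) -> List[Image]:
    """Split a 2D image into four equal sections (assumed 2x2 layout).

    Order: top-left, top-right, bottom-left, bottom-right.
    """
    if not image or not image[0]:
        raise ValueError("image must be non-empty")
    height, width = len(image), len(image[0])
    if height % 2 or width % 2:
        raise ValueError("height and width must be even for equal sections")
    half_h, half_w = height // 2, width // 2
    return [
        [list(row[c:c + half_w]) for row in image[r:r + half_h]]
        for r in (0, half_h)
        for c in (0, half_w)
    ]


def mean_feature(section: Image) -> List[float]:
    """Hypothetical stand-in for a CNN: one feature, the mean intensity."""
    values = [v for row in section for v in row]
    return [sum(values) / len(values)]


def concatenate_features(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Join per-section feature vectors into one flat vector."""
    return [v for vec in vectors for v in vec]


# Usage: a 4x4 toy "scan" with pixel values 0..15.
scan = [[float(4 * r + c) for c in range(4)] for r in range(4)]
features = concatenate_features(
    [mean_feature(section) for section in split_into_quadrants(scan)]
)
print(features)  # [2.5, 4.5, 10.5, 12.5]
```

In the framework described above, the concatenated vector would be the input to the autoencoder rather than the final representation.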

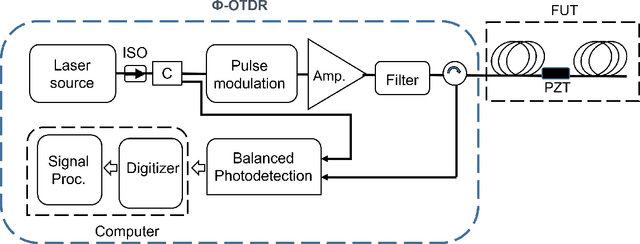

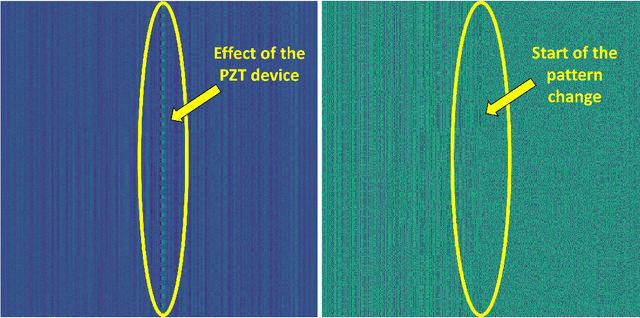

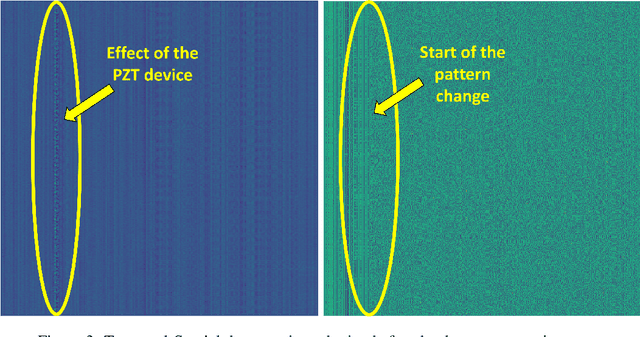

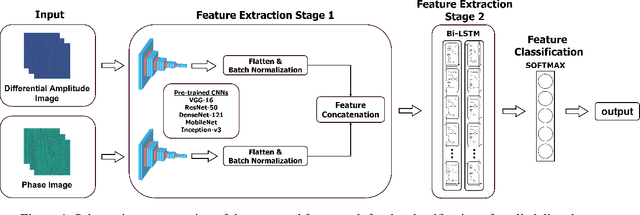

A Novel Approach For Analysis of Distributed Acoustic Sensing System Based on Deep Transfer Learning

Jun 24, 2022

Abstract:Distributed acoustic sensors (DAS) are effective devices that are widely used in many application areas for recording signals of various events with very high spatial resolution along the optical fiber. To detect and recognize the recorded events properly, advanced signal processing algorithms with high computational demands are crucial. Convolutional neural networks (CNNs) are highly capable tools for extracting spatial information and are very suitable for event recognition applications in DAS. Long short-term memory (LSTM) is an effective instrument for processing sequential data. In this study, we proposed a multi-input multi-output, two-stage feature extraction methodology that combines the capabilities of these neural network architectures with transfer learning to classify vibrations applied to an optical fiber by a piezo transducer. First, we extracted the differential amplitude and phase information from the Phase-OTDR recordings and stored them in a temporal-spatial data matrix. Then, we used a state-of-the-art pre-trained CNN without dense layers as a feature extractor in the first stage. In the second stage, we used LSTMs to further analyze the features extracted by the CNN. Finally, we used a dense layer to classify the extracted features. To observe the effect of the utilized CNN architecture, we tested our model with five state-of-the-art pre-trained models (VGG-16, ResNet-50, DenseNet-121, MobileNet and Inception-v3). The results show that using the VGG-16 architecture in our framework obtained 100% classification accuracy in 50 trainings and gave the best results on our Phase-OTDR dataset. The outcomes of this study indicate that pre-trained CNNs combined with LSTM are very suitable for the analysis of differential amplitude and phase information represented in a temporal-spatial data matrix, which is promising for event recognition operations in DAS applications.
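
The first step, building differential amplitude and phase matrices, can be sketched as follows. The abstract does not define how the differentials are computed, so this sketch assumes complex-valued traces and takes the difference between consecutive traces at each fiber position; the function name and the input layout (rows as time, columns as position) are illustrative choices, not the authors' code.

```python
import cmath
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def differential_matrices(
    traces: Sequence[Sequence[complex]],
) -> Tuple[Matrix, Matrix]:
    """Return (differential amplitude, differential phase) matrices.

    Assumes traces[t][x] is the complex backscatter sample at time index t
    and fiber position x. Each output row compares trace t+1 with trace t,
    so the outputs have one row fewer than the input.
    """
    if len(traces) < 2:
        raise ValueError("need at least two traces")
    amplitude: Matrix = []
    phase: Matrix = []
    for prev, curr in zip(traces, traces[1:]):
        if len(prev) != len(curr):
            raise ValueError("all traces must have the same length")
        amplitude.append([abs(c) - abs(p) for p, c in zip(prev, curr)])
        # The angle of c * conj(p) is the phase change, wrapped to [-pi, pi].
        phase.append(
            [cmath.phase(c * p.conjugate()) for p, c in zip(prev, curr)]
        )
    return amplitude, phase
```

Computing the phase change from the product with the conjugate avoids a separate unwrapping step for small changes between consecutive traces. The two matrices could then be treated as image-like inputs to the CNN stage.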
