Using Machine Learning to Augment Coarse-Grid Computational Fluid Dynamics Simulations


Oct 03, 2020

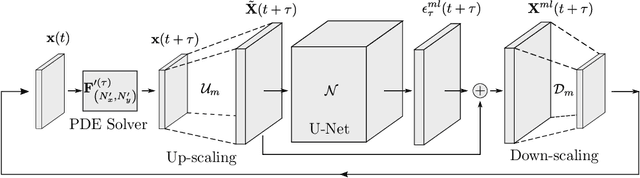

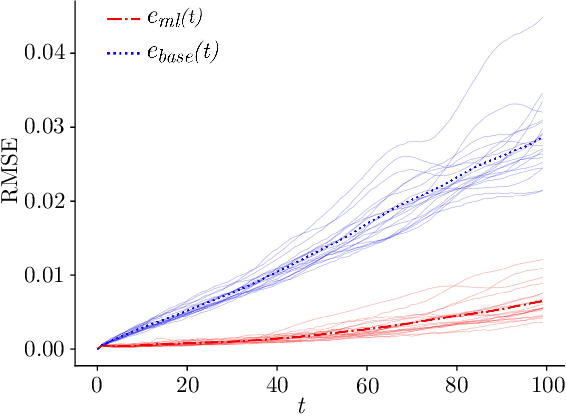

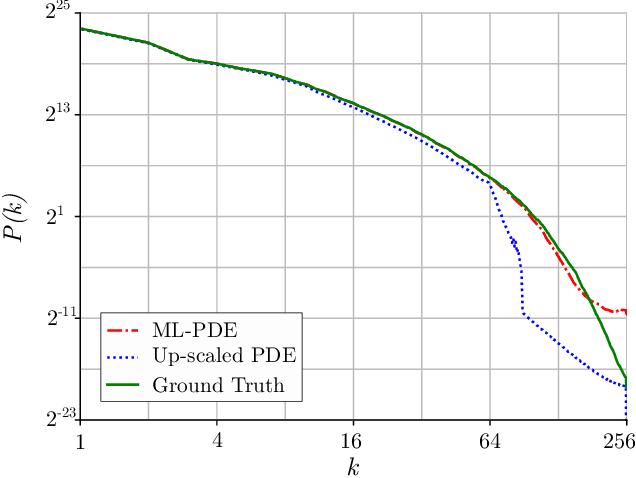

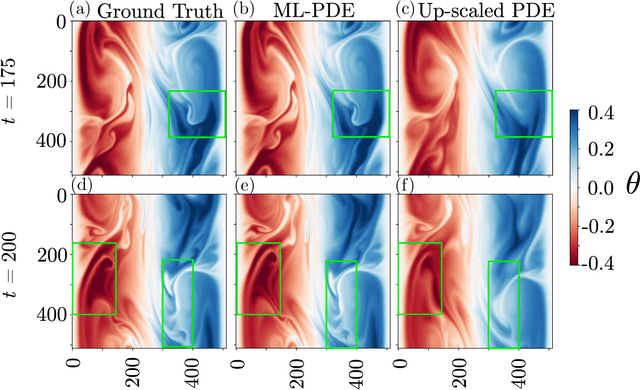

Simulation of turbulent flows at high Reynolds number is a computationally challenging task relevant to a large number of engineering and scientific applications in diverse fields such as climate science, aerodynamics, and combustion. Turbulent flows are typically modeled by the Navier-Stokes equations. Direct Numerical Simulation (DNS) of the Navier-Stokes equations with sufficient numerical resolution to capture all the relevant scales of the turbulent motions can be prohibitively expensive, while simulation at lower resolution on a coarse grid introduces significant errors. We introduce a machine learning (ML) technique based on a deep neural network architecture that corrects the numerical errors induced by a coarse-grid simulation of turbulent flows at high Reynolds numbers, while simultaneously recovering an estimate of the high-resolution fields. Our proposed simulation strategy is a hybrid ML-PDE solver that can obtain a meaningful high-resolution solution trajectory while solving the system PDE at a lower resolution. The approach has the potential to dramatically reduce the expense of turbulent flow simulations. As a proof of concept, we demonstrate our ML-PDE strategy on a two-dimensional turbulent (Rayleigh number $Ra=10^9$) Rayleigh-Bénard Convection (RBC) problem.
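The hybrid ML-PDE idea described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: a 1D periodic diffusion equation stands in for the Navier-Stokes/RBC solver, plain linear interpolation stands in for the trained deep network, and the function names (`coarse_pde_step`, `ml_correct_and_upsample`, `restrict`, `hybrid_rollout`) are hypothetical. The sketch only shows one plausible control flow: advance the PDE on the coarse grid, map the coarse state to a high-resolution estimate, and feed the restricted result back into the coarse solver.

```python
import numpy as np

def coarse_pde_step(u, dt, dx, kappa=1.0):
    """One explicit Euler step of 1D periodic diffusion.
    Stand-in (assumption) for the coarse-grid PDE solver."""
    lap = (np.roll(u, -1) - 2.0 * u + np.roll(u, 1)) / dx**2
    return u + dt * kappa * lap

def ml_correct_and_upsample(u_coarse, factor):
    """Placeholder (assumption) for the trained network that corrects the
    coarse state and estimates the high-resolution field.
    Here it is just periodic linear interpolation."""
    n = u_coarse.size
    x_coarse = np.arange(n)
    x_fine = np.arange(n * factor) / factor
    return np.interp(x_fine, x_coarse, u_coarse, period=n)

def restrict(u_fine, factor):
    """Map the high-resolution estimate back to the coarse grid by subsampling."""
    return u_fine[::factor]

def hybrid_rollout(u0_coarse, n_steps, dt, dx, factor):
    """Alternate coarse PDE steps with the (placeholder) ML correction and
    collect the high-resolution estimates along the trajectory."""
    u = u0_coarse.copy()
    fine_trajectory = []
    for _ in range(n_steps):
        u = coarse_pde_step(u, dt, dx)                 # cheap coarse-grid solve
        u_fine = ml_correct_and_upsample(u, factor)    # high-resolution estimate
        fine_trajectory.append(u_fine)
        u = restrict(u_fine, factor)                   # corrected coarse state
    return np.stack(fine_trajectory)

# Usage: 32 coarse points, 4x finer output grid, stable explicit time step.
n_coarse, factor = 32, 4
dx = 2.0 * np.pi / n_coarse
dt = 0.2 * dx**2
x = dx * np.arange(n_coarse)
traj = hybrid_rollout(np.sin(x), n_steps=10, dt=dt, dx=dx, factor=factor)
print(traj.shape)
```

In a real setting the interpolation placeholder would be replaced by a network trained against high-resolution reference data; the loop structure shown here is only one way such a solver could be organized.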
