Understand Watchdogs: Discover How Game Bot Get Discovered


Nov 26, 2020

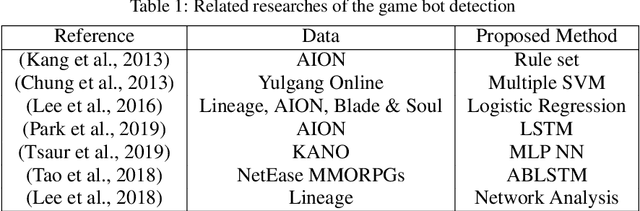

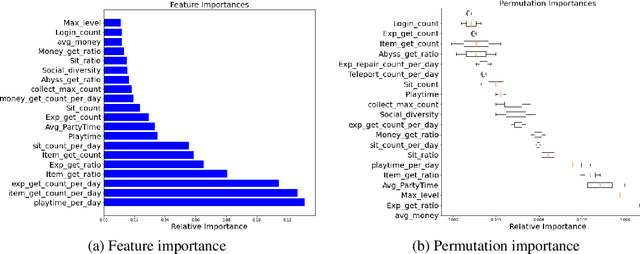

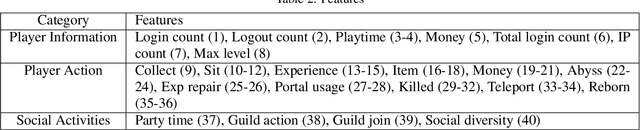

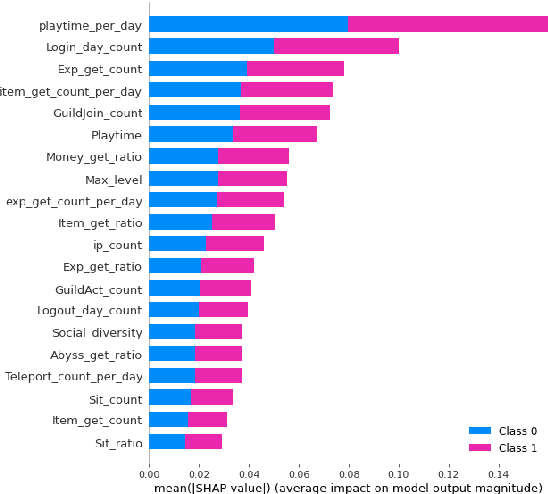

The game industry has long been troubled by malicious activity carried out with game bots. Game bots disturb other players and disrupt the games' ecosystems. For these reasons, the industry has put considerable effort into detecting game bots among players' characters using learning-based detection. However, one problem with these detection methods is that they do not provide rational explanations for their decisions. To address this problem, we investigate the explainability of game bot detection. We develop an XAI model using a dataset from the Korean MMORPG AION, which includes game logs of both human players and game bots. We apply multiple classification models to the dataset and analyze them with interpretable models. This yields explanations of the game bots' behavior, and we evaluate the truthfulness of those explanations. In addition, interpretability helps minimize false detections, which impose unfair restrictions on human players.
