Uncertainty-based Modulation for Lifelong Learning


Jan 27, 2020

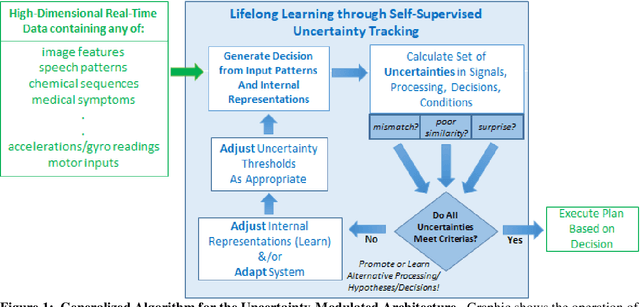

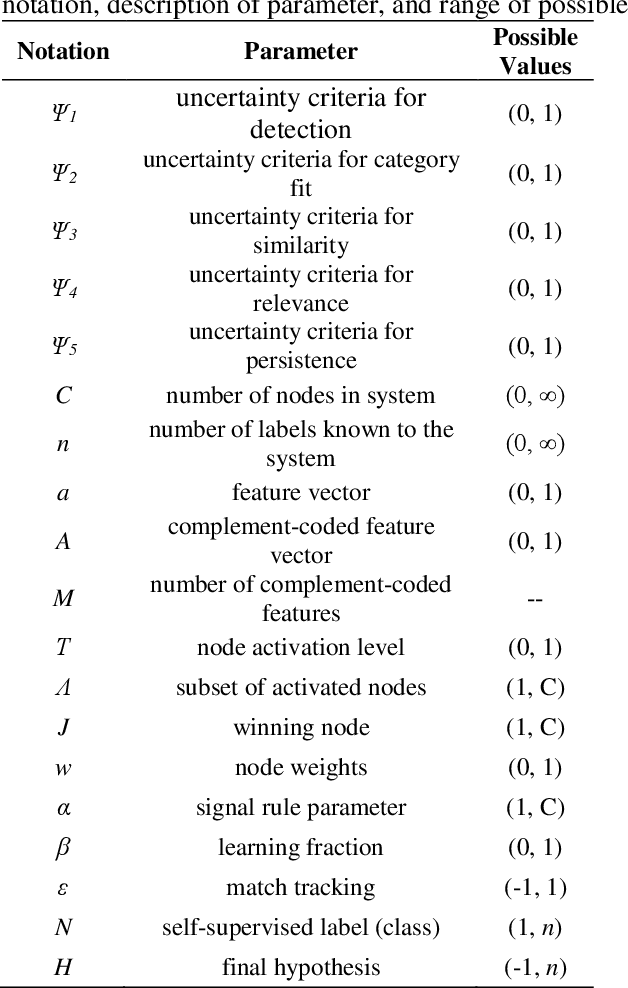

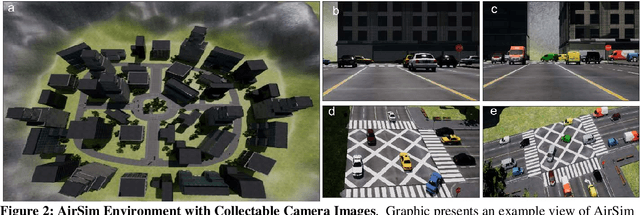

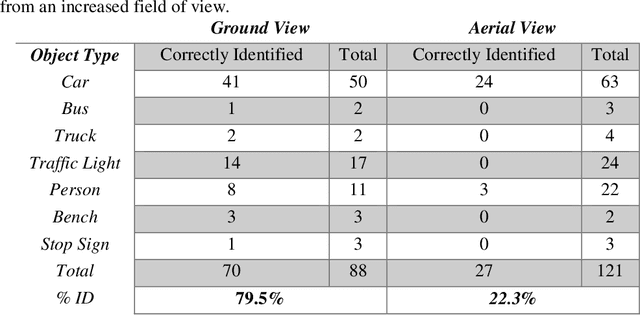

Developing machine learning algorithms for intelligent agents capable of continuous, lifelong learning is a critical objective for systems deployed in dynamic, real-world environments. Here we present an algorithm inspired by neuromodulatory mechanisms in the human brain that integrates and expands upon Stephen Grossberg's ground-breaking Adaptive Resonance Theory proposals. Specifically, it builds on the concept of uncertainty and employs a series of neuromodulatory mechanisms to enable continuous learning, including self-supervised and one-shot learning. Algorithm components were evaluated in a series of benchmark experiments that demonstrate stable learning without catastrophic forgetting. We also demonstrate the critical role of developing these systems in a closed-loop manner, where the environment and the agent's behaviors constrain and guide the learning process. To this end, we integrated the algorithm into an embodied simulated drone agent. The experiments show that the algorithm can continuously learn new tasks and adapt to changed conditions with high classification accuracy (greater than 94 percent) in a virtual environment, without catastrophic forgetting. The algorithm accepts high-dimensional inputs from any state-of-the-art detection and feature extraction algorithms, making it a flexible addition to existing systems. We also describe future development efforts focused on imbuing the algorithm with mechanisms to seek out new knowledge and to employ a broader range of neuromodulatory processes.
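To make the general idea concrete, the following is a minimal sketch of an ART-style prototype memory in which a poor match (high uncertainty) triggers one-shot creation of a new category rather than overwriting an old one. It is not the algorithm from the paper: the class name `PrototypeMemory`, the cosine-similarity match, the `vigilance` threshold, and the `learning_rate` update are all illustrative assumptions, and the neuromodulatory mechanisms described above are not modeled.

```python
import numpy as np


class PrototypeMemory:
    """Toy ART-style memory (hypothetical, not the paper's implementation)."""

    def __init__(self, vigilance=0.8, learning_rate=0.2):
        self.vigilance = vigilance          # assumed match threshold in [0, 1]
        self.learning_rate = learning_rate  # assumed prototype update rate
        self.prototypes = []                # unit-length feature vectors
        self.labels = []                    # one label per prototype

    @staticmethod
    def _unit(x):
        x = np.asarray(x, dtype=float)
        return x / (np.linalg.norm(x) + 1e-12)

    def predict(self, x):
        """Return (label, uncertainty), with uncertainty = 1 - best cosine match."""
        if not self.prototypes:
            return None, 1.0
        sims = np.stack(self.prototypes) @ self._unit(x)
        best = int(np.argmax(sims))
        return self.labels[best], float(1.0 - sims[best])

    def learn(self, x, label):
        """Refine the best same-label prototype, or store x as a new one."""
        x = self._unit(x)
        candidates = [i for i, l in enumerate(self.labels) if l == label]
        if candidates:
            sims = [float(self.prototypes[i] @ x) for i in candidates]
            best = candidates[int(np.argmax(sims))]
            if max(sims) >= self.vigilance:
                # Good match: nudge only this prototype toward the input.
                updated = self.prototypes[best] + self.learning_rate * (
                    x - self.prototypes[best]
                )
                self.prototypes[best] = self._unit(updated)
                return best
        # Poor match (high uncertainty): one-shot learning of a new prototype.
        self.prototypes.append(x)
        self.labels.append(label)
        return len(self.prototypes) - 1


memory = PrototypeMemory(vigilance=0.8)
memory.learn([1.0, 0.0], "a")
memory.learn([0.0, 1.0], "b")  # stored separately; the "a" prototype is untouched
label, uncertainty = memory.predict([0.9, 0.1])
```

Because each update touches at most one prototype, learning a new category in this sketch cannot overwrite what was stored for earlier ones, which is the property the abstract refers to as avoiding catastrophic forgetting. The inputs here are toy 2-D vectors; in practice they would be feature vectors from an upstream extractor.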
