Theoretical Modeling of Communication Dynamics

Jun 09, 2021

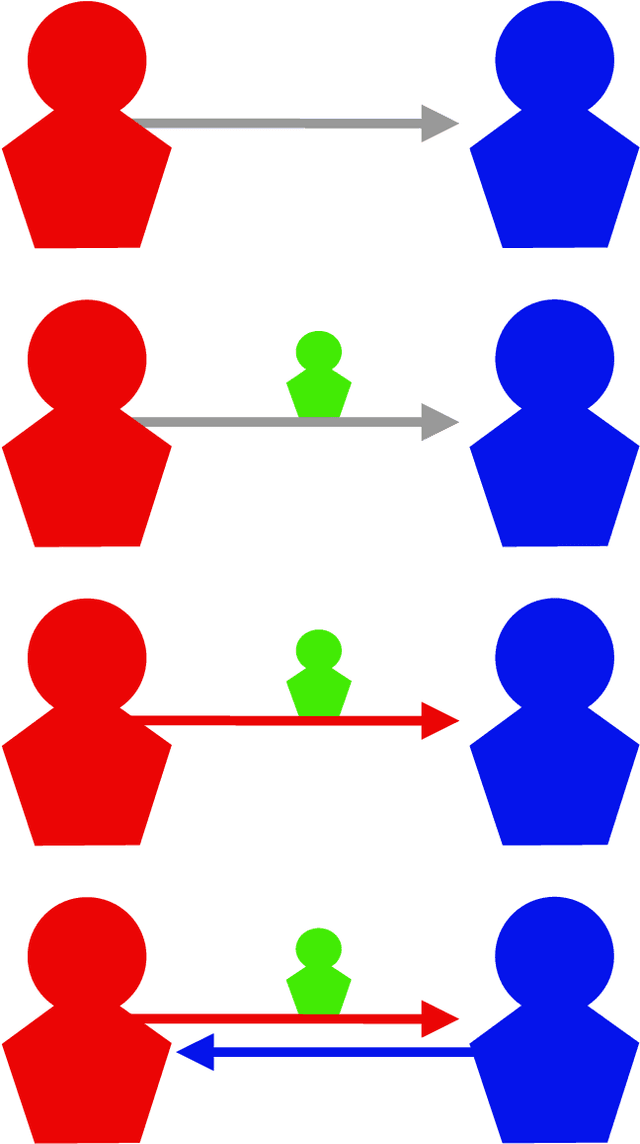

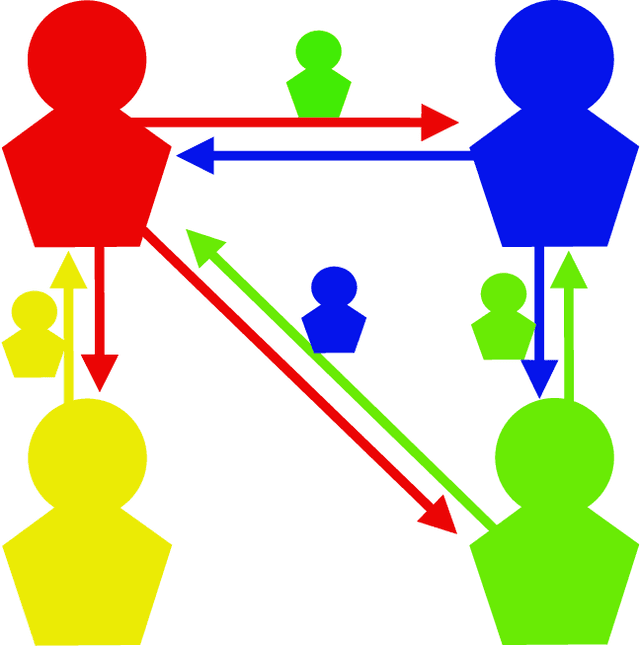

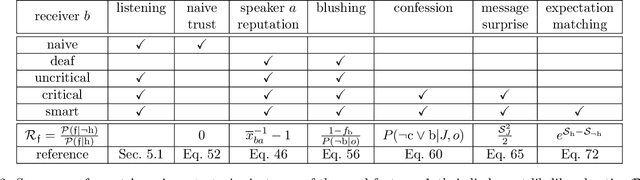

Communication is a cornerstone of social interactions, whether with humans or with artificial intelligence (AI). Yet it can be harmful, depending on the honesty of the exchanged information. To study this, an agent-based sociological simulation framework, the reputation game, is presented. It illustrates the impact of different communication strategies on the agents' reputations. The game focuses on the trustworthiness of the participating agents, that is, their honesty as perceived by others. In the game, each agent exchanges statements with the others about their own and each other's honesty, which lets their judgments evolve. Various sender and receiver strategies are studied, such as sycophancy, egocentricity, pathological lying, and aggressiveness for senders, as well as awareness and lack thereof for receivers. Minimalist malicious strategies are identified, such as being manipulative, dominant, or destructive, which significantly increase reputation at others' expense. Phenomena such as echo chambers, self-deception, deception symbiosis, clique formation, and freezing of group opinions emerge from the dynamics. This indicates that the reputation game can be used to study complex group phenomena, to test behavioral hypotheses, and to analyze AI-influenced social media. With refined rules, it may help to understand social interactions and to safeguard the design of non-abusive AI systems.
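The exchange of honesty statements described in the abstract can be illustrated with a toy sketch. This is not the paper's model. The Beta-style pseudo-count beliefs, the trust-weighted update, the rule that liars praise themselves and disparage others, and the names `mean` and `simulate` are all assumptions made for illustration only.

```python
import random


def mean(belief):
    """Expected honesty under a [honest_count, lie_count] pseudo-count belief."""
    honest, lies = belief
    return honest / (honest + lies)


def simulate(true_honesty, rounds=2000, seed=0):
    """Toy reputation dynamics (illustrative assumption, not the paper's rules).

    true_honesty[i] is the probability that agent i speaks honestly.
    Returns each agent's reputation: the average honesty that the
    other agents ascribe to it after all rounds. Needs at least 2 agents.
    """
    rng = random.Random(seed)
    n = len(true_honesty)
    # beliefs[a][b] holds agent a's pseudo-counts about agent b's honesty.
    beliefs = [[[1.0, 1.0] for _ in range(n)] for _ in range(n)]

    for _ in range(rounds):
        sender, receiver = rng.sample(range(n), 2)  # two distinct agents
        topic = rng.randrange(n)                    # whom the statement is about

        if rng.random() < true_honesty[sender]:
            # Honest statement: report the sender's actual belief.
            statement = mean(beliefs[sender][topic])
        else:
            # Assumed toy lie: praise oneself, disparage everyone else.
            statement = 1.0 if topic == sender else 0.0

        # Assumed update: the receiver weights the statement by how
        # honest it currently believes the sender to be.
        trust = mean(beliefs[receiver][sender])
        beliefs[receiver][topic][0] += trust * statement
        beliefs[receiver][topic][1] += trust * (1.0 - statement)

    return [
        sum(mean(beliefs[a][b]) for a in range(n) if a != b) / (n - 1)
        for b in range(n)
    ]


if __name__ == "__main__":
    # Three agents: mostly honest, mixed, mostly dishonest.
    print(simulate([0.9, 0.5, 0.1]))
```

Comparing the returned reputations with `true_honesty` shows how far perceived honesty can drift from actual honesty under these assumed rules; sender and receiver strategies like those named in the abstract would replace the lie rule and the trust-weighted update.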
