The Viterbi process, decay-convexity and parallelized maximum a-posteriori estimation


Oct 11, 2018

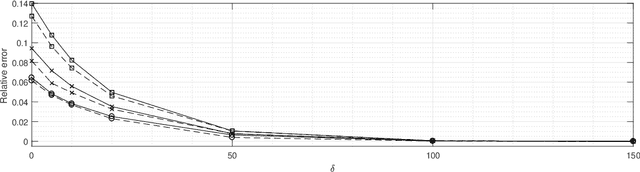

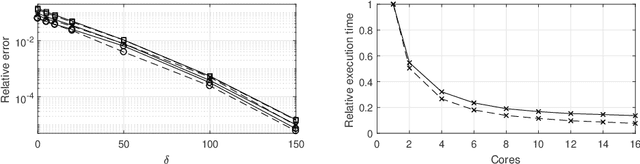

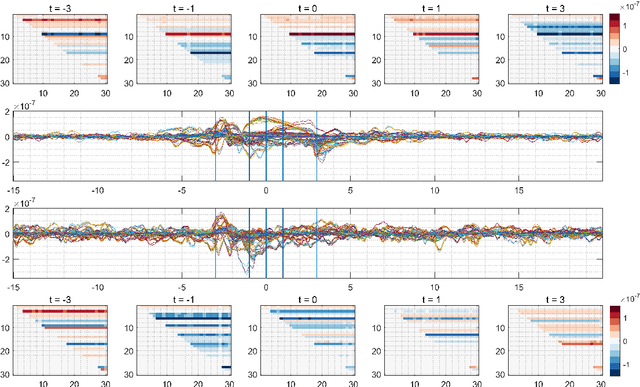

The Viterbi process is the limiting maximum a-posteriori estimate of the unobserved path in a hidden Markov model as the length of the time horizon grows. The existence of such a process suggests that approximate estimation using optimization algorithms that process data segments in parallel may be accurate. For models on state-space $\mathbb{R}^{d}$ satisfying a new "decay-convexity" condition, we approach the existence of the Viterbi process through fixed points of ordinary differential equations in a certain infinite-dimensional Hilbert space. Quantitative bounds on the distance to the Viterbi process show that approximate estimation via parallelization can indeed be accurate and scalable to high-dimensional problems, because the rate of convergence to the Viterbi process does not necessarily depend on $d$.
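To make the idea of segment-wise estimation concrete, here is a minimal sketch and not the paper's algorithm. It assumes a scalar linear-Gaussian model, $x_t = a x_{t-1} + \text{noise}$ and $y_t = x_t + \text{noise}$, chosen only because its maximum a-posteriori path reduces to a single linear solve. The function names `map_path` and `segmented_map_path`, the `buffer` overlap, and all parameter values are hypothetical choices made for illustration.

```python
import numpy as np

def map_path(y, a=0.9, q=1.0, r=1.0):
    """MAP path for x_t = a*x_{t-1} + N(0, q), y_t = x_t + N(0, r),
    with a flat prior on x_0 (illustrative assumption)."""
    n = len(y)
    idx = np.arange(n)
    # Hessian of the negative log posterior; tridiagonal, stored dense for clarity.
    H = np.zeros((n, n))
    H[idx, idx] = 1.0 / r                   # observation terms
    H[idx[1:], idx[1:]] += 1.0 / q          # transition terms, x_t side
    H[idx[:-1], idx[:-1]] += a * a / q      # transition terms, x_{t-1} side
    H[idx[1:], idx[:-1]] -= a / q
    H[idx[:-1], idx[1:]] -= a / q
    # Setting the gradient to zero gives H x = y / r.
    return np.linalg.solve(H, y / r)

def segmented_map_path(y, n_blocks, buffer, **kw):
    """Estimate each block from a window padded by `buffer` points per side.
    The windows are independent, so the loop could be run in parallel."""
    n = len(y)
    edges = np.linspace(0, n, n_blocks + 1).astype(int)
    out = np.empty(n)
    for lo, hi in zip(edges[:-1], edges[1:]):
        w_lo, w_hi = max(0, lo - buffer), min(n, hi + buffer)
        local = map_path(y[w_lo:w_hi], **kw)
        out[lo:hi] = local[lo - w_lo:hi - w_lo]  # keep only the block interior
    return out

# Simulate data from the assumed model and compare against the full-data MAP path.
rng = np.random.default_rng(0)
n = 400
x = np.zeros(n)
for t in range(1, n):
    x[t] = 0.9 * x[t - 1] + rng.normal()
y = x + rng.normal(size=n)

full = map_path(y)
for b in (0, 5, 20):
    err = np.max(np.abs(segmented_map_path(y, n_blocks=8, buffer=b) - full))
    print(f"buffer={b:2d}  max error={err:.2e}")
```

In this toy model, the gap between the segment-wise estimate and the full-data estimate should shrink quickly as the buffer grows, because the influence of distant observations on the MAP path decays geometrically. That is the kind of behaviour the abstract's quantitative bounds describe for the general setting.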
