The Principle of Uncertain Maximum Entropy


May 17, 2023

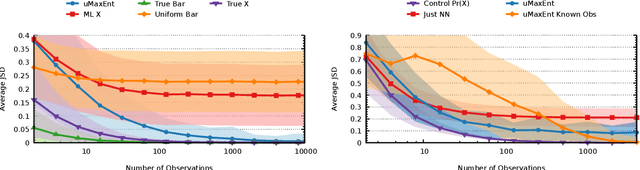

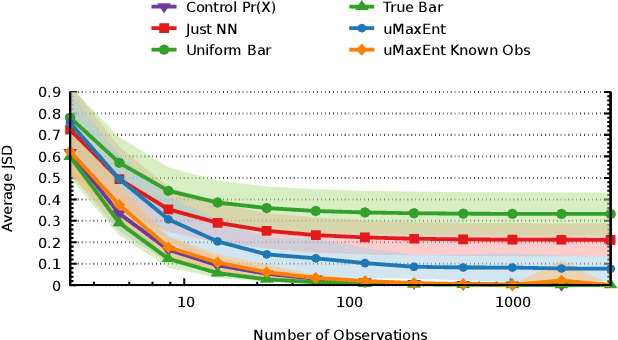

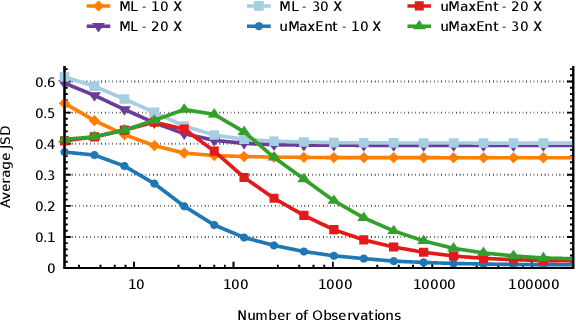

The principle of maximum entropy, introduced by Jaynes in information theory, has contributed to advances in domains such as statistical mechanics, machine learning, and ecology. Its solutions have helped researchers turn empirical observations into unbiased models and have deepened the understanding of complex systems and phenomena. However, when the model elements are not directly observable, for example because of noise or ocular occlusion, standard maximum entropy approaches may fail because they cannot match the feature constraints. Here we present the Principle of Uncertain Maximum Entropy, a method that encodes all available information despite arbitrarily noisy observations and surpasses the accuracy of some ad hoc methods. Additionally, we use the output of a black-box machine learning model as input to an uncertain maximum entropy model, yielding a novel approach for scenarios where the observation function is unavailable. Previous remedies either relaxed the feature constraints to account for observation error, assuming well-characterized errors such as zero-mean Gaussian noise, or simply selected the most likely model element given an observation. We anticipate that our principle will find broad application in diverse fields because it generalizes the traditional maximum entropy method to make use of uncertain observations.
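For readers unfamiliar with the feature constraints mentioned above, the following is a minimal sketch of the classical (not the uncertain) maximum entropy principle on a small discrete problem. It is not the paper's method or code. The function name `maxent_fit`, the learning rate, the step count, and the die example with a target mean of 4.5 are illustrative assumptions. The sketch fits the exponential-family form `p(x) ∝ exp(λ · φ(x))` by gradient ascent on the dual until the model's expected features match the target.

```python
import math

def maxent_fit(features, target, lr=0.1, steps=2000):
    """Classical maximum entropy on a finite set (hypothetical helper).

    features: list of feature vectors, one per model element x.
    target:   empirical feature expectations the model must match.
    Returns (p, lam) where p[x] is proportional to exp(lam . features[x]).
    """
    n_feat = len(target)
    lam = [0.0] * n_feat  # lam = 0 is the uniform distribution
    p = []
    for _ in range(steps):
        # Unnormalized log-probabilities; subtract the max for stability.
        scores = [sum(l * f for l, f in zip(lam, fx)) for fx in features]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        z = sum(weights)
        p = [w / z for w in weights]
        # Feature expectations under the current model.
        expected = [
            sum(p[i] * features[i][k] for i in range(len(features)))
            for k in range(n_feat)
        ]
        # Dual gradient step: move lam toward matching the constraints.
        lam = [l + lr * (t - e) for l, t, e in zip(lam, target, expected)]
    return p, lam

# Assumed example: a six-sided die whose observed mean face value is 4.5.
faces = [1, 2, 3, 4, 5, 6]
p, lam = maxent_fit([[float(f)] for f in faces], [4.5])
mean = sum(f * q for f, q in zip(faces, p))
```

The `target` vector here presumes the model elements were observed directly. With noisy or occluded observations that empirical expectation is not available, which is the situation the abstract says standard approaches cannot handle.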
