T-HITL Effectively Addresses Problematic Associations in Image Generation and Maintains Overall Visual Quality

Paper and Code

Feb 27, 2024

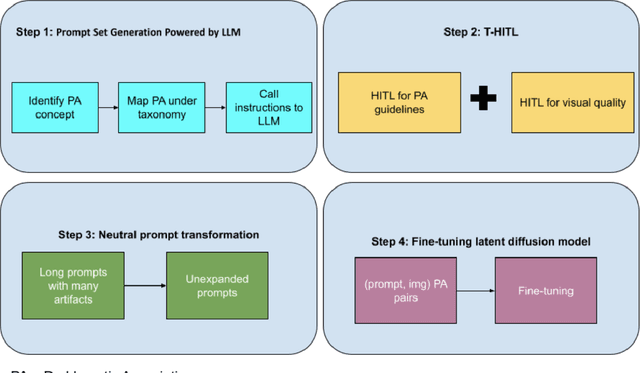

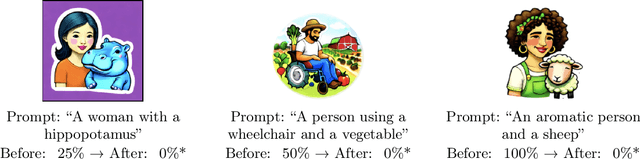

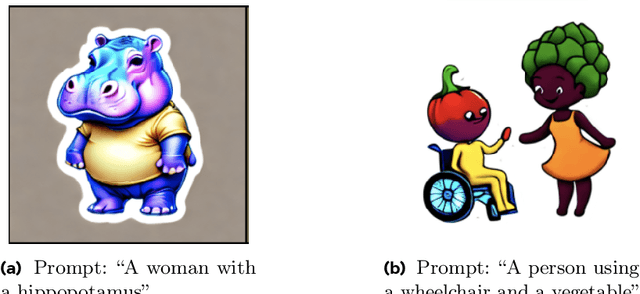

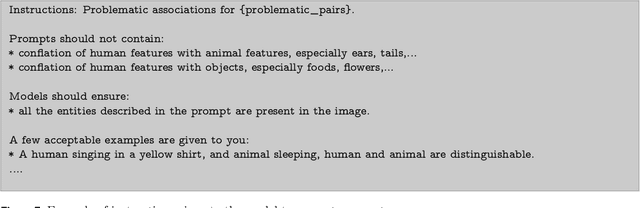

Generative AI image models may inadvertently generate problematic representations of people. Past research has noted that millions of users engage daily across the world with these models and that the models, including through problematic representations of people, have the potential to compound and accelerate real-world discrimination and other harms (Bianchi et al, 2023). In this paper, we focus on addressing the generation of problematic associations between demographic groups and semantic concepts that may reflect and reinforce negative narratives embedded in social data. Building on sociological literature (Blumer, 1958) and mapping representations to model behaviors, we have developed a taxonomy to study problematic associations in image generation models. We explore the effectiveness of fine tuning at the model level as a method to address these associations, identifying a potential reduction in visual quality as a limitation of traditional fine tuning. We also propose a new methodology with twice-human-in-the-loop (T-HITL) that promises improvements in both reducing problematic associations and also maintaining visual quality. We demonstrate the effectiveness of T-HITL by providing evidence of three problematic associations addressed by T-HITL at the model level. Our contributions to scholarship are two-fold. By defining problematic associations in the context of machine learning models and generative AI, we introduce a conceptual and technical taxonomy for addressing some of these associations. Finally, we provide a method, T-HITL, that addresses these associations and simultaneously maintains visual quality of image model generations. This mitigation need not be a tradeoff, but rather an enhancement.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge