Start Small: Training Game Level Generators from Nothing by Learning at Multiple Sizes

Sep 29, 2022

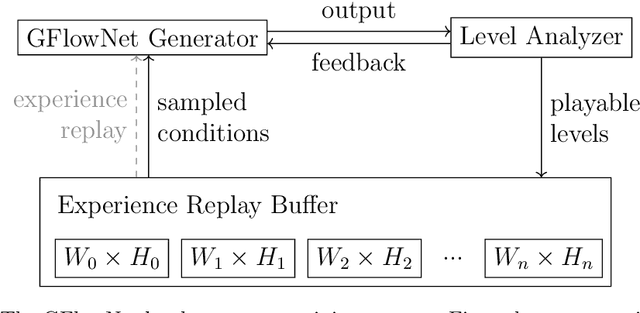

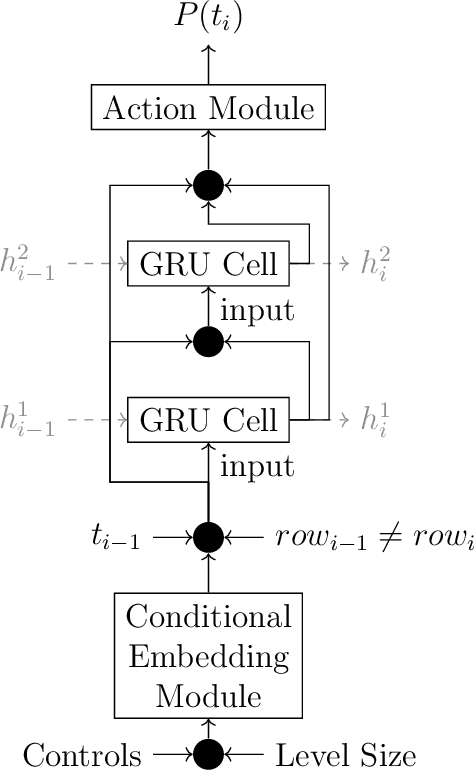

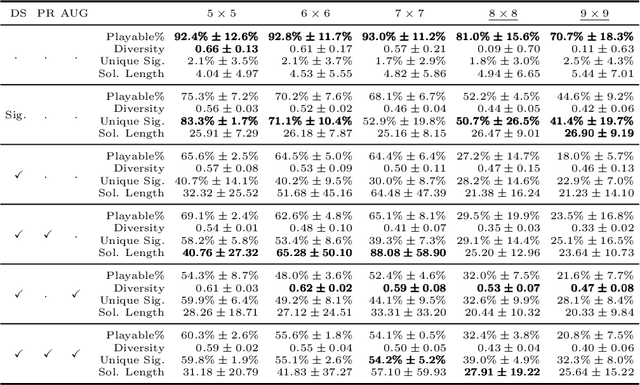

A procedural level generator is a tool that generates levels from noise. One approach to building generators is machine learning, but because training data is scarce, several methods have been proposed to train generators from nothing. However, level generation tasks tend to have sparse feedback, which is commonly mitigated using game-specific supplemental rewards. This paper proposes a novel approach to training generators from nothing by learning at multiple level sizes, starting from a small size and progressing up to the desired sizes. The approach exploits the observed phenomenon that feedback is denser at smaller sizes, which avoids the need for supplemental rewards. It also has the benefit of training generators that can output levels at various sizes. We apply this approach to train controllable generators using generative flow networks. We also modify diversity sampling to be compatible with generative flow networks and to expand the expressive range. The results show that our methods can generate high-quality, diverse levels for Sokoban, Zelda and Danger Dave at a variety of sizes after only 3h 29min to 6h 11min of training (depending on the game) on a single commodity machine. The results also show that our generators can output levels at sizes that were unavailable during training.
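To make the "denser feedback at smaller sizes" intuition concrete, here is a toy sketch that is not taken from the paper. It assumes a hypothetical validity constraint (a level must contain exactly one player tile) and levels filled uniformly at random from `num_tile_types` tiles; the function name and the tile count are illustrative assumptions. Under those assumptions, the chance that a random level satisfies the constraint, and so yields a non-zero reward signal, shrinks quickly as the grid grows.

```python
def prob_exactly_one_player(height, width, num_tile_types=5):
    """Probability that a uniformly random grid has exactly one player tile.

    Assumption (illustrative only): each cell is drawn independently and
    uniformly from num_tile_types tiles, one of which is the player.
    """
    cells = height * width
    p = 1.0 / num_tile_types
    # Binomial probability of exactly one success in `cells` trials.
    return cells * p * (1.0 - p) ** (cells - 1)


# Hypothetical size schedule, from small to large.
sizes = [(2, 2), (3, 3), (5, 5), (7, 7)]
probs = [prob_exactly_one_player(h, w) for h, w in sizes]

for (h, w), prob in zip(sizes, probs):
    print(f"{h}x{w}: {prob:.6f}")
```

A real game imposes many more constraints (solvability, reachable goals, and so on), so this only illustrates the direction of the effect: a generator starting at a small size sees useful feedback far more often than one starting at the target size.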
