Sparse Image based Navigation Architecture to Mitigate the need of precise Localization in Mobile Robots

Mar 29, 2022

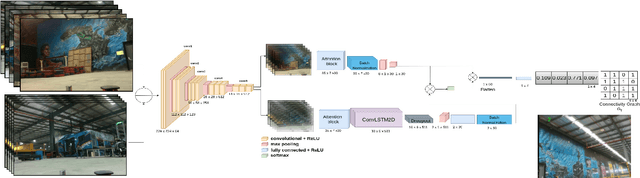

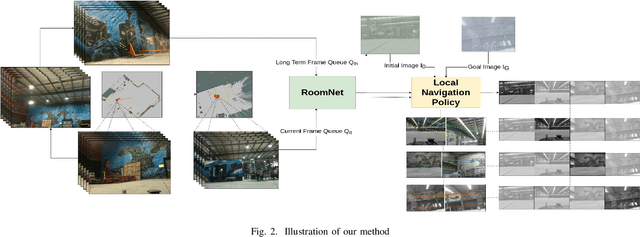

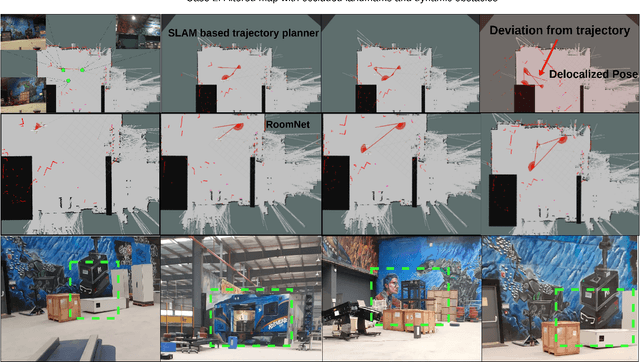

Traditional simultaneous localization and mapping (SLAM) methods focus on improving the robot's localization under environment and sensor uncertainty. This paper instead focuses on reducing the need for exact localization, so that a mobile robot can navigate autonomously using a sparse set of images. The proposed method consists of two parts: RoomNet, a model architecture for unsupervised learning that yields a coarse identification of the environment, and a separate local navigation policy for local identification and navigation. RoomNet learns and predicts the scene from the short-term image sequences seen by the robot, and learns the transitions between scenes from long-term image sequences. The local navigation policy uses sparse image matching to characterise how similar the frames currently observed are to the frames the robot viewed during the mapping and training stage. A sparse graph of the image sequence is created and then used to carry out robust navigation purely on the basis of visual goals. The proposed approach is evaluated on two robots in a test environment and demonstrates the ability to navigate in dynamic environments where landmarks are obscured and classical localization methods fail.
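The idea of navigating over a sparse graph of images can be illustrated with a minimal sketch. This is not the authors' implementation and does not reproduce RoomNet: it assumes each frame has already been reduced to a descriptor vector, it uses cosine similarity as a stand-in for the paper's sparse image matching, and the names `build_sparse_graph`, `closest_node`, `plan_path`, and the `keep_below` threshold are all hypothetical.

```python
from collections import deque
import math


def cosine_similarity(a, b):
    """Cosine similarity between two descriptor vectors (assumed stand-in for image matching)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_sparse_graph(descriptors, keep_below=0.9):
    """Keep a frame as a node only if it differs enough from the last kept frame.

    keep_below is a hypothetical threshold: frames whose similarity to the
    previous node is at or above it are treated as redundant and skipped.
    """
    nodes = [0]
    for i in range(1, len(descriptors)):
        if cosine_similarity(descriptors[nodes[-1]], descriptors[i]) < keep_below:
            nodes.append(i)
    # Consecutive kept frames are assumed traversable in both directions.
    edges = {n: set() for n in nodes}
    for a, b in zip(nodes, nodes[1:]):
        edges[a].add(b)
        edges[b].add(a)
    return nodes, edges


def closest_node(query, descriptors, nodes):
    """Coarse 'where am I': the node whose descriptor best matches the current view."""
    return max(nodes, key=lambda n: cosine_similarity(query, descriptors[n]))


def plan_path(edges, start, goal):
    """Breadth-first search over the sparse graph; returns a list of node ids or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = parents[current]
            return path[::-1]
        for neighbour in sorted(edges[current]):
            if neighbour not in parents:
                parents[neighbour] = current
                queue.append(neighbour)
    return None


# Toy descriptors standing in for a recorded image sequence.
frames = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0], [0.05, 1.0], [-1.0, 0.0]]
nodes, edges = build_sparse_graph(frames)
start = closest_node([0.1, 0.9], frames, nodes)
route = plan_path(edges, start, nodes[-1])
```

In this sketch the robot never estimates a metric pose: it only asks which stored frame its current view most resembles and then follows graph edges toward the frame that represents the visual goal.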
