Source Generator Attribution via Inversion

May 06, 2019

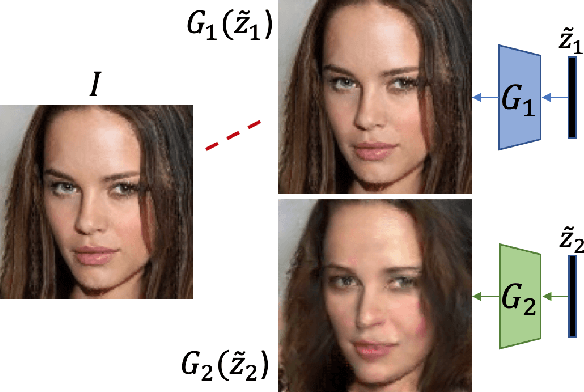

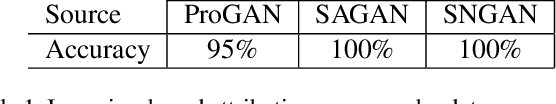

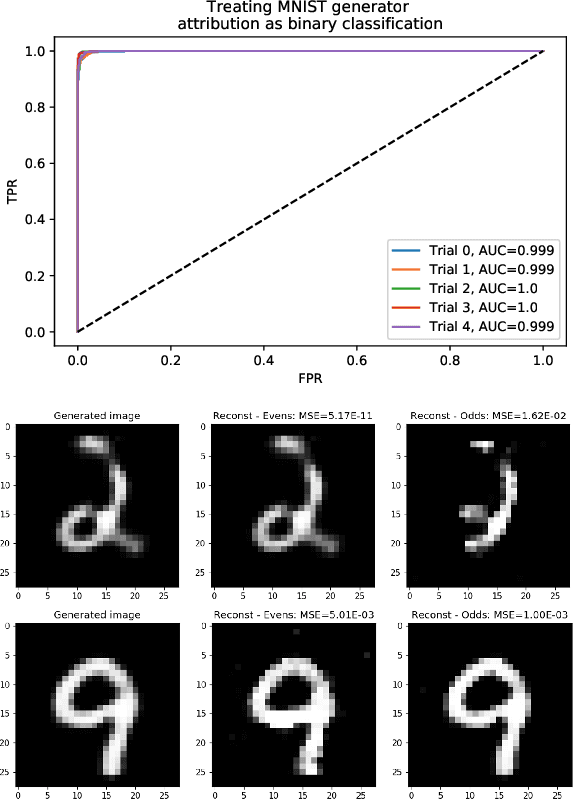

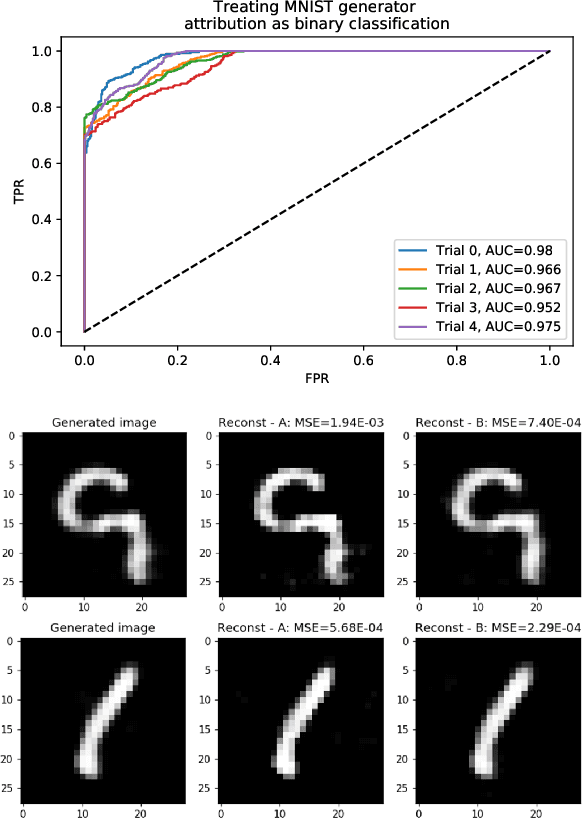

With advances in Generative Adversarial Networks (GANs) leading to dramatically improved synthetic images and video, there is an increased need for algorithms that extend traditional forensics to this new category of imagery. While GANs have been shown to be helpful in a number of computer vision applications, problematic uses such as "deep fakes" necessitate such forensics. Source camera attribution algorithms using various cues have addressed this need for imagery captured by a camera, but there are fewer options for synthetic imagery. We address the problem of attributing a synthetic image to a specific generator in a white-box setting, by inverting the process of generation. This enables us to simultaneously determine whether the generator produced the image and recover an input that produces a close match to the synthetic image.
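As a rough illustration of attribution by inversion, the sketch below searches for a latent input whose output matches a query image, then uses the remaining reconstruction error to decide whether the generator could have produced it. This is not the paper's implementation: the one-layer `tanh` generator, the plain gradient-descent inversion, and the names `generator`, `invert`, `attribute`, and `threshold` are all assumptions made for this toy example.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, IMAGE_DIM = 8, 64

# Hypothetical white-box generator: one fixed linear layer followed by tanh.
# A real GAN generator would be a deep network with known weights.
W = rng.normal(size=(IMAGE_DIM, LATENT_DIM)) / np.sqrt(LATENT_DIM)


def generator(z):
    """Map a latent vector to a flattened toy 'image' in (-1, 1)."""
    return np.tanh(W @ z)


def invert(x, steps=3000, lr=0.02, restarts=5, seed=1):
    """Search for z minimizing 0.5 * ||generator(z) - x||^2 by gradient descent.

    Returns the best latent found and its relative reconstruction error.
    """
    init_rng = np.random.default_rng(seed)
    best_z, best_err = None, np.inf
    for r in range(restarts):
        # First restart begins at the origin, the rest at random latents.
        z = np.zeros(LATENT_DIM) if r == 0 else init_rng.normal(size=LATENT_DIM)
        for _ in range(steps):
            out = generator(z)
            # Chain rule through tanh: d tanh(u)/du = 1 - tanh(u)^2.
            grad = W.T @ ((out - x) * (1.0 - out**2))
            z = z - lr * grad
        err = np.linalg.norm(generator(z) - x) / np.linalg.norm(x)
        if err < best_err:
            best_z, best_err = z, err
    return best_z, best_err


def attribute(x, threshold=1e-2):
    """Attribute x to the generator if inversion reconstructs it closely.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    z_hat, err = invert(x)
    return bool(err < threshold), z_hat, err


# An image produced by the generator, and one that was not.
z_true = rng.normal(size=LATENT_DIM)
x_fake = generator(z_true)
x_other = rng.uniform(-1.0, 1.0, size=IMAGE_DIM)

is_ours, z_hat, err_fake = attribute(x_fake)
is_other, _, err_other = attribute(x_other)
print(f"generated image: attributed={is_ours}, relative error={err_fake:.2e}")
print(f"unrelated image: attributed={is_other}, relative error={err_other:.2e}")
```

In this toy setting the generator is injective, so a near-zero reconstruction error also means the recovered latent is close to the one that produced the image. For a deep generator the inversion objective is non-convex, so the choice of initialization, optimizer, and decision threshold matters much more than it does here.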