SoK: Machine Learning with Confidential Computing

Paper and Code

Aug 22, 2022

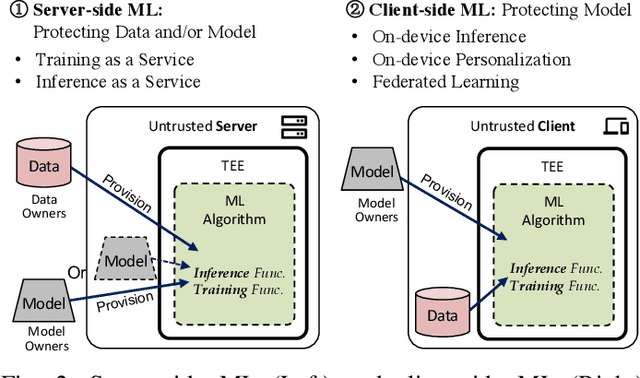

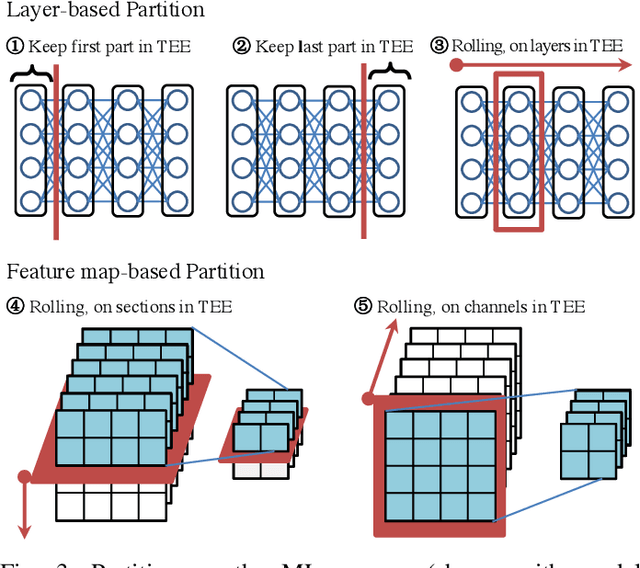

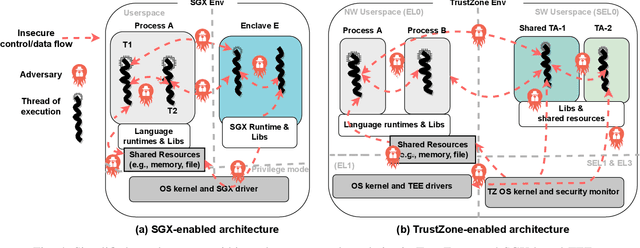

Privacy and security challenges in Machine Learning (ML) have become a critical topic, driven by ML's pervasive development and recent demonstrations of large attack surfaces. As a mature, system-oriented approach, confidential computing has been increasingly used in both academia and industry to improve privacy and security in various ML scenarios. In this paper, we systematize the findings on confidential computing-assisted ML security and privacy techniques for providing i) confidentiality guarantees and ii) integrity assurances. We further identify key challenges and provide dedicated analyses of the limitations of existing Trusted Execution Environment (TEE) systems for ML use cases. We discuss prospective work, including grounded privacy definitions, partitioned ML executions, dedicated TEE designs for ML, TEE-aware ML, and full-pipeline guarantees for ML. These potential solutions can help achieve much stronger TEE-enabled ML privacy guarantees without introducing computation and system costs.
