Skip Training for Multi-Agent Reinforcement Learning Controller for Industrial Wave Energy Converters


Sep 13, 2022

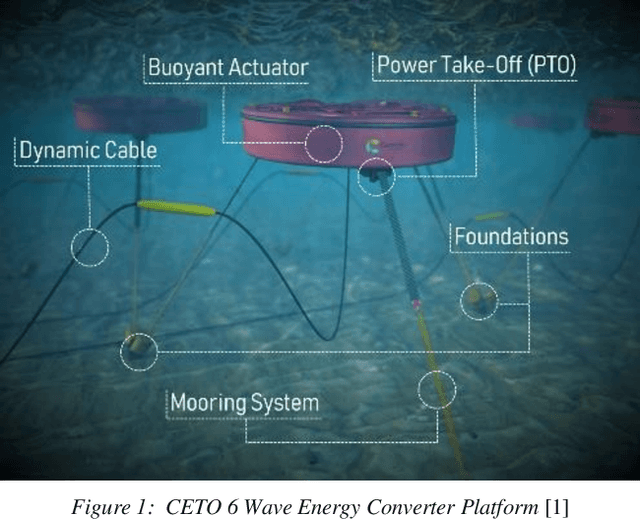

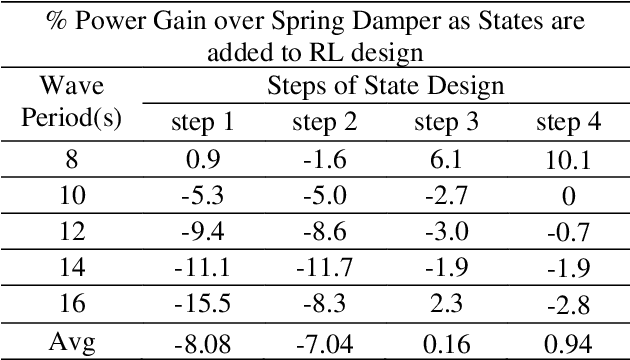

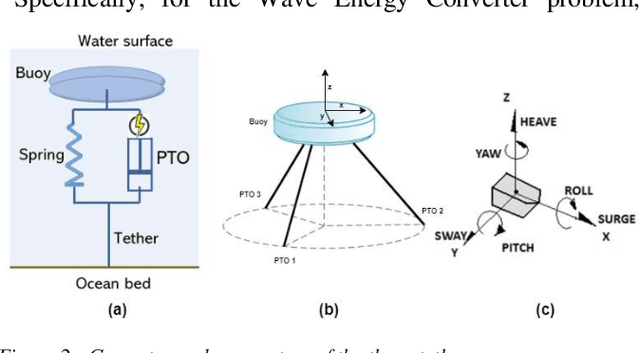

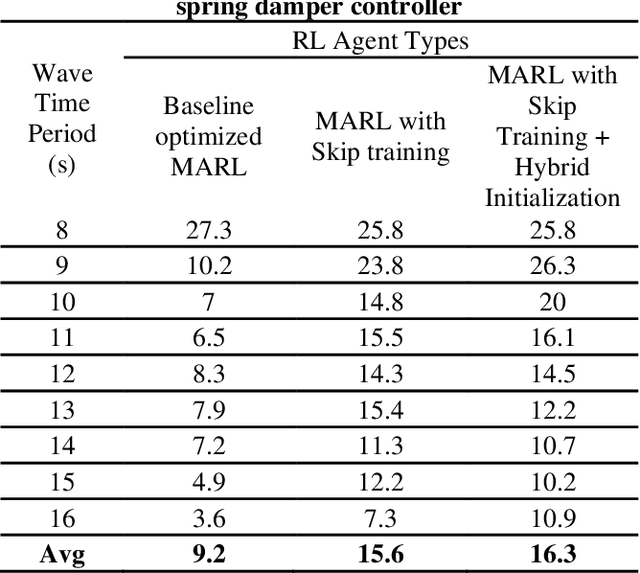

Recent Wave Energy Converters (WECs) are equipped with multiple legs and generators to maximize energy generation. Traditional controllers have shown limitations in capturing complex wave patterns, yet the controller must maximize energy capture efficiently. This paper introduces a Multi-Agent Reinforcement Learning (MARL) controller that outperforms the traditionally used Spring Damper (SD) controller. Our initial studies show that the complexity of the problem makes it hard for training to converge. Hence, we propose a novel skip training approach that enables MARL training to overcome performance saturation and converge to better controllers than default MARL training, boosting power generation. We also present a novel hybrid training initialization (STHTI) approach, in which the individual agents of the MARL controller are first trained individually against the baseline SD controller and then trained one agent at a time or all together in later iterations to accelerate convergence. With the proposed MARL controllers, trained using the Asynchronous Advantage Actor-Critic (A3C) algorithm, we achieved double-digit gains in energy efficiency over the baseline SD controller.
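The hybrid training initialization described in the abstract can be read as a schedule of which agents learn at each iteration. The sketch below is only an illustration of that reading, not the authors' implementation. The names `Stage` and `hybrid_init_schedule`, the iteration counts, the sequential ordering of the warm-up, and the round-robin rule for "one agent at a time" are all assumptions made for the example. Skip training is not sketched because the abstract does not define its mechanics.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Stage:
    """One training iteration in the (hypothetical) schedule."""
    trainable: FrozenSet[int]  # indices of agents whose weights are updated
    others: str                # who drives the remaining legs: "spring_damper" or "marl"


def hybrid_init_schedule(n_agents: int, warmup_per_agent: int,
                         later_iters: int, joint: bool) -> List[Stage]:
    """Build a schedule: individual warm-up against SD, then MARL training.

    Assumption: warm-up runs one agent after another; the abstract does not
    say whether the individual warm-ups are sequential or parallel.
    """
    schedule: List[Stage] = []

    # Phase 1: each agent learns alone while the baseline SD controller
    # handles everything else.
    for agent in range(n_agents):
        schedule += [Stage(frozenset({agent}), "spring_damper")] * warmup_per_agent

    # Phase 2: continue training with the other agents' learned policies,
    # either all together or one agent at a time (round-robin is assumed).
    for it in range(later_iters):
        if joint:
            trainable = frozenset(range(n_agents))
        else:
            trainable = frozenset({it % n_agents})
        schedule.append(Stage(trainable, "marl"))

    return schedule


if __name__ == "__main__":
    for i, stage in enumerate(hybrid_init_schedule(3, 2, 4, joint=False)):
        print(i, sorted(stage.trainable), stage.others)
```

A real training loop would consume each `Stage` by freezing the weights of every agent not in `trainable` and running an A3C update for the rest. That wiring depends on the simulator and RL library in use, so it is left out here.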
