Single-trial EEG Discrimination between Wrist and Finger Movement Imagery and Execution in a Sensorimotor BCI

Paper and Code

Aug 26, 2011

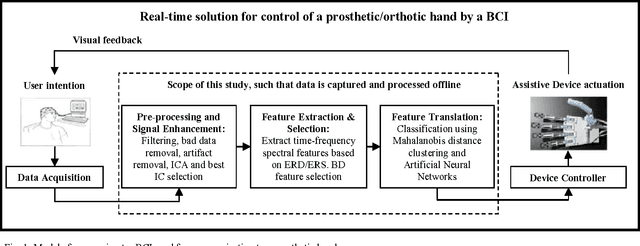

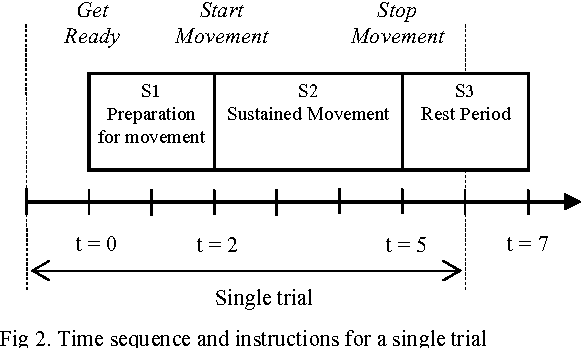

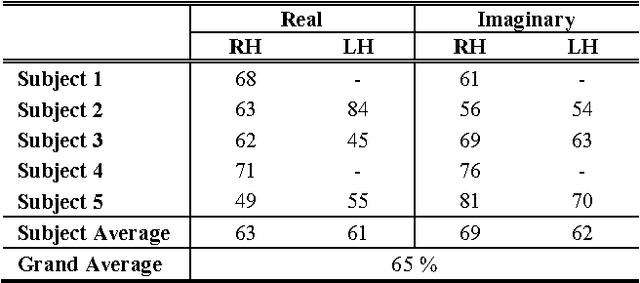

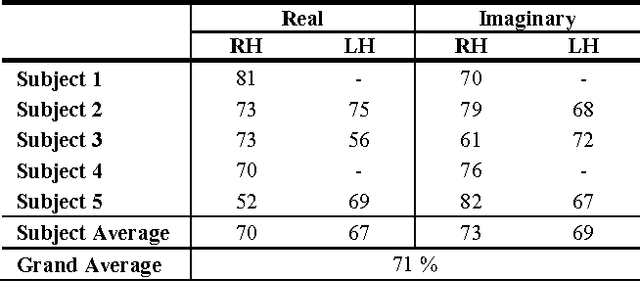

A brain-computer interface (BCI) can be used to control a prosthetic or orthotic hand using neural activity recorded from the brain. The core of such a sensorimotor BCI is the interpretation of the neural information extracted from the electroencephalogram (EEG). Improving this interpretation would help people with neuromuscular disorders to perform daily activities. This paper investigates whether the EEG associated with wrist movements can be discriminated from the EEG associated with finger movements. EEG was recorded from test subjects as they executed and imagined five essential hand movements using both hands. Independent component analysis (ICA) and time-frequency techniques were used to extract spectral features based on event-related (de)synchronisation (ERD/ERS), and the Bhattacharyya distance (BD) was used for feature reduction. Mahalanobis distance (MD) clustering and artificial neural networks (ANN) were used as classifiers, obtaining average accuracies of 65% and 71% respectively. These results indicate that single-trial EEG discrimination between wrist and finger movements is possible. The research introduces a new combination of motor tasks to BCI research.
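To make the feature-reduction and MD classification steps concrete, here is a minimal Python sketch. It is not the authors' implementation. It assumes two classes (for example wrist versus finger), models each feature as a univariate Gaussian when computing the Bhattacharyya distance, and assigns each trial to the class with the smallest Mahalanobis distance. The function names, the synthetic data, and the number of retained features are all hypothetical, and random numbers stand in for the ERD/ERS spectral features.

```python
import numpy as np


def bhattacharyya_distance(x_a, x_b):
    """BD between two 1-D samples, each modelled as a univariate Gaussian (assumption)."""
    mu_a, mu_b = np.mean(x_a), np.mean(x_b)
    var_a, var_b = np.var(x_a, ddof=1), np.var(x_b, ddof=1)
    mean_term = 0.25 * (mu_a - mu_b) ** 2 / (var_a + var_b)
    var_term = 0.5 * np.log((var_a + var_b) / (2.0 * np.sqrt(var_a * var_b)))
    return mean_term + var_term


def select_features(X, y, k):
    """Rank features by BD between the two classes and keep the k largest."""
    labels = np.unique(y)
    assert labels.size == 2, "this sketch handles exactly two classes"
    bd = np.array([
        bhattacharyya_distance(X[y == labels[0], j], X[y == labels[1], j])
        for j in range(X.shape[1])
    ])
    return np.argsort(bd)[::-1][:k]


def fit_class_stats(X, y):
    """Per-class mean and (pseudo-)inverse covariance."""
    stats = {}
    for label in np.unique(y):
        X_c = X[y == label]
        cov = np.atleast_2d(np.cov(X_c, rowvar=False))
        stats[label] = (X_c.mean(axis=0), np.linalg.pinv(cov))
    return stats


def predict_mahalanobis(stats, X):
    """Assign each row of X to the class with the smallest squared Mahalanobis distance."""
    labels = list(stats)
    d2 = np.stack([
        np.einsum("ij,jk,ik->i", X - stats[l][0], stats[l][1], X - stats[l][0])
        for l in labels
    ], axis=1)
    return np.array(labels)[np.argmin(d2, axis=1)]


def demo(seed=0):
    """Synthetic stand-in for spectral features: only columns 1 and 4 differ by class."""
    rng = np.random.default_rng(seed)
    n_per_class, n_features = 200, 6
    X = rng.normal(size=(2 * n_per_class, n_features))
    y = np.repeat([0, 1], n_per_class)  # 0 = "wrist", 1 = "finger" (hypothetical labels)
    X[y == 1, 1] += 3.0
    X[y == 1, 4] += 3.0
    top = select_features(X, y, k=2)
    stats = fit_class_stats(X[:, top], y)
    accuracy = np.mean(predict_mahalanobis(stats, X[:, top]) == y)
    return top, accuracy


if __name__ == "__main__":
    top, accuracy = demo()
    print("selected feature indices:", top)
    print("training accuracy:", accuracy)
```

The accuracy printed here is measured on the same synthetic trials used to fit the class statistics, so it says nothing about the 65% figure reported for MD clustering on real EEG. A faithful evaluation would use held-out trials.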
