Simple Adaptive Projection with Pretrained Features for Anomaly Detection


Dec 05, 2021

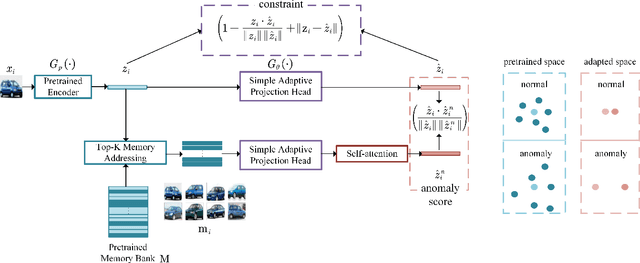

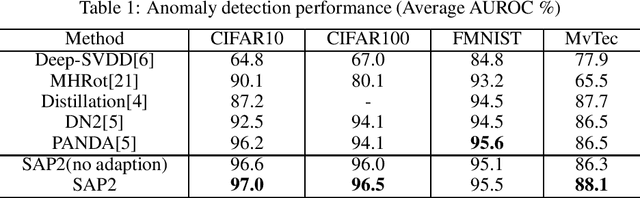

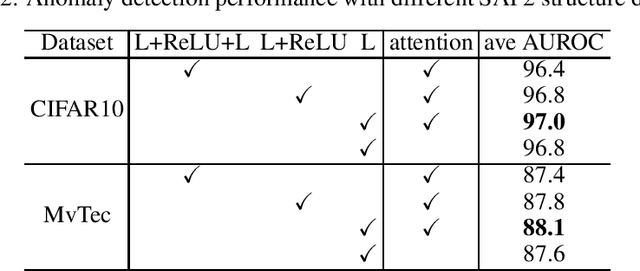

Deep anomaly detection aims to separate anomalous samples from normal ones using high-quality representations. Pretrained features provide effective representations and promising anomaly detection performance. However, with only one-class training data, adapting the pretrained features is a thorny problem. Specifically, existing optimization objectives with a global target often lead to pattern collapse, i.e., all inputs are mapped to the same representation. In this paper, we propose a novel adaptation framework consisting of a simple linear transformation and self-attention. The adaptation is applied to a specific input together with its k nearest normal-sample representations in the pretrained feature space, and it mines the inner relationships among similar one-class semantic features. Furthermore, based on this framework, we propose an effective constraint term that avoids learning a trivial solution. Our simple adaptive projection with pretrained features (SAP2) yields a novel anomaly detection criterion that is more accurate and more robust to pattern collapse. Our method achieves state-of-the-art performance on semantic and sensory anomaly detection benchmarks, including 96.5% AUROC on CIFAR-100, 97.0% AUROC on CIFAR-10, and 88.1% AUROC on MVTec.
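
The adaptation described in the abstract (a linear transformation plus self-attention over an input and its k nearest normal representations) can be illustrated with a rough NumPy sketch. This is not the authors' implementation: the function names, the single-head attention without learned query/key/value matrices, the Euclidean neighbor search, and the scoring rule (distance between the adapted input and the mean of its adapted neighbors) are all assumptions made for illustration. Training of the projection and the paper's constraint term are omitted because the abstract does not specify them.

```python
import numpy as np

def knn_indices(query, bank, k):
    """Indices of the k rows of `bank` closest to `query` (Euclidean distance)."""
    dists = np.linalg.norm(bank - query, axis=1)
    return np.argsort(dists)[:k]

def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def adapt(tokens, W):
    """Hypothetical adaptation: linear projection, then one self-attention pass."""
    z = tokens @ W                                   # (k+1, d_out)
    attn = softmax(z @ z.T / np.sqrt(z.shape[1]))    # (k+1, k+1), rows sum to 1
    return attn @ z                                  # (k+1, d_out)

def anomaly_score(query, bank, W, k=5):
    """Assumed criterion: distance from the adapted input to the mean adapted neighbor."""
    idx = knn_indices(query, bank, k)
    tokens = np.vstack([query[None, :], bank[idx]])  # input first, then its neighbors
    out = adapt(tokens, W)
    return float(np.linalg.norm(out[0] - out[1:].mean(axis=0)))

# Toy usage with random stand-ins for pretrained features of normal samples.
rng = np.random.default_rng(0)
bank = rng.normal(size=(200, 16))                    # "normal" feature bank (made up)
W = rng.normal(size=(16, 8)) / np.sqrt(16)           # untrained projection (made up)
score = anomaly_score(bank[0] + 0.01 * rng.normal(size=16), bank, W, k=5)
```

Because the projection here acts only on one input and its local neighborhood rather than on a single global target, the sketch mirrors the local, per-input character of the adaptation; whether it resists pattern collapse depends on the training objective, which is not modeled.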
