Sequential anatomy localization in fetal echocardiography videos

Oct 28, 2018

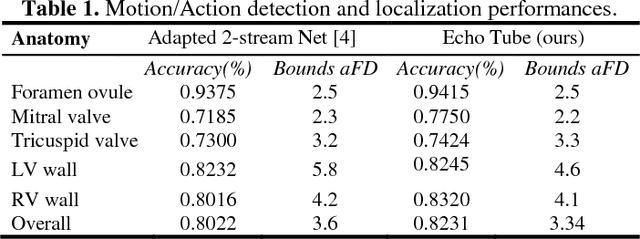

Fetal heart motion is an important diagnostic indicator for the structural detection and functional assessment of congenital heart disease. We propose an approach that integrates deep convolutional and recurrent architectures, using localized spatial and temporal features of different anatomical substructures within a global spatiotemporal context to interpret fetal echocardiography videos. We formulate the task as a cardiac structure localization problem, using convolutional architectures to aggregate global spatial context and to detect anatomical structures on spatial region proposals. Recurrent architectures then aggregate this information over time to quantify the progressive motion patterns. We show experimentally that the resulting architecture combines frame-level anatomical landmark detection over multiple video sequences with the temporal progression of the associated anatomical motions to encode local spatiotemporal fetal heart dynamics, and we validate it on a real-world clinical dataset.
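The abstract describes a two-stage data flow: a convolutional stage that detects anatomical structures in each frame, and a recurrent stage that aggregates the per-frame results over time. The following is only a minimal sketch of that data flow under loud assumptions, not the paper's model. The CNN detector is replaced by a hypothetical `frame_features` function that reduces each frame's detections (the labels, scores, and dictionary format are invented for illustration) to one confidence value per structure. A plain Elman RNN with random, untrained weights stands in for whatever recurrent unit the authors actually used.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    """Untrained weights drawn uniformly from [-scale, scale]."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def frame_features(detections: List[dict], structures: Sequence[str]) -> Vector:
    """Hypothetical stand-in for the convolutional stage: one feature per
    anatomical structure, holding its highest detection score in the frame
    (0.0 if the structure was not detected)."""
    feats = []
    for name in structures:
        scores = [d["score"] for d in detections if d["label"] == name]
        feats.append(max(scores, default=0.0))
    return feats


class ElmanRNN:
    """Minimal recurrent cell: h_t = tanh(W_x x_t + W_h h_{t-1} + b).
    Assumed here in place of the paper's recurrent architecture."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.w_x = random_matrix(hidden_dim, input_dim, rng)
        self.w_h = random_matrix(hidden_dim, hidden_dim, rng)
        self.b = [0.0] * hidden_dim
        self.hidden_dim = hidden_dim

    def run(self, inputs: Sequence[Sequence[float]]) -> List[Vector]:
        """Return the hidden state after each frame, starting from h_0 = 0."""
        h = [0.0] * self.hidden_dim
        states = []
        for x in inputs:
            pre = [a + c + bias for a, c, bias in
                   zip(matvec(self.w_x, x), matvec(self.w_h, h), self.b)]
            h = [math.tanh(p) for p in pre]
            states.append(h)
        return states


# Hypothetical structure labels and per-frame detections for a 3-frame clip.
STRUCTURES = ("left_ventricle", "right_ventricle", "aorta")
video = [
    [{"label": "left_ventricle", "score": 0.9}, {"label": "aorta", "score": 0.4}],
    [{"label": "right_ventricle", "score": 0.7}],
    [],
]

per_frame = [frame_features(dets, STRUCTURES) for dets in video]
# per_frame → [[0.9, 0.0, 0.4], [0.0, 0.7, 0.0], [0.0, 0.0, 0.0]]
rnn = ElmanRNN(input_dim=len(STRUCTURES), hidden_dim=4)
states = rnn.run(per_frame)
print(len(states), len(states[0]))  # prints 3 4
```

Each hidden state summarizes the detections seen so far, which is the sense in which the recurrent stage carries spatial detections forward in time. In a real system both stages would be learned, and the recurrent output would feed a task-specific head.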
