Semantic segmentation of forest stands using deep learning

Apr 03, 2025

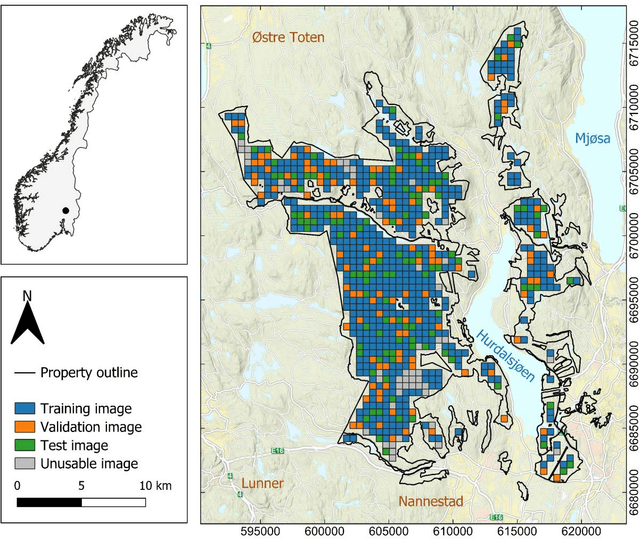

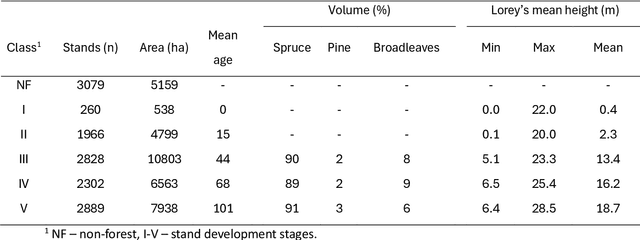

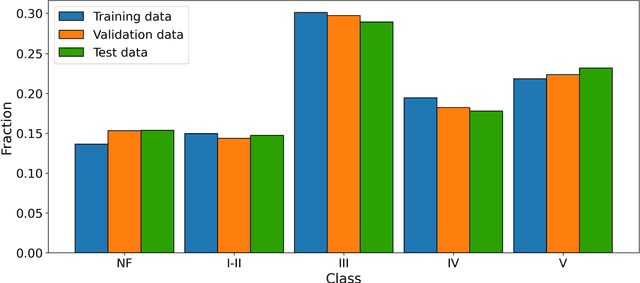

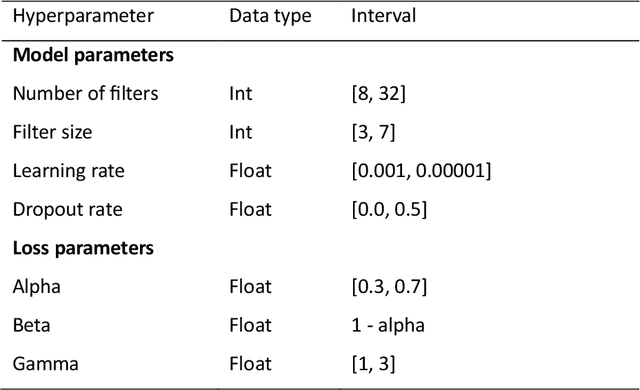

Forest stands are the fundamental units in forest management inventories, silviculture, and financial analysis within operational forestry. Over the past two decades, a common method for mapping stand borders has been manual delineation based on the interpretation of stereographic aerial images. This process is time-consuming and subjective, which limits operational efficiency and introduces inconsistencies. Substantial effort has been devoted to automating it, using various algorithms together with aerial images and canopy height models constructed from airborne laser scanning (ALS) data, but manual interpretation remains the preferred method. Deep learning (DL) methods have demonstrated great potential in computer vision, yet their application to forest stand delineation remains unexplored in published research. This study presents a novel approach that frames stand delineation as a multiclass segmentation problem and applies a U-Net-based DL framework. The model was trained and evaluated using multispectral images, ALS data, and an existing stand map created by an expert interpreter. Performance was assessed on independent data using overall accuracy, a standard metric for classification tasks that measures the proportion of correctly classified pixels. The model achieved an overall accuracy of 0.73. These results demonstrate strong potential for DL in automated stand delineation. However, a few key challenges were noted, especially for complex forest environments.
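To make the evaluation metric concrete, the following is a minimal sketch of how overall accuracy can be computed from a predicted label map and a reference label map. It is not the authors' evaluation code: the function name `overall_accuracy`, the optional `ignore_label` parameter, and the toy 3×3 label maps are assumptions introduced here for illustration only.

```python
def overall_accuracy(pred, truth, ignore_label=None):
    """Fraction of pixels whose predicted class equals the reference class.

    `pred` and `truth` are 2D label maps given as lists of rows.
    `ignore_label` (hypothetical, not from the paper) marks reference
    pixels that should be excluded, e.g. unlabeled areas.
    """
    if len(pred) != len(truth):
        raise ValueError("label maps must have the same number of rows")

    correct = 0
    counted = 0
    for pred_row, truth_row in zip(pred, truth):
        if len(pred_row) != len(truth_row):
            raise ValueError("label maps must have the same row lengths")
        for p, t in zip(pred_row, truth_row):
            if ignore_label is not None and t == ignore_label:
                continue  # skip pixels without a valid reference label
            counted += 1
            if p == t:
                correct += 1

    if counted == 0:
        raise ValueError("no valid reference pixels to evaluate")
    return correct / counted


# Toy example with three hypothetical stand classes (0, 1, 2).
truth = [
    [0, 0, 1],
    [2, 2, 1],
    [2, 2, 1],
]
pred = [
    [0, 1, 1],
    [2, 2, 1],
    [2, 0, 1],
]

# 7 of the 9 pixels match the reference map.
print(round(overall_accuracy(pred, truth), 3))
```

Because overall accuracy weights every pixel equally, large classes dominate the score, so a value such as the reported 0.73 says nothing on its own about how accurately small or rare classes are mapped. In practice the label maps would typically be held as arrays rather than nested lists; plain lists are used here only to keep the sketch dependency-free.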