Semantic Interpretation of Deep Neural Networks Based on Continuous Logic


Oct 06, 2019

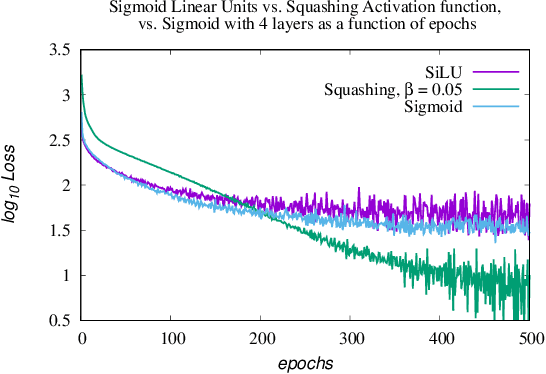

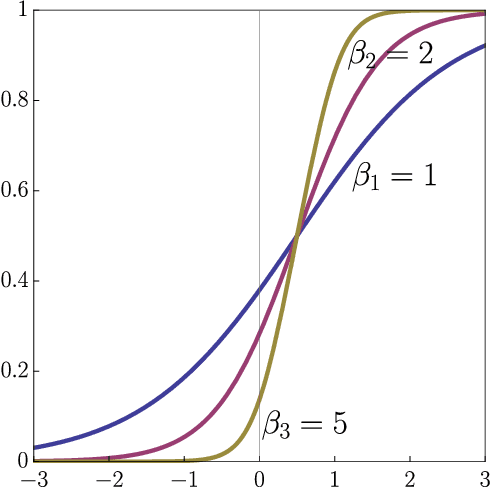

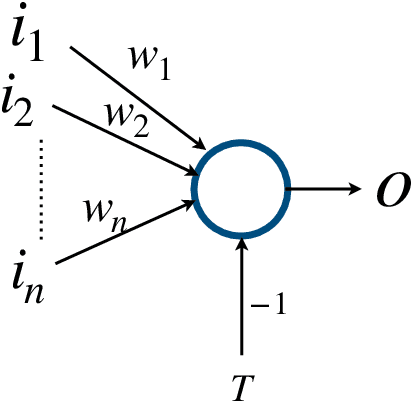

Combining deep neural networks with concepts from continuous logic is desirable as a way to reduce the uninterpretability of neural models. Nilpotent logical systems offer an appropriate mathematical framework for obtaining continuous-logic-based neural networks (CL neural networks). We suggest using a differentiable approximation of the cutting function in the nodes of the input layer as well as in the logical operators of the hidden layers. Initial experimental results point towards a promising new approach to machine learning.
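The abstract relies on two ingredients: the cutting function [x] = min(1, max(0, x)) and nilpotent logical operators built from it. The following is a minimal sketch, assuming Łukasiewicz-style nilpotent operators (conjunction [x + y - 1], disjunction [x + y], negation 1 - x) and a softplus-difference "squashing" function as the differentiable approximation. The helper names (`cut`, `squash`, `cl_neuron`, `conj`, `disj`, `neg`) and the parameters `a`, `lam`, `beta` are hypothetical and chosen for illustration. They are not necessarily the paper's exact definitions.

```python
import math
from typing import Sequence


def cut(x: float) -> float:
    # Hard cutting function [x] = min(1, max(0, x)); not differentiable at 0 and 1.
    return min(1.0, max(0.0, x))


def softplus(z: float) -> float:
    # Numerically stable ln(1 + e^z), avoiding overflow for large |z|.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def squash(x: float, a: float = 0.5, lam: float = 1.0, beta: float = 20.0) -> float:
    # Assumed smooth approximation of a cutting function that rises linearly
    # from 0 at a - lam/2 to 1 at a + lam/2. The defaults (a=0.5, lam=1)
    # approximate [x] itself; a larger beta gives a sharper approximation.
    lo, hi = a - lam / 2, a + lam / 2
    return (softplus(beta * (x - lo)) - softplus(beta * (x - hi))) / (lam * beta)


def cl_neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
              smooth: bool = True, beta: float = 20.0) -> float:
    # Hypothetical CL-style node: weighted sum followed by (approximate) cutting.
    s = sum(w * x for w, x in zip(weights, inputs)) + bias
    return squash(s, beta=beta) if smooth else cut(s)


# Nilpotent (Lukasiewicz-type) operators as special cases of the node above.
def conj(x: float, y: float, smooth: bool = True) -> float:
    return cl_neuron([x, y], [1.0, 1.0], -1.0, smooth)  # [x + y - 1]


def disj(x: float, y: float, smooth: bool = True) -> float:
    return cl_neuron([x, y], [1.0, 1.0], 0.0, smooth)  # [x + y]


def neg(x: float) -> float:
    return 1.0 - x  # standard strong negation
```

Because `squash` is smooth everywhere, gradients can flow through the logical operators during training. The hard `cut` recovers the exact nilpotent operators when `smooth=False`.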
