rpcPRF: Generalizable MPI Neural Radiance Field for Satellite Camera

Paper and Code

Oct 11, 2023

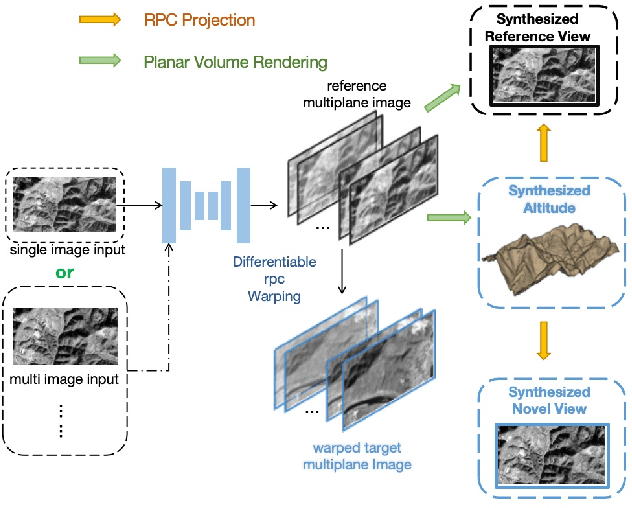

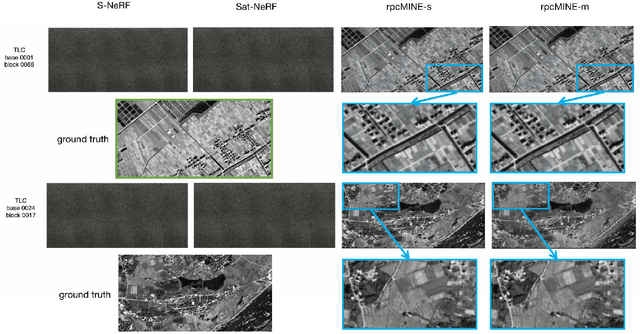

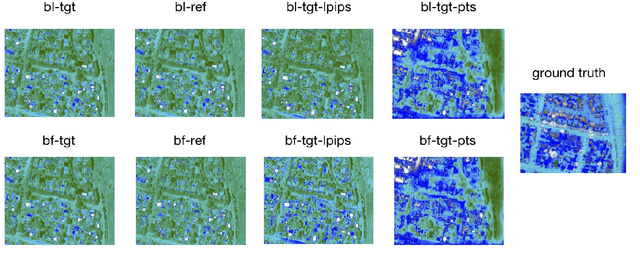

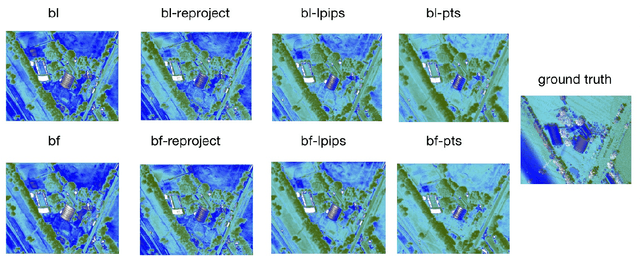

Novel view synthesis of satellite images has a wide range of practical applications. Recent advances in Neural Radiance Fields have predominantly targeted pinhole cameras, while models for satellite cameras often demand many input views. This paper presents rpcPRF, a Multiplane Images (MPI) based Planar neural Radiance Field for the Rational Polynomial Camera (RPC). Unlike coordinate-based neural radiance fields, which need many views of one scene, our model is applicable to single or few inputs and performs well on images from unseen scenes. To enable generalization across scenes, we propose to use reprojection supervision to induce the predicted MPI to learn the correct geometry between the 3D coordinates and the images. Moreover, we remove the stringent requirement of dense depth supervision imposed by deep multiview-stereo-based methods by introducing the rendering techniques of radiance fields. rpcPRF combines the strengths of implicit representations with the advantages of the RPC model to capture the continuous altitude space while learning the 3D structure. Given an RGB image and its corresponding RPC, the end-to-end model learns to synthesize the novel view for a new RPC and to reconstruct the altitude of the scene. When multiple views are provided as inputs, rpcPRF exploits the additional supervision they provide. On the TLC dataset from ZY-3 and the SatMVS3D dataset with urban scenes from WV-3, rpcPRF outperforms state-of-the-art NeRF-based methods by a significant margin in terms of image fidelity, reconstruction accuracy, and efficiency, for both single-view and multiview tasks.
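As a rough illustration of how radiance-field style rendering can yield both an image and an altitude map from a stack of planes, the sketch below alpha-composites a generic MPI with NumPy. It is not the authors' implementation: the function name `composite_mpi`, the array layout, and the assumption that planes are ordered from the one nearest the sensor (highest altitude) to the farthest are all hypothetical, and the RPC warping step that would place each plane in the target view is omitted.

```python
import numpy as np

def composite_mpi(colors, alphas, altitudes):
    """Front-to-back alpha compositing of a plane stack (illustrative only).

    colors:    (D, H, W, 3) per-plane RGB
    alphas:    (D, H, W)    per-plane opacity in [0, 1]
    altitudes: (D,)         altitude of each plane, assumed ordered from
                            nearest the sensor to farthest
    """
    # Transmittance of plane i: product of (1 - alpha) over planes in front of it.
    ones = np.ones_like(alphas[:1])
    transmittance = np.cumprod(
        np.concatenate([ones, 1.0 - alphas[:-1]], axis=0), axis=0
    )
    # Contribution weight of each plane to the final pixel.
    weights = alphas * transmittance
    # Rendered image: weighted sum of plane colors.
    image = (weights[..., None] * colors).sum(axis=0)
    # Expected altitude per pixel: weighted sum of plane altitudes.
    altitude = (weights * altitudes[:, None, None]).sum(axis=0)
    return image, altitude, weights
```

In this formulation the same weights drive both outputs, which is one way a photometric loss on the rendered image can shape the altitude estimate without dense depth labels.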
