Robust Wavelet-based Assessment of Scaling with Applications

Paper and Code

Jan 23, 2022

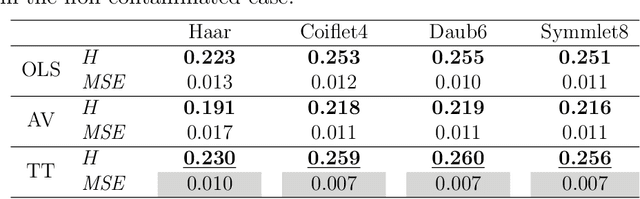

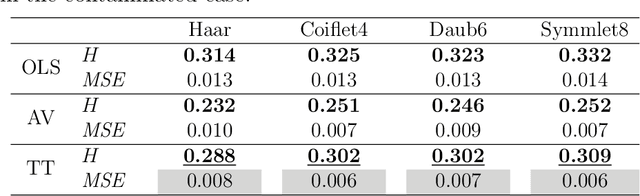

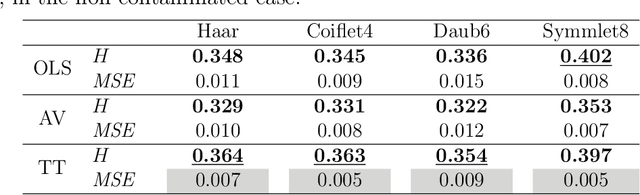

A number of approaches have dealt with the statistical assessment of self-similarity, and many of them are based on multiscale concepts. Most rely on distributional assumptions that are usually violated by real data traces, which are often characterized by large temporal or spatial mean level shifts, missing values, or extreme observations. A novel, robust approach based on Theil-type weighted regression is proposed for estimating self-similarity in two-dimensional data (images). The method is compared to two traditional estimation techniques that use wavelet decompositions: ordinary least squares (OLS) and the Abry-Veitch bias-correcting estimator (AV). As an application, the suitability of the self-similarity estimate resulting from the robust approach is illustrated as a predictive feature in the classification of digitized mammogram images as cancerous or non-cancerous. The diagnostic employed here is based on the properties of image backgrounds, a modality that is typically unused in breast cancer screening. Classification results show nearly 68% accuracy, varying slightly with the choice of wavelet basis and the range of multiresolution levels used.
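To make the contrast between OLS and a Theil-type fit concrete, here is a minimal sketch, not the paper's estimator. It uses the plain, unweighted Theil-Sen slope (the median of pairwise slopes); the weighting scheme of the proposed method is not described in this abstract and is not reproduced. The log-energy values are synthetic, the function names are hypothetical, and the conversion `H = -(slope + 2) / 2` assumes the common convention that the log2 wavelet energy of a 2D self-similar field decays linearly in the level index with slope `-(2H + 2)`.

```python
from itertools import combinations
from statistics import median


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    den = sum((a - mean_x) ** 2 for a in x)
    return num / den


def theil_slope(x, y):
    """Unweighted Theil-Sen slope: median of all pairwise slopes."""
    slopes = [
        (y[j] - y[i]) / (x[j] - x[i])
        for i, j in combinations(range(len(x)), 2)
        if x[j] != x[i]
    ]
    return median(slopes)


def hurst_from_slope(slope, dim=2):
    """Assumed convention: log2 energy slope equals -(2H + dim)."""
    return -(slope + dim) / 2


# Synthetic log2 energies for levels 1..6 with true H = 0.4 (slope -2.8).
levels = [1, 2, 3, 4, 5, 6]
log_energy = [-2.8 * j + 1.0 for j in levels]

# Contaminate one level to mimic an extreme observation.
log_energy[0] += 4.0

h_ols = hurst_from_slope(ols_slope(levels, log_energy))
h_theil = hurst_from_slope(theil_slope(levels, log_energy))
print(f"OLS estimate of H:       {h_ols:.3f}")
print(f"Theil-Sen estimate of H: {h_theil:.3f}")
```

In this toy setting the single contaminated level pulls the OLS slope well away from the true value, while the median of pairwise slopes is unaffected because most pairs involve only clean levels.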
