Reweighting samples under covariate shift using a Wasserstein distance criterion


Oct 19, 2020

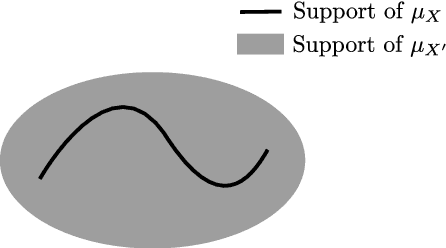

Given two random variables with different laws, each observed only through a finite i.i.d. sample, we address how to reweight the first sample so that its weighted empirical distribution converges to the true law of the second variable as both sample sizes go to infinity. We study an optimal reweighting that minimizes the Wasserstein distance between the empirical measures of the two samples, which yields an expression of the weights in terms of nearest neighbors. We derive consistency and some asymptotic convergence rates in expected Wasserstein distance; these results do not require absolute continuity of one random variable with respect to the other. They have applications to decoupled estimation in uncertainty quantification and to bounding the generalization error of nearest neighbor regression under covariate shift.
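
To make the nearest-neighbor form of the weights concrete, here is a minimal sketch. It is not the authors' code: the function name `nn_weights`, the use of Euclidean distance, and the toy data are assumptions made for illustration. It relies on the observation that, when the weights on the first sample are free, the transport cost to the second sample's empirical measure is minimized by sending each point of the second sample to its nearest neighbor in the first sample, so each weight is the fraction of second-sample points that land on that first-sample point.

```python
import numpy as np


def nn_weights(x, y):
    """Nearest-neighbor weights for the first sample `x` given the second sample `y`.

    Hypothetical helper (not from the paper): weight i is the fraction of
    points in `y` whose nearest neighbor in `x` (Euclidean distance) is x[i].
    Ties are broken by taking the lowest index, an arbitrary choice.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Treat 1-D inputs as samples of scalar observations.
    if x.ndim == 1:
        x = x[:, None]
    if y.ndim == 1:
        y = y[:, None]
    # Pairwise squared distances, shape (len(y), len(x)).
    d2 = ((y[:, None, :] - x[None, :, :]) ** 2).sum(axis=2)
    # Index of the nearest first-sample point for each second-sample point.
    nearest = d2.argmin(axis=1)
    # Count how many second-sample points each first-sample point receives.
    counts = np.bincount(nearest, minlength=len(x))
    return counts / len(y)


# Toy data (assumed for illustration only).
x = np.array([0.0, 1.0, 10.0])
y = np.array([0.1, 0.2, 0.9, 9.0])
w = nn_weights(x, y)
```

In this toy case two of the four points of `y` are closest to `x[0]`, one to `x[1]`, and one to `x[2]`, so the weights are 0.5, 0.25, and 0.25. The brute-force distance matrix is chosen for readability; for large samples a spatial index such as a k-d tree would be the usual substitute.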
