Reinforcement Learning with Expert Trajectory For Quantitative Trading

Paper and Code

May 09, 2021

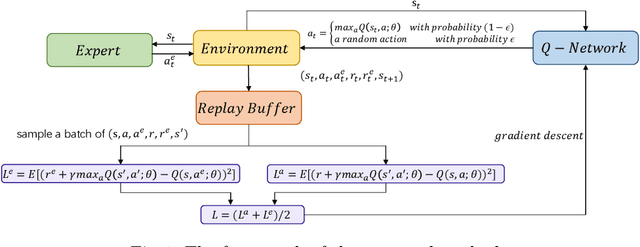

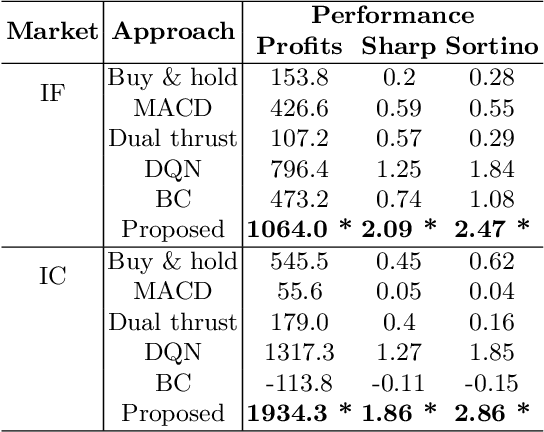

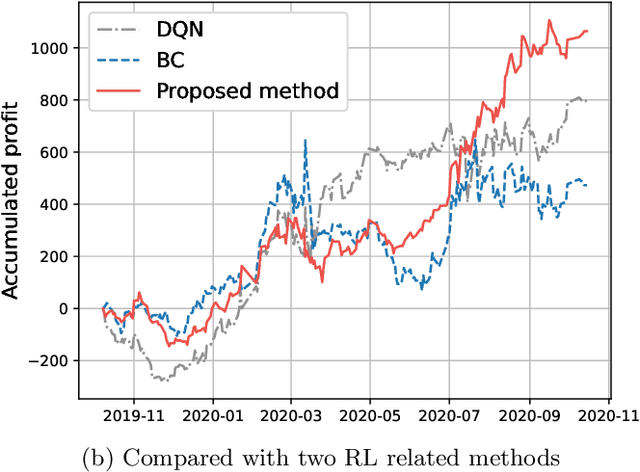

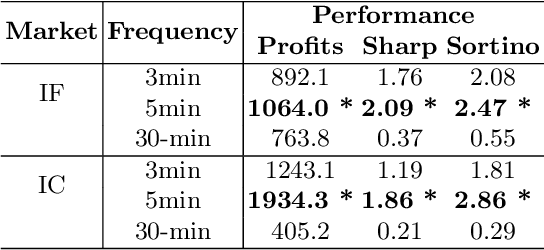

In recent years, quantitative investment methods combined with artificial intelligence have attracted more and more attention from investors and researchers. Existing related methods based on the supervised learning are not very suitable for learning problems with long-term goals and delayed rewards in real futures trading. In this paper, therefore, we model the price prediction problem as a Markov decision process (MDP), and optimize it by reinforcement learning with expert trajectory. In the proposed method, we employ more than 100 short-term alpha factors instead of price, volume and several technical factors in used existing methods to describe the states of MDP. Furthermore, unlike DQN (deep Q-learning) and BC (behavior cloning) in related methods, we introduce expert experience in training stage, and consider both the expert-environment interaction and the agent-environment interaction to design the temporal difference error so that the agents are more adaptable for inevitable noise in financial data. Experimental results evaluated on share price index futures in China, including IF (CSI 300) and IC (CSI 500), show that the advantages of the proposed method compared with three typical technical analysis and two deep leaning based methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge