Privacy-preserving Non-negative Matrix Factorization with Outliers

Paper and Code

Nov 11, 2022

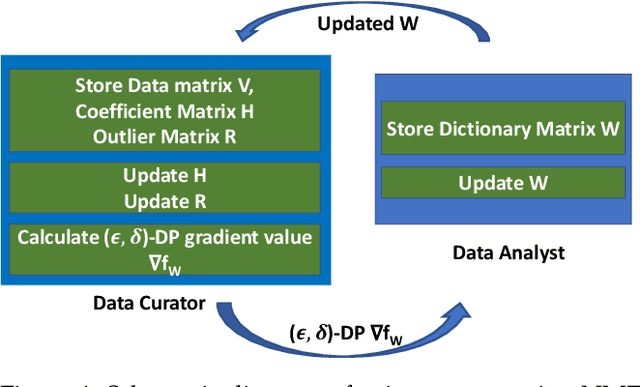

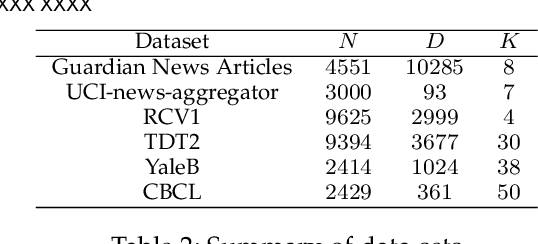

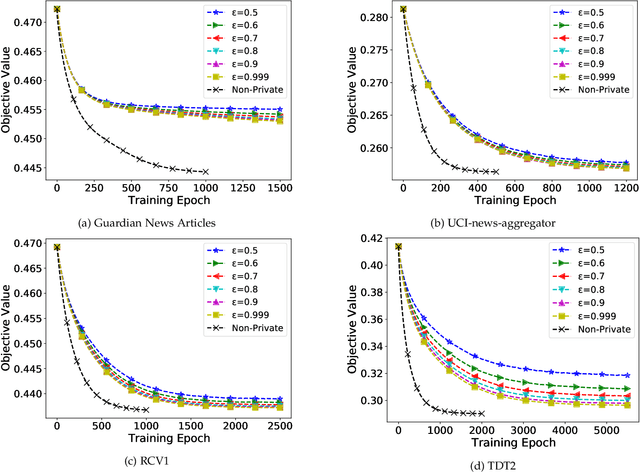

Non-negative matrix factorization is a popular unsupervised machine learning algorithm for extracting meaningful features from inherently non-negative data. However, such data sets often contain privacy-sensitive user data, so steps must be taken to protect the privacy of users while the data are analyzed. In this work, we focus on developing a non-negative matrix factorization algorithm in the privacy-preserving framework. More specifically, we propose a novel privacy-preserving algorithm for non-negative matrix factorization that operates on private data while achieving results comparable to those of the non-private algorithm. We design the framework so that one can select the degree of privacy guarantee based on the utility gap. We evaluate the proposed framework on six real data sets. The experimental results show that, under some parameter regimes, the proposed method achieves performance very close to that of the non-private algorithm while ensuring strict privacy.
