Predicting Chaotic System Behavior using Machine Learning Techniques


Aug 11, 2024

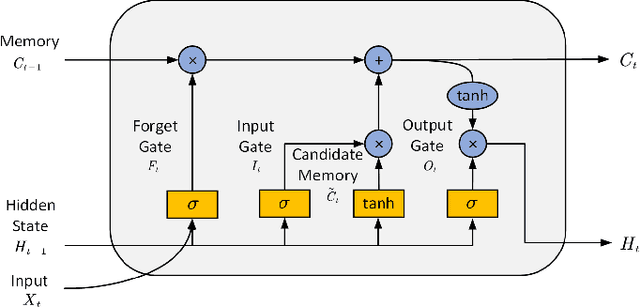

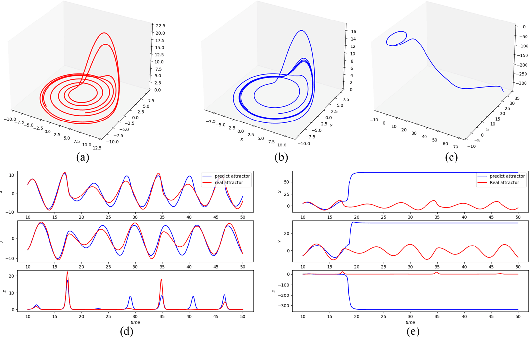

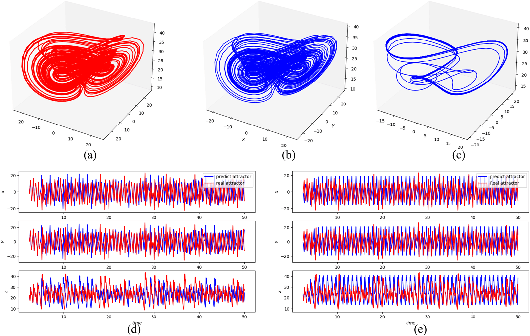

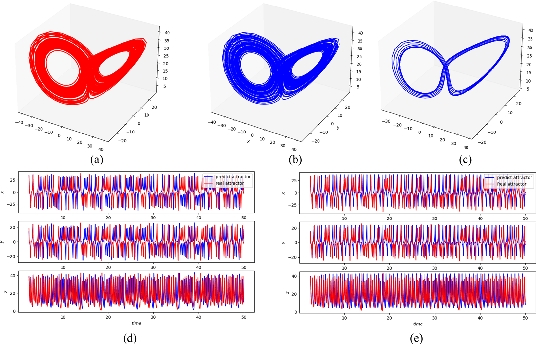

Recently, machine learning techniques, particularly deep learning, have outperformed traditional time series forecasting methods across a range of applications, including both univariate and multivariate forecasting. This study investigates the capability of (i) Next Generation Reservoir Computing (NG-RC), (ii) Reservoir Computing (RC), and (iii) Long Short-Term Memory (LSTM) networks to predict chaotic system behavior, and compares their performance in terms of accuracy, efficiency, and robustness. The methods are applied to time series generated by four representative chaotic systems: the Lorenz, Rössler, Chen, and Qi systems. We find that NG-RC is more computationally efficient than RC and LSTM and offers greater potential for predicting chaotic system behavior.
