Personalized Neural Embeddings for Collaborative Filtering with Text

Mar 19, 2019

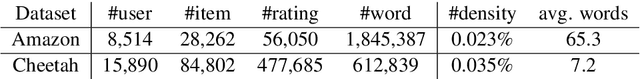

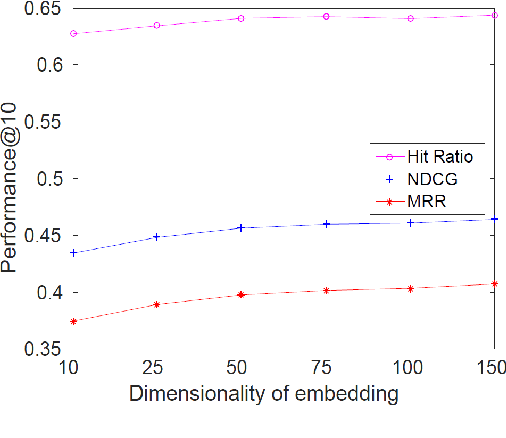

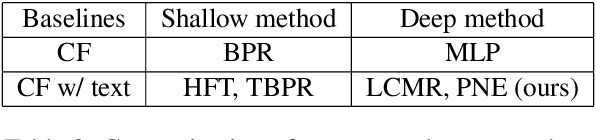

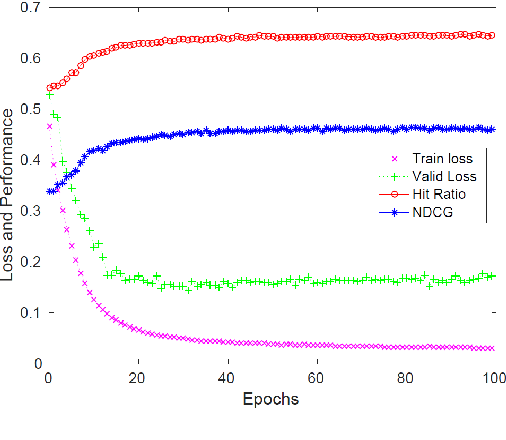

Collaborative filtering (CF) is a core technique for recommender systems. Traditional CF approaches exploit only user-item relations (e.g., clicks, likes, and views) and therefore suffer from data sparsity. Items are usually associated with unstructured text, such as article abstracts and product reviews. We develop a Personalized Neural Embedding (PNE) framework that exploits both interactions and words seamlessly. We learn embeddings of users, items, and words jointly, and predict user preferences on items from these learned representations. PNE estimates the probability that a user will like an item from two terms: behavior factors and semantic factors. On two real-world datasets, PNE outperforms four state-of-the-art baselines on three metrics. We also show through visualization that PNE learns meaningful word embeddings.
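The two-term prediction described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify how the behavior and semantic terms are computed or combined, so the code below assumes, purely for illustration, that the behavior term is a dot product between user and item embeddings, that the semantic term is a dot product between the user embedding and the mean embedding of the item's words, and that the two are summed and passed through a sigmoid. All names (`predict`, `init_embedding`, `DIM`, the toy vocabulary) are hypothetical.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_embedding(dim, rng):
    """Small random vector; stands in for a learned embedding."""
    return [rng.gauss(0.0, 0.1) for _ in range(dim)]


def predict(user_vec, item_vec, word_vecs):
    """Probability that a user likes an item (illustrative form only).

    behavior term: user-item affinity from interaction embeddings (assumed dot product)
    semantic term: user affinity to the item's text (assumed mean of word embeddings)
    """
    behavior = dot(user_vec, item_vec)
    if word_vecs:
        dim = len(user_vec)
        mean_words = [sum(w[k] for w in word_vecs) / len(word_vecs) for k in range(dim)]
        semantic = dot(user_vec, mean_words)
    else:
        # An item without text contributes no semantic evidence.
        semantic = 0.0
    return sigmoid(behavior + semantic)


# Toy data: one user, one item, and the words attached to that item.
rng = random.Random(0)
DIM = 8
users = {"u1": init_embedding(DIM, rng)}
items = {"i1": init_embedding(DIM, rng)}
words = {w: init_embedding(DIM, rng) for w in ["neural", "embedding", "text"]}
item_text = {"i1": ["neural", "embedding", "text"]}

p = predict(users["u1"], items["i1"], [words[w] for w in item_text["i1"]])
print(f"estimated preference probability: {p:.3f}")
```

In this sketch the user, item, and word vectors share one embedding space, which mirrors the idea of learning the three kinds of embeddings jointly; in a trained model they would be fitted to observed interactions rather than drawn at random.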
