Offline Reinforcement Learning for Safer Blood Glucose Control in People with Type 1 Diabetes

Apr 07, 2022

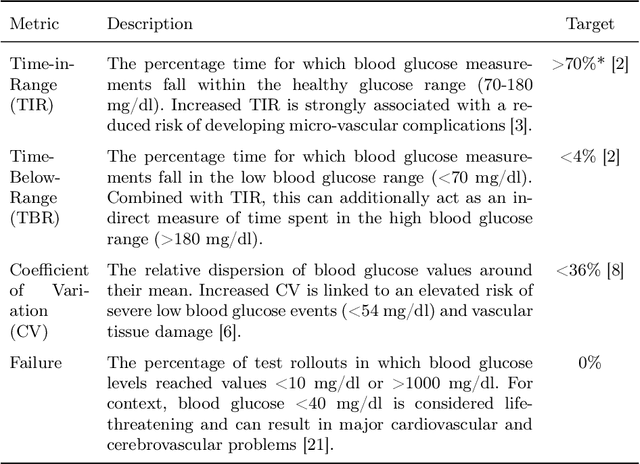

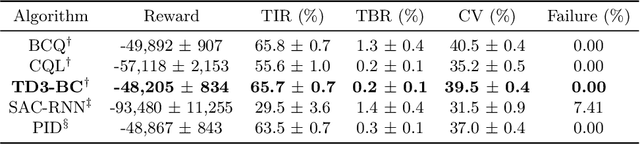

Hybrid closed-loop systems represent the future of care for people with type 1 diabetes (T1D). These devices usually utilise simple control algorithms to select the optimal insulin dose for maintaining blood glucose levels within a healthy range. Online reinforcement learning (RL) has been utilised as a method for further enhancing glucose control in these devices. Previous approaches have been shown to reduce patient risk and improve time spent in the target range when compared to classical control algorithms, but they are prone to instability in the learning process, which often results in the selection of unsafe actions. This work presents an evaluation of offline RL as a means of developing clinically effective dosing policies without the need for patient interaction. This paper examines the utility of BCQ, CQL and TD3-BC in managing the blood glucose of nine virtual patients within the UVA/Padova glucose dynamics simulator. When trained on less than a tenth of the data required by online RL approaches, offline RL is shown to significantly increase time in the healthy blood glucose range when compared to the strongest state-of-the-art baseline. This is achieved without any associated increase in low blood glucose events. Offline RL is also shown to be able to correct for common and challenging scenarios such as incorrect bolus dosing, irregular meal timings and sub-optimal training data.
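
The abstract's headline metrics are time in the healthy glucose range and the frequency of low blood glucose events. As a rough illustration of how such metrics could be computed from a glucose trace, the sketch below assumes a target range of 70 to 180 mg/dL, which is a common clinical convention and is not stated in the abstract. The function name `glucose_summary` and the sample readings are hypothetical and are not taken from the paper or its code.

```python
from typing import Dict, Sequence

# Assumed target range in mg/dL (a common clinical convention, not from the paper).
TARGET_LOW = 70.0
TARGET_HIGH = 180.0


def glucose_summary(
    readings: Sequence[float],
    low: float = TARGET_LOW,
    high: float = TARGET_HIGH,
) -> Dict[str, float]:
    """Return the percentage of readings in, below and above the target range.

    Readings are assumed to be evenly spaced in time, so the share of
    readings is used as a stand-in for the share of time.
    """
    if not readings:
        raise ValueError("readings must not be empty")

    n = len(readings)
    below = sum(1 for g in readings if g < low)    # low glucose readings
    above = sum(1 for g in readings if g > high)   # high glucose readings
    in_range = n - below - above                   # low <= g <= high

    return {
        "time_in_range": 100.0 * in_range / n,
        "time_below": 100.0 * below / n,
        "time_above": 100.0 * above / n,
    }


# Hypothetical trace of eight evenly spaced readings (mg/dL).
trace = [65.0, 90.0, 120.0, 150.0, 185.0, 200.0, 110.0, 95.0]
summary = glucose_summary(trace)
print(summary)
```

Under these assumptions, a dosing policy that improves on a baseline would raise `time_in_range` without raising `time_below`, which mirrors the comparison described in the abstract.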
