Neural-Symbolic Integration for Interactive Learning and Conceptual Grounding


Jan 17, 2022

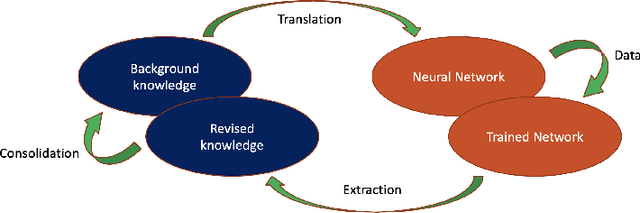

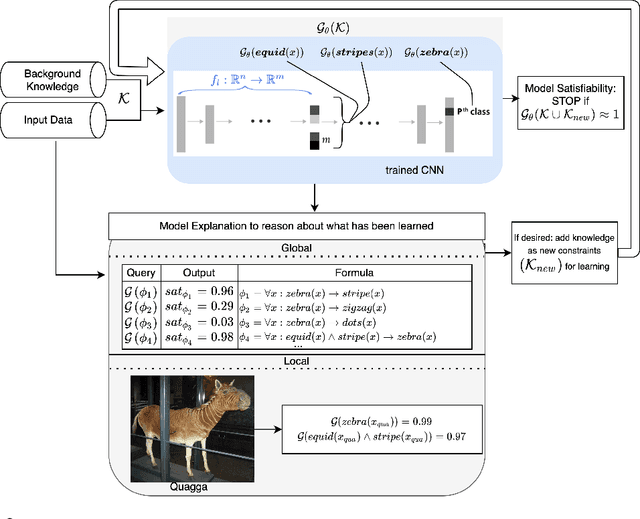

We propose neural-symbolic integration for abstract concept explanation and interactive learning. Neural-symbolic integration and explanation allow users and domain experts to learn about the data-driven decision-making process of large neural models. The models are queried using a symbolic logic language. Interaction with the user then confirms or rejects a revision of the neural model using logic-based constraints that can be distilled into the model architecture. The approach is illustrated using the Logic Tensor Network framework alongside Concept Activation Vectors and applied to a Convolutional Neural Network.
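To make the idea of querying a network with a logic language more concrete, the following is a minimal, self-contained sketch and not the authors' implementation. It assumes a simplified concept direction computed as a difference of class means (Concept Activation Vectors are normally obtained from a trained linear classifier), a sigmoid to turn the projection onto that direction into a fuzzy truth value, the Reichenbach implication, and a mean aggregator standing in for the universal quantifier. All function names, the toy activations, and the class probabilities are hypothetical.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def concept_direction(concept_acts, random_acts):
    """Simplified stand-in for a Concept Activation Vector.

    Assumption: the direction is the difference of the two class means,
    and the bias places the decision boundary at their midpoint.
    """
    mu_c = mean_vector(concept_acts)
    mu_r = mean_vector(random_acts)
    direction = [c - r for c, r in zip(mu_c, mu_r)]
    midpoint = [(c + r) / 2 for c, r in zip(mu_c, mu_r)]
    bias = sum(d * m for d, m in zip(direction, midpoint))
    return direction, bias

def concept_truth(activation, direction, bias):
    """Fuzzy truth value in (0, 1) of 'this activation shows the concept'."""
    score = sum(d * a for d, a in zip(direction, activation)) - bias
    return 1.0 / (1.0 + math.exp(-score))

def implies(a, b):
    """Reichenbach fuzzy implication: 1 - a + a * b."""
    return 1.0 - a + a * b

def forall(truths):
    """Mean aggregator used here as a soft universal quantifier."""
    return sum(truths) / len(truths)

# Hypothetical 2-D layer activations for concept and random examples.
concept_acts = [[2.0, 0.1], [1.8, -0.2], [2.2, 0.0]]
random_acts = [[-2.0, 0.0], [-1.9, 0.3], [-2.1, -0.1]]
direction, bias = concept_direction(concept_acts, random_acts)

# Each sample pairs an activation with a hypothetical class probability
# produced by the network for that input.
samples = [([2.1, 0.0], 0.95), ([-2.0, 0.1], 0.10), ([1.9, 0.2], 0.20)]

# Query: forall x. Concept(x) -> Class(x)
truths = [implies(concept_truth(act, direction, bias), p) for act, p in samples]
satisfaction = forall(truths)
print(f"satisfaction of the query: {satisfaction:.3f}")
```

The third sample shows the concept strongly but receives a low class probability, so it pulls the satisfaction level down. In an interactive setting, a user could inspect such cases and decide whether the rule should be added as a constraint during further training.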

* 1st Workshop on Human and Machine Decisions (WHMD 2021), NeurIPS 2021
* Corrected references
