Multi-Relational Hyperbolic Word Embeddings from Natural Language Definitions

Paper and Code

May 12, 2023

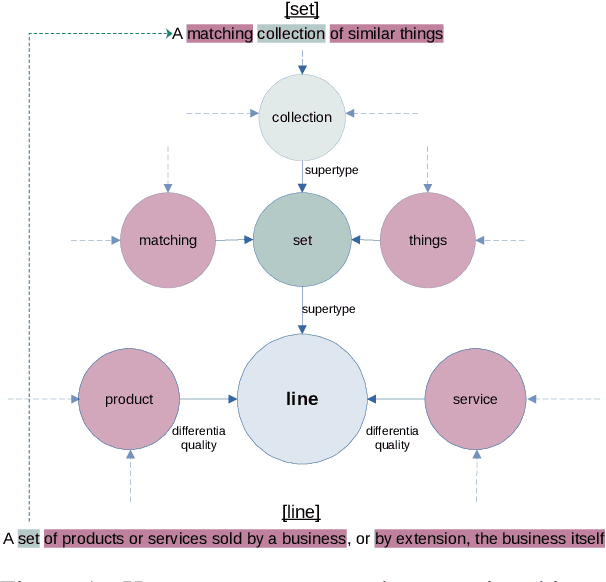

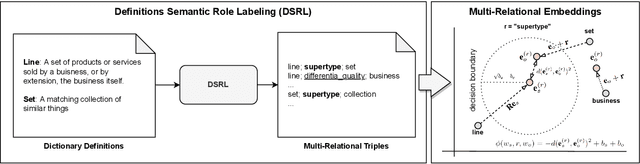

Neural word embeddings that rely solely on distributional information have consistently produced useful meaning representations for downstream tasks. However, existing approaches often yield representations that are hard to interpret and control. Natural language definitions, on the other hand, possess a recursive, self-explanatory semantic structure that can support novel representation learning paradigms able to preserve explicit conceptual relations and constraints in the vector space. This paper proposes a neuro-symbolic, multi-relational framework to learn word embeddings exclusively from natural language definitions by jointly mapping defined and defining terms along with their corresponding semantic relations. By automatically extracting the relations from definition corpora and formalising the learning problem via a translational objective, we specialise the framework in hyperbolic space to capture the hierarchical and multi-resolution structure induced by the definitions. An extensive empirical analysis demonstrates that the framework can help impose the desired structural constraints while preserving the mapping required for controllable and interpretable semantic navigation. Moreover, the experiments show that the hyperbolic word embeddings outperform their Euclidean counterparts and that the multi-relational framework obtains competitive results when compared to state-of-the-art neural approaches (including Transformers), with the advantage of being significantly more efficient and intrinsically interpretable.
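To make the idea of a translational objective in hyperbolic space more concrete, the following is a minimal sketch on the Poincaré ball (curvature -1). It is not taken from the paper: the function names (`mobius_add`, `poincare_distance`, `score`), the choice to represent a relation as a point on the ball that translates the defined term via Möbius addition, and the toy vectors are all assumptions made for illustration. The paper's actual scoring function and training procedure may differ.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def mobius_add(u, v):
    """Möbius addition u (+) v on the Poincaré ball with curvature -1."""
    uv, uu, vv = dot(u, v), dot(u, u), dot(v, v)
    denom = 1 + 2 * uv + uu * vv
    cu = (1 + 2 * uv + vv) / denom
    cv = (1 - uu) / denom
    return [cu * a + cv * b for a, b in zip(u, v)]

def poincare_distance(u, v):
    """Geodesic distance d(u, v) = 2 * artanh(|| (-u) (+) v ||)."""
    diff = mobius_add([-a for a in u], v)
    norm = math.sqrt(dot(diff, diff))
    # Clamp to stay strictly inside the ball and avoid atanh(1).
    return 2 * math.atanh(min(norm, 1 - 1e-12))

def score(head, relation, tail):
    """Hypothetical translational score: higher means more plausible.

    The defined term (head) is translated by the relation vector and
    compared with the defining term (tail) under hyperbolic distance.
    """
    return -poincare_distance(mobius_add(head, relation), tail) ** 2

# Toy 2-D embeddings, chosen arbitrarily for illustration.
head = [0.1, 0.2]
relation = [0.3, -0.1]
good_tail = mobius_add(head, relation)  # lies exactly at the translated point
bad_tail = [-0.5, 0.4]

print(score(head, relation, good_tail) > score(head, relation, bad_tail))
```

In a sketch like this, training would adjust the embeddings so that triples extracted from definitions score higher than corrupted ones. Because distances on the Poincaré ball grow rapidly towards the boundary, general terms can sit near the origin and more specific terms further out, which is one common intuition for why hyperbolic space suits hierarchical structure.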
