Modeling Dual-Exposure Quad-Bayer Patterns for Joint Denoising and Deblurring

Paper and Code

Dec 10, 2024

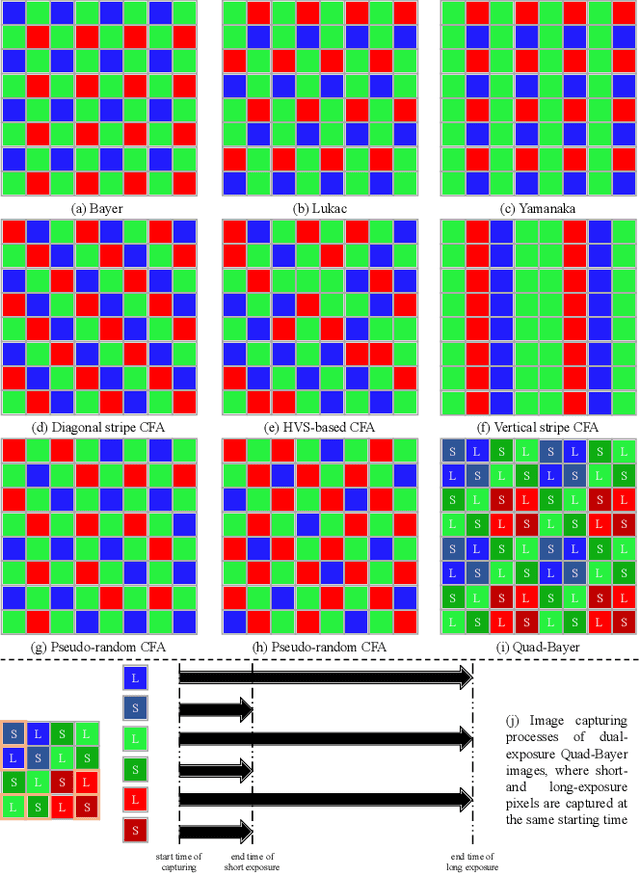

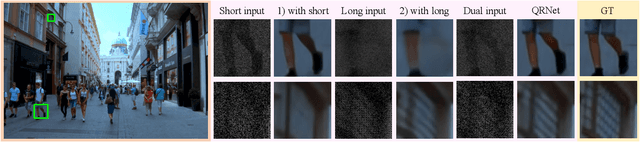

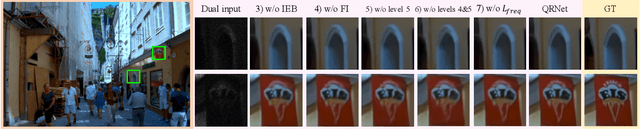

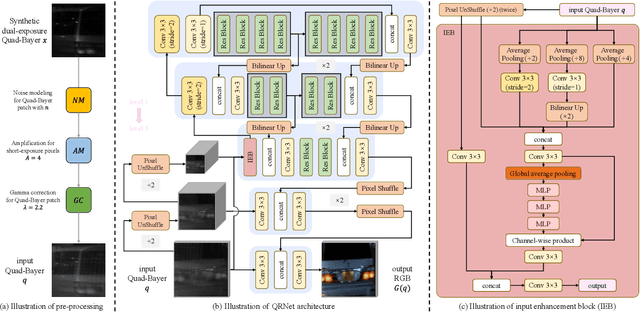

Image degradation caused by noise and blur remains a persistent challenge in imaging systems, stemming from limitations in both hardware and methodology. Single-image solutions face an inherent tradeoff between noise and motion blur: short exposures freeze motion but suffer from amplified noise, whereas long exposures reduce noise but introduce blur. Learning-based single-image enhancers tend to over-smooth their output because a single frame carries limited information. Multi-image solutions using burst mode avoid this tradeoff by capturing more spatio-temporal information, but they often struggle with misalignment caused by camera or scene motion. To address these limitations, we propose a physical-model-based image restoration approach that leverages a novel dual-exposure Quad-Bayer pattern sensor. By capturing pairs of short and long exposures that start at the same instant but differ in duration, this method integrates complementary noise and blur information within a single image. We further introduce a Quad-Bayer synthesis method (B2QB) that simulates sensor data from Bayer patterns to facilitate training. Based on this dual-exposure sensor model, we design a hierarchical convolutional neural network called QRNet to recover high-quality RGB images. The network incorporates input enhancement blocks and multi-level feature extraction to improve restoration quality. Experiments demonstrate superior performance over state-of-the-art deblurring and denoising methods on both synthetic and real-world datasets. The code, model, and datasets are publicly available at https://github.com/zhaoyuzhi/QRNet.
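To make the idea of packing short and long exposures into one Quad-Bayer frame more concrete, the following is a minimal NumPy sketch. It is not the paper's B2QB procedure or the sensor's actual layout. The RGGB block order, the checkerboard placement of long-exposure pixels inside each 2x2 same-color block, and all function names (`quad_bayer_channel_map`, `long_exposure_mask`, `dual_exposure_mosaic`) are assumptions made purely for illustration.

```python
import numpy as np


def quad_bayer_channel_map(height, width):
    """Per-pixel color index (0=R, 1=G, 2=B) for a Quad-Bayer mosaic.

    Assumption: each 2x2 block shares one color and blocks follow RGGB order.
    """
    tile = np.array([
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [1, 1, 2, 2],
        [1, 1, 2, 2],
    ])
    reps = (-(-height // 4), -(-width // 4))  # ceiling division
    return np.tile(tile, reps)[:height, :width]


def long_exposure_mask(height, width):
    """Boolean mask that is True where a pixel uses the long exposure.

    Assumption: within every 2x2 block the two diagonal pixels are long
    exposure and the two anti-diagonal pixels are short exposure.
    """
    rows = np.arange(height)[:, None]
    cols = np.arange(width)[None, :]
    return (rows + cols) % 2 == 0


def dual_exposure_mosaic(short_rgb, long_rgb):
    """Interleave two aligned RGB frames (H, W, 3) into one raw mosaic (H, W)."""
    if short_rgb.shape != long_rgb.shape or short_rgb.shape[-1] != 3:
        raise ValueError("expected two RGB arrays of identical shape (H, W, 3)")
    height, width, _ = short_rgb.shape
    channels = quad_bayer_channel_map(height, width)
    is_long = long_exposure_mask(height, width)
    rows, cols = np.indices((height, width))
    # Sample the color that the filter array would pass at each pixel.
    short_raw = short_rgb[rows, cols, channels]
    long_raw = long_rgb[rows, cols, channels]
    return np.where(is_long, long_raw, short_raw)
```

For example, `dual_exposure_mosaic(noisy_short, blurry_long)` with two hypothetical aligned `(H, W, 3)` arrays returns a single `(H, W)` raw frame in which neighboring pixels of the same color carry either the noisy-but-sharp or the clean-but-blurred measurement, which is the kind of complementary input a restoration network could exploit.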
