Mixer Metaphors: audio interfaces for non-musical applications

Apr 16, 2025

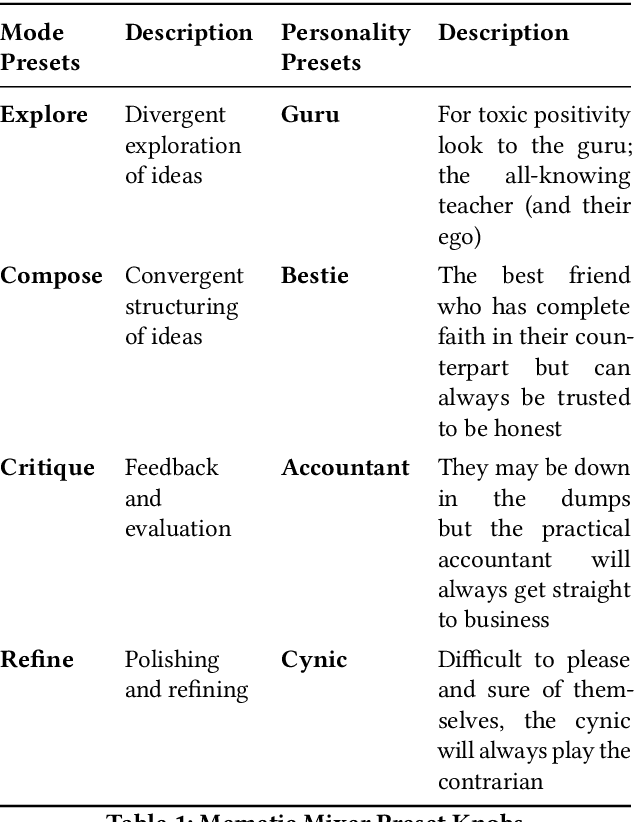

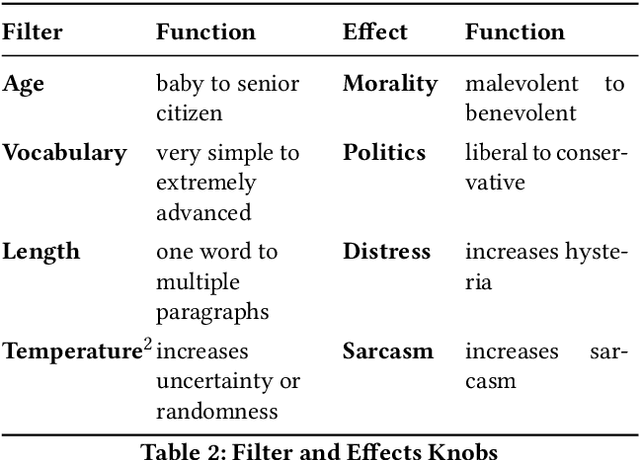

The NIME conference traditionally focuses on interfaces for music and musical expression. In this paper, we reverse this tradition to ask: can interfaces developed for music be successfully appropriated for non-musical applications? To help answer this question, we designed and developed a new device that uses interface metaphors borrowed from analogue synthesisers and audio mixing to physically control the intangible aspects of a Large Language Model (LLM). We compared two versions of the device, with and without the audio-inspired augmentations, with a group of artists who used each version over a one-week period. Our results show that the audio-like controls afforded more immediate, direct and embodied control over the LLM, allowing users to experiment and play with the device more creatively than with its non-mixer counterpart. Our project demonstrates how cross-sensory metaphors can support creative thinking and embodied practice when designing new technological interfaces.
