Minimalist Regression Network with Reinforced Gradients and Weighted Estimates: a Case Study on Parameters Estimation in Automated Welding


Jul 05, 2016

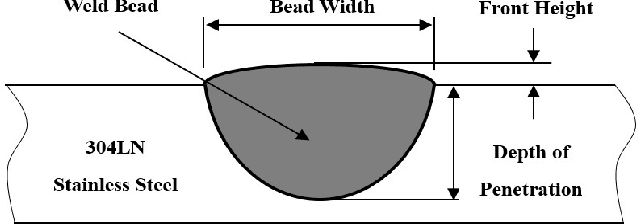

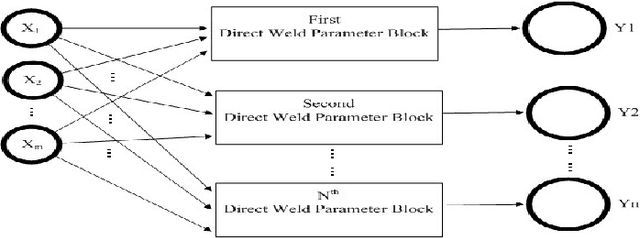

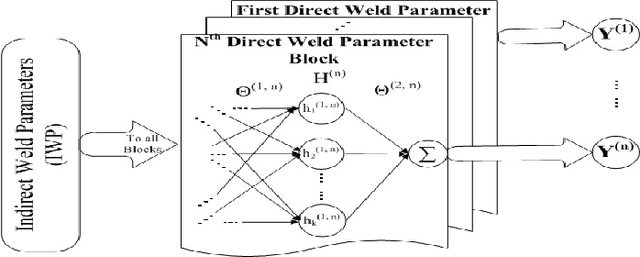

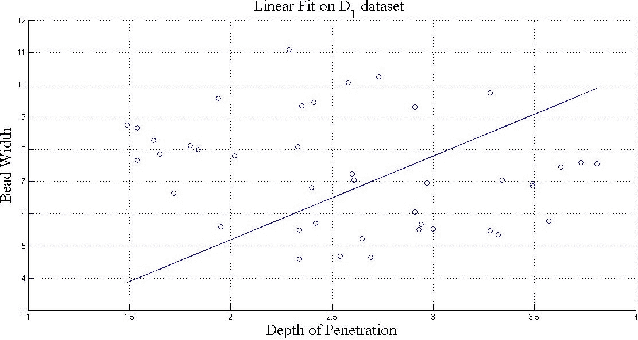

This paper presents a minimalist neural regression network built as an aggregate of independent, identical regression blocks that are trained simultaneously. It introduces a new multiplicative parameter, shared by all the neural units of a given layer, to maintain the quality of that layer's gradients. It further improves estimation accuracy by learning a weight factor whose value captures the redundancy between the estimated and actual values at each training iteration. We use the estimation of the direct weld parameters of different welding techniques as a case study and show a significant improvement in the calculation of these parameters by our model over state-of-the-art techniques in the literature. We also demonstrate that our model retains its performance when presented with combined data from different welding techniques. This is a nontrivial result toward attaining a scalable model whose quality of estimation is independent of the adopted welding technique.
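To make the architecture in the abstract more concrete, the following is a minimal forward-pass sketch in NumPy. The abstract does not give the paper's equations, so everything here is an assumption made for illustration: the single tanh hidden layer per block, the placement of the layer-shared scalar `alpha` on the pre-activation, the averaging rule used to aggregate the blocks, and all names (`init_block`, `block_forward`, `aggregate_forward`). The learned weight factor between estimated and actual values is not modeled here.

```python
import numpy as np

def init_block(rng, n_in, n_hidden):
    """Create one regression block (assumed: one hidden layer, linear output)."""
    return {
        "W1": rng.normal(0.0, 0.1, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        # Hypothetical multiplicative parameter shared by every unit of the
        # hidden layer; its exact form in the paper is not given in the abstract.
        "alpha": 1.0,
        "W2": rng.normal(0.0, 0.1, size=(n_hidden, 1)),
        "b2": np.zeros(1),
    }

def block_forward(block, X):
    """Forward pass of one block; alpha scales the whole layer's pre-activation."""
    hidden = np.tanh(block["alpha"] * (X @ block["W1"] + block["b1"]))
    return hidden @ block["W2"] + block["b2"]

def aggregate_forward(blocks, X):
    """Combine the independent block estimates by averaging (assumed rule)."""
    outputs = np.stack([block_forward(b, X) for b in blocks], axis=0)
    return outputs.mean(axis=0)

rng = np.random.default_rng(0)
# Three identical blocks with independent parameters.
blocks = [init_block(rng, n_in=4, n_hidden=8) for _ in range(3)]
X = rng.normal(size=(5, 4))          # 5 samples, 4 input features
y_hat = aggregate_forward(blocks, X)  # one estimate per sample
```

Because `alpha` is a single scalar per layer, it rescales the pre-activations (and hence the gradients flowing through them) for all units of that layer at once. That is one plausible reading of "maintaining the quality of gradients", not a confirmed description of the authors' method.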
