MicroExpNet: An Extremely Small and Fast Model For Expression Recognition From Frontal Face Images


Aug 13, 2018

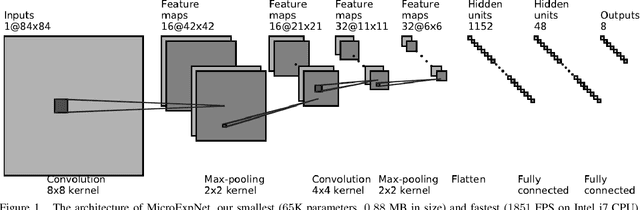

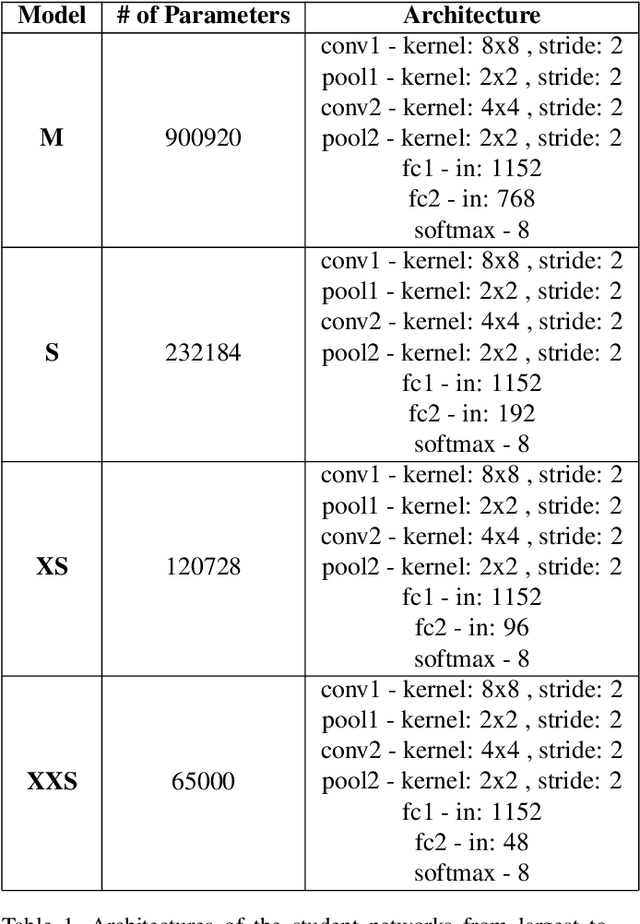

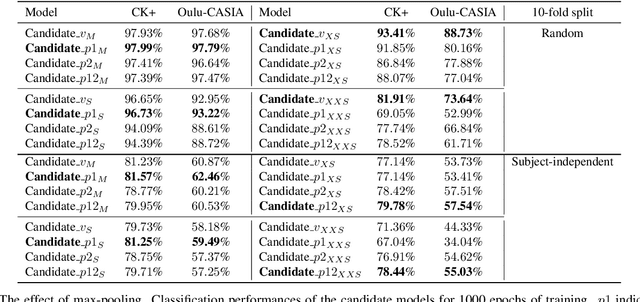

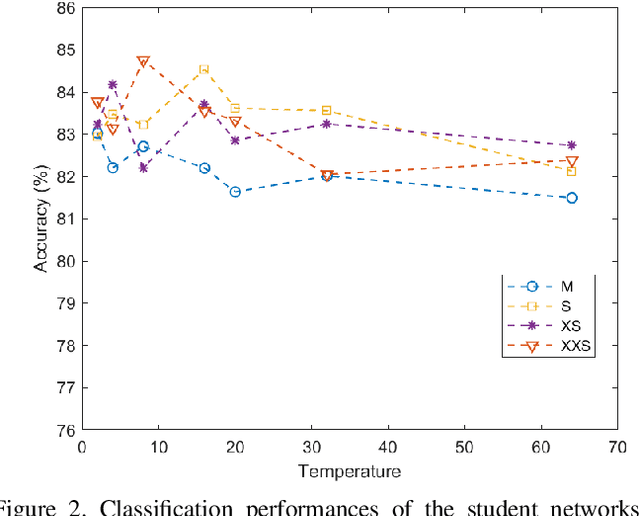

This paper aims to create extremely small and fast convolutional neural networks (CNNs) for facial expression recognition (FER) from frontal face images. We show that, for this problem, translation invariance (achieved through max-pooling layers) degrades performance, especially when the network is small, and that knowledge distillation can be used to obtain extremely compressed CNNs. Extensive comparisons are made on two widely used FER datasets, CK+ and Oulu-CASIA. Our smallest model (MicroExpNet), obtained using knowledge distillation, is less than 1 MB in size and runs at 1408 frames per second on an Intel i7 CPU. MicroExpNet performs on par with our largest model on the CK+ and Oulu-CASIA datasets while having 330× fewer parameters.
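The abstract credits knowledge distillation for MicroExpNet's compression but does not state the training objective. A minimal sketch follows, assuming the standard soft-target formulation of Hinton et al. (2015) rather than the authors' exact setup: the small student is trained against both the ground-truth label and the large teacher's temperature-softened outputs. The function names and the `temperature` and `alpha` defaults are hypothetical, chosen only for illustration, and the code is plain Python on a single example so it stays self-contained; a real training loop would use a deep learning framework and batch the computation.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled log-softmax, computed stably via the log-sum-exp trick."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_norm = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_norm for z in scaled]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    label: int,
    temperature: float = 4.0,  # hypothetical value, not taken from the paper
    alpha: float = 0.5,        # hypothetical weight on the soft-target term
) -> float:
    """Hinton-style KD loss for one example (an assumed formulation, not the authors' code)."""
    # Soft-target term: KL(teacher || student) between temperature-softened distributions.
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student_soft = log_softmax(student_logits, temperature)
    soft = sum(
        math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_teacher, log_p_student_soft)
    )
    # Hard-target term: ordinary cross-entropy with the true label at temperature 1.
    hard = -log_softmax(student_logits, 1.0)[label]
    # Scaling the soft term by T^2 keeps its gradient magnitude comparable across temperatures.
    return alpha * (temperature ** 2) * soft + (1.0 - alpha) * hard


if __name__ == "__main__":
    # Hypothetical logits for a 3-class toy problem; real FER models have more classes.
    teacher = [2.0, 0.5, -1.0]
    student = [1.0, 0.8, -0.5]
    print(distillation_loss(student, teacher, label=0))
```

The T² factor follows Hinton et al.: softening both distributions with a large temperature shrinks the soft-term gradients by roughly 1/T², so the rescaling lets `alpha` keep a similar meaning as `temperature` changes.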
