Meteorological Satellite Images Prediction Based on Deep Multi-scales Extrapolation Fusion

Sep 19, 2022

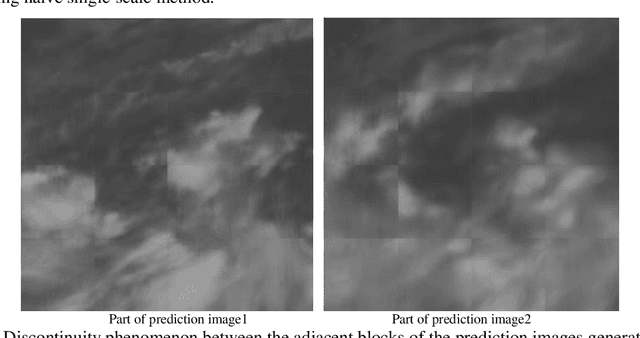

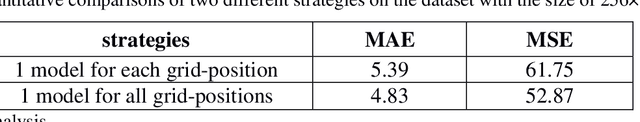

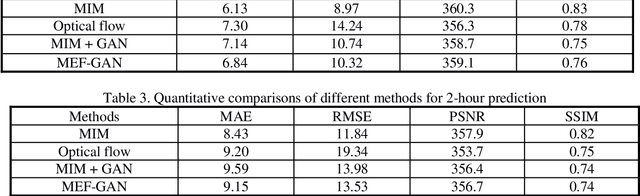

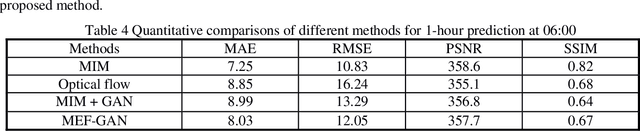

Meteorological satellite imagery is critical for meteorologists, and the data play an important role in monitoring and analyzing weather and climate change. However, satellite imagery is observational data, and there is a significant time delay in transmitting it back to Earth. It is therefore important to predict meteorological satellite images accurately, especially for nowcasting up to 2 hours ahead. In recent years, there has been growing interest in deep-learning-based nowcasting of weather radar images. Compared with weather radar image prediction, the main challenge for meteorological satellite image prediction is the large observation area and, consequently, the large size of the observation products. Here we present a deep multi-scales extrapolation fusion method to address this challenge. First, we downsample the original large satellite image dataset into several image datasets with lower resolutions, and then use a deep spatiotemporal sequence prediction method to generate prediction images at each resolution separately. Second, we fuse the multi-scale prediction results into target prediction images of the original size using a conditional generative adversarial network. Experiments on FY-4A meteorological satellite data show that the proposed method can generate realistic prediction images that effectively capture the evolution of weather systems in detail. We believe the general idea of this work could be applied to other large-size spatiotemporal sequence prediction tasks.
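
The first step of the method, building lower-resolution copies of an image sequence, can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the downsampling operator or the scale factors, so average pooling and the factors `(1, 2, 4)` are assumptions, and the names `downsample` and `build_pyramid` are hypothetical. The per-scale sequence predictor and the conditional GAN fusion stage are not shown.

```python
from typing import Dict, List, Sequence

Frame = List[List[float]]  # one 2-D image as nested lists of pixel values


def downsample(frame: Frame, factor: int) -> Frame:
    """Average-pool a frame by an integer factor.

    Assumption: average pooling stands in for whatever downsampling
    the paper actually uses. Height and width must be divisible by factor.
    """
    height, width = len(frame), len(frame[0])
    if height % factor or width % factor:
        raise ValueError("frame dimensions must be divisible by factor")
    pooled: Frame = []
    for i in range(0, height, factor):
        row = []
        for j in range(0, width, factor):
            # Collect the factor x factor block and replace it with its mean.
            block = [frame[i + di][j + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        pooled.append(row)
    return pooled


def build_pyramid(frames: Sequence[Frame],
                  factors: Sequence[int] = (1, 2, 4)) -> Dict[int, List[Frame]]:
    """Return one downsampled copy of the whole sequence per scale factor.

    The factors are hypothetical; each resulting sequence would be passed
    to its own spatiotemporal prediction model.
    """
    return {f: [downsample(frame, f) for frame in frames] for f in factors}
```

Calling `build_pyramid(frames)` on a list of equally sized frames returns a dictionary keyed by scale factor, where each value is the full sequence at that resolution. Keeping the scales in separate sequences mirrors the abstract's description of predicting each resolution separately before fusion.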
