Localized Perturbations For Weakly-Supervised Segmentation of Glioma Brain Tumours


Nov 29, 2021

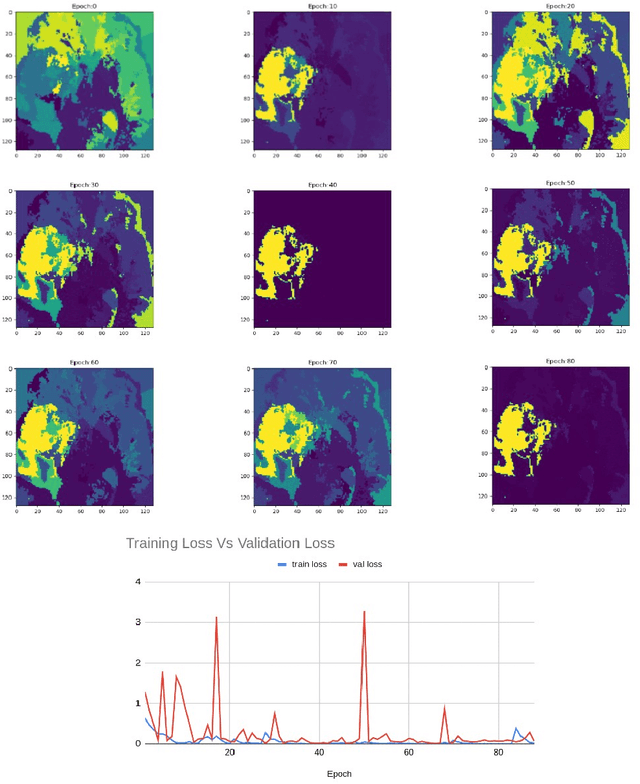

Deep convolutional neural networks (CNNs) have become an essential tool in the medical imaging-based computer-aided diagnostic pipeline. However, training accurate and reliable CNNs requires large datasets with fine-grained annotations. To alleviate this requirement, weakly-supervised methods can be used to obtain local information from global labels. This work proposes the use of localized perturbations as a weakly-supervised solution to extract segmentation masks of brain tumours from a pretrained 3D classification model. Furthermore, we propose a novel optimal perturbation method that exploits 3D superpixels to find the most relevant area for a given classification using a U-net architecture. Our method achieved a Dice similarity coefficient (DSC) of 0.44 when compared with expert annotations. It also outperformed Grad-CAM in both visualization and localization of the tumour region, with Grad-CAM achieving an average DSC of only 0.11.
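For readers unfamiliar with the metric, the DSC between a predicted mask $A$ and an expert mask $B$ is $2|A \cap B| / (|A| + |B|)$. The following is a minimal sketch of that computation on binary 3D volumes, assuming NumPy arrays; the function name `dice_coefficient`, the toy volumes, and the convention of returning 1.0 when both masks are empty are illustrative assumptions and are not taken from the paper's code.

```python
import numpy as np


def dice_coefficient(pred, target):
    """Dice similarity coefficient between two binary masks of equal shape.

    Hypothetical helper for illustration; not the authors' implementation.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")

    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return float(2.0 * intersection / total)


# Toy 3D volumes (assumed shapes, for illustration only).
pred = np.zeros((4, 4, 4), dtype=bool)
pred[1:3, 1:3, 1:3] = True      # 8 predicted voxels
target = np.zeros((4, 4, 4), dtype=bool)
target[1:3, 1:3, 1:4] = True    # 12 annotated voxels, 8 of them overlapping

score = dice_coefficient(pred, target)  # 2 * 8 / (8 + 12) = 0.8
```

A score of 1.0 indicates perfect overlap and 0.0 indicates none, which gives a sense of scale for the reported 0.44 and 0.11 averages.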
