Learning the Noise of Failure: Intelligent System Tests for Robots


Feb 16, 2021

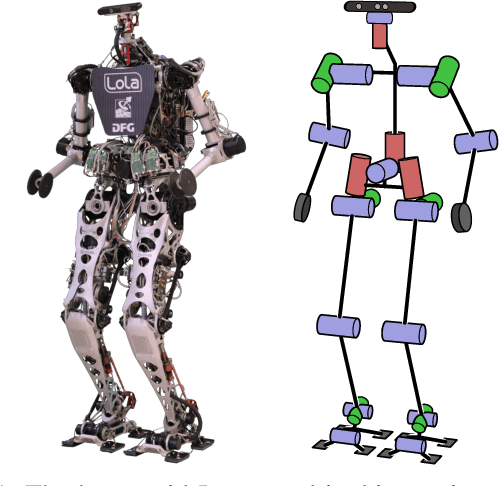

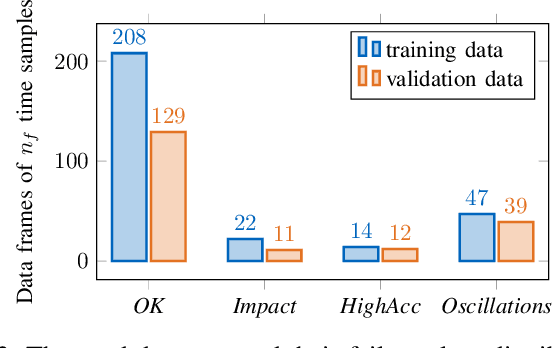

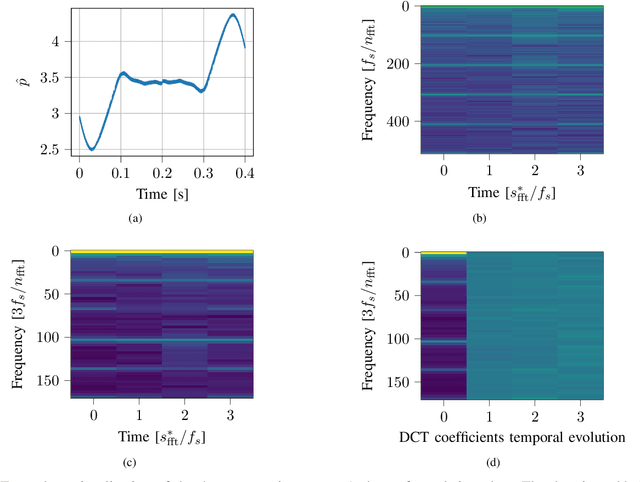

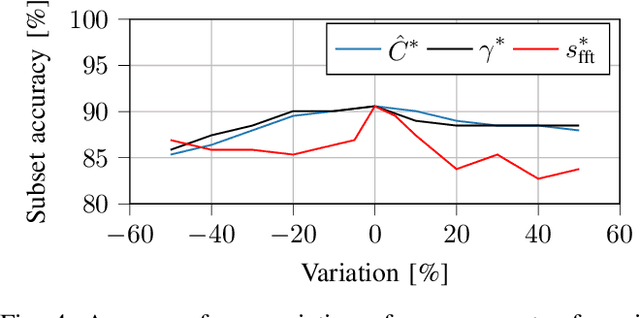

Roboticists usually test new control software in simulation environments before evaluating its functionality on real-world robots. Simulations reduce the risk of damaging the hardware and, in the form of automated system tests, can significantly increase the efficiency of the development process. However, many software flaws remain undetected in simulation data and reveal their harmful effects on the system only in time-consuming real-world experiments. In such experiments, these irregularities are often easy to recognize from the robot's airborne noise alone. We propose a simulated noise estimate for detecting failures in automated system tests of robots. Flaws are classified with classical machine learning: a support vector machine (SVM) identifies different failure classes from the scalar noise estimate. The methodology is evaluated on simulation data from the humanoid robot LOLA. The approach yields high failure detection accuracy with a low false-positive rate, which makes it suitable for stricter automated system tests. Results indicate that a single trained model may work for different robots. The proposed technique is provided to the community as the open-source tool NoisyTest, making it easy to test data from any robot. In a broader scope, the technique may enable real-world automated system tests without human evaluation of success or failure.
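To make the classification step more concrete, here is a minimal sketch of training an SVM on features of a scalar noise signal. It is not NoisyTest's API or the authors' pipeline. Everything in it is an assumption for illustration: the synthetic "noise estimate" (failures are modeled simply as a louder, higher-variance signal), the hypothetical per-window features (mean, RMS, peak), the function names, and the use of a binary failure/nominal label instead of several failure classes. The SVM is a plain linear one trained with Pegasos, i.e. stochastic subgradient descent on the L2-regularized hinge loss.

```python
import math
import random


def window_features(signal, window):
    """Split a scalar noise estimate into non-overlapping windows and return
    hypothetical per-window features [mean, RMS, peak, 1.0].
    The trailing 1.0 lets the linear model learn a bias term."""
    feats = []
    for start in range(0, len(signal) - window + 1, window):
        chunk = signal[start:start + window]
        mean = sum(chunk) / window
        rms = math.sqrt(sum(x * x for x in chunk) / window)
        peak = max(abs(x) for x in chunk)
        feats.append([mean, rms, peak, 1.0])
    return feats


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Train a linear SVM with Pegasos (stochastic subgradient descent on
    the L2-regularized hinge loss). Labels: +1 = failure, -1 = nominal."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    order = list(range(len(X)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
            # The gradient of the regularizer shrinks the weights ...
            w = [(1.0 - eta * lam) * wj for wj in w]
            # ... and a hinge-loss violation pulls them toward y_i * x_i.
            if margin < 1.0:
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def predict(w, x):
    """Return +1 (failure) or -1 (nominal) from the sign of w . x."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0.0 else -1


def synthetic_noise(n, failure, rng):
    """Hypothetical stand-in for a simulated noise estimate: a failure is
    modeled only as a higher-variance signal."""
    sigma = 1.0 if failure else 0.1
    return [rng.gauss(0.0, sigma) for _ in range(n)]


def make_dataset(n_windows, window, rng):
    """Build labeled feature windows for both classes."""
    X, y = [], []
    for label, failure in ((1, True), (-1, False)):
        signal = synthetic_noise(n_windows * window, failure, rng)
        feats = window_features(signal, window)
        X.extend(feats)
        y.extend([label] * len(feats))
    return X, y


if __name__ == "__main__":
    rng = random.Random(42)
    X_train, y_train = make_dataset(20, 50, rng)
    X_test, y_test = make_dataset(20, 50, rng)
    w = train_linear_svm(X_train, y_train)
    preds = [predict(w, x) for x in X_test]
    accuracy = sum(p == truth for p, truth in zip(preds, y_test)) / len(y_test)
    nominal = [p for p, truth in zip(preds, y_test) if truth == -1]
    fp_rate = sum(p == 1 for p in nominal) / len(nominal)
    print(f"accuracy={accuracy:.2f} false_positive_rate={fp_rate:.2f}")
```

A linear SVM is used here only because it fits in a few dozen dependency-free lines. A practical setup would more likely use an established SVM library, possibly with a nonlinear kernel and one-vs-rest classification to separate several failure classes as the abstract describes. The false-positive rate printed at the end corresponds to the "low false-positive rate" criterion mentioned above, which matters because every false alarm would fail an otherwise correct automated test.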
