Latent space conditioning for improved classification and anomaly detection

Nov 28, 2019

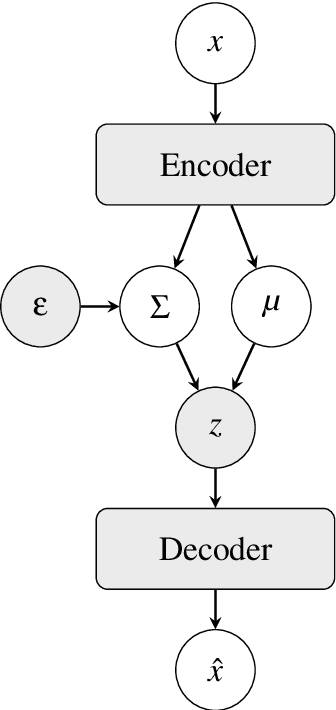

We propose a new type of variational autoencoder to perform improved pre-processing for clustering and anomaly detection on data with a given label. Anomalies, however, are not known or labeled. We call our method the conditional latent space variational autoencoder, since it separates the latent space by conditioning on information within the data. The method fits one prior distribution to each class in the dataset, effectively expanding the prior distribution into a Gaussian mixture model. We compare our approach against a typical variational autoencoder by measuring the V-score of the clusters formed by the k-means and EM algorithms. For anomaly detection, we use a new metric composed of the mass-volume and excess-mass curves, which can work in an unsupervised setting. We compare the results against established methods such as isolation forest, local outlier factor, and one-class support vector machine.
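The idea of fitting one prior per class can be illustrated with the KL term of a variational autoencoder. The following is a minimal sketch, not the authors' implementation: it assumes a diagonal Gaussian encoder and, purely for illustration, unit-variance Gaussian priors whose means differ by class. The names `PRIOR_MEANS`, `kl_to_class_prior`, and `conditional_kl` and the 2-D latent space are hypothetical.

```python
import math

# Hypothetical class-specific prior means in a 2-D latent space (assumption:
# each class c has a prior N(m_c, I); the paper may parameterize this differently).
PRIOR_MEANS = {0: [-3.0, 0.0], 1: [3.0, 0.0]}


def kl_to_class_prior(mu, log_var, prior_mean):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(prior_mean, I) ) for one sample."""
    return 0.5 * sum(
        math.exp(lv) + (m - p) ** 2 - 1.0 - lv
        for m, lv, p in zip(mu, log_var, prior_mean)
    )


def conditional_kl(mu, log_var, label):
    """KL term measured against the prior that belongs to the sample's class label."""
    return kl_to_class_prior(mu, log_var, PRIOR_MEANS[label])


# An encoding that sits exactly on the class-1 prior incurs no penalty for
# label 1, but a large penalty if it were labeled as class 0.
matched = conditional_kl([3.0, 0.0], [0.0, 0.0], label=1)
mismatched = conditional_kl([3.0, 0.0], [0.0, 0.0], label=0)
```

Under these assumptions, minimizing the term pulls each class toward its own prior mean, so the aggregate prior over all classes behaves like a Gaussian mixture with one component per class.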
