Kaggle Kinship Recognition Challenge: Introduction of Convolution-Free Model to boost conventional

Paper and Code

Jun 11, 2022

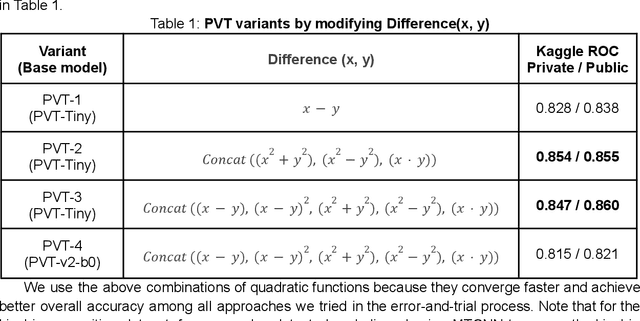

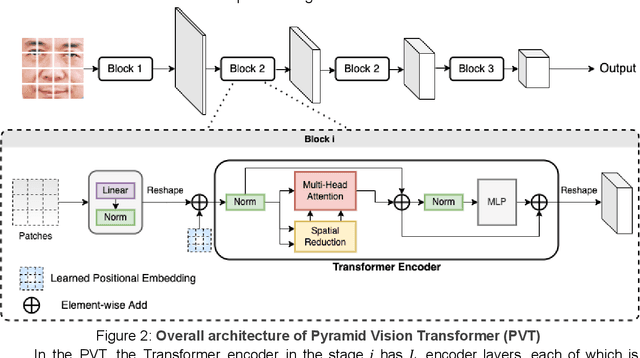

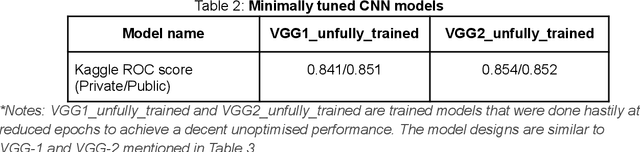

This work explores a convolution-free base classifier that can widen the variety of base models in a conventional ensemble classifier. Specifically, we propose Vision Transformers as base classifiers to combine with CNNs for a unique ensemble solution in the Kaggle kinship recognition challenge. We verify the proposed idea by implementing and optimizing variants of the Vision Transformer model on top of existing CNN models. The combined models achieve better scores than conventional ensemble classifiers based solely on CNN variants. We demonstrate that highly optimized CNN ensembles publicly available on the Kaggle Discussion board can easily achieve a significant boost in ROC score simply by ensembling them with variants of the Vision Transformer model, because the predictions of the two model families have low correlation.
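The low-correlation argument can be illustrated with a minimal sketch. It uses synthetic scores rather than the paper's models or data: `cnn_scores` and `vit_scores` are hypothetical stand-ins for two classifiers that share the same signal but make independent errors. The abstract does not state how the models are combined, so the unweighted average in `blend` is an assumption.

```python
import numpy as np

def roc_auc(labels, scores):
    """ROC AUC via the rank-sum (Mann-Whitney U) formulation; ties get average ranks."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    n = len(scores)
    order = np.argsort(scores, kind="mergesort")
    sorted_scores = scores[order]
    ranks = np.empty(n, dtype=float)
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < n and sorted_scores[j + 1] == sorted_scores[i]:
            j += 1
        # 1-based average rank for the tied run i..j.
        ranks[order[i:j + 1]] = (i + j) / 2 + 1
        i = j + 1
    n_pos = int(labels.sum())
    n_neg = n - n_pos
    return (ranks[labels].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)

def blend(score_lists, weights=None):
    """Average per-pair scores from several models (assumed blending rule)."""
    return np.average(np.vstack(score_lists), axis=0, weights=weights)

# Synthetic stand-ins: same underlying signal, independent noise per model.
rng = np.random.default_rng(0)
n = 2000
labels = rng.integers(0, 2, size=n)           # 1 = kin, 0 = not kin (synthetic)
cnn_scores = labels + rng.normal(size=n)      # hypothetical CNN ensemble output
vit_scores = labels + rng.normal(size=n)      # hypothetical ViT output

correlation = np.corrcoef(cnn_scores, vit_scores)[0, 1]
auc_cnn = roc_auc(labels, cnn_scores)
auc_vit = roc_auc(labels, vit_scores)
auc_blend = roc_auc(labels, blend([cnn_scores, vit_scores]))

print(f"correlation between models: {correlation:.3f}")
print(f"AUC cnn={auc_cnn:.3f} vit={auc_vit:.3f} blend={auc_blend:.3f}")
```

Because the two synthetic models err independently, averaging cancels part of the noise and the blended AUC exceeds either individual AUC. If the errors were highly correlated, as they might be among CNN variants trained in a similar way, the same averaging would yield a much smaller gain.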
